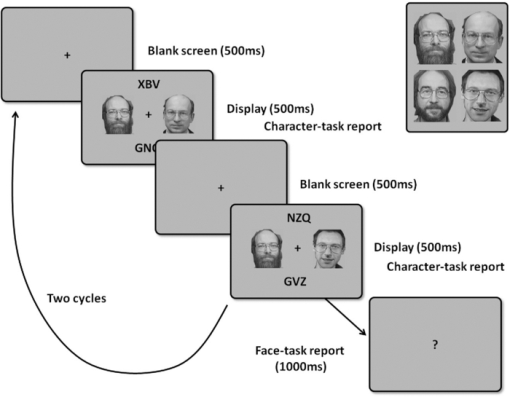

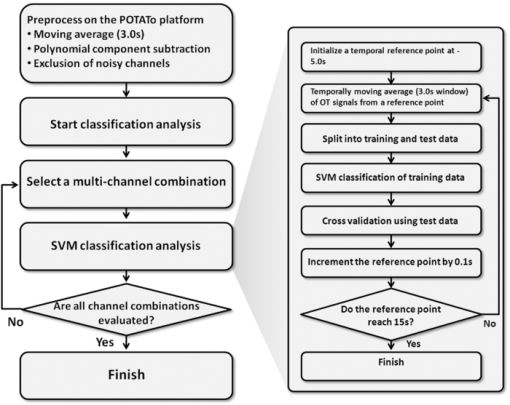

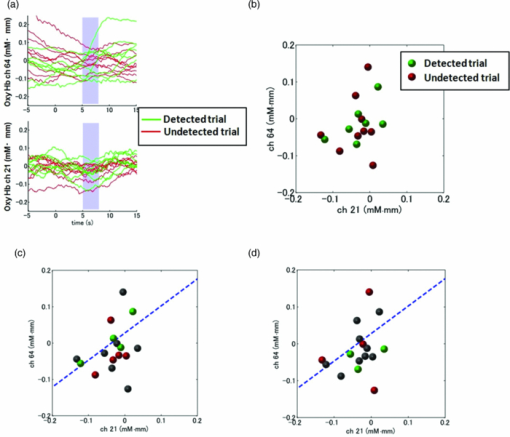

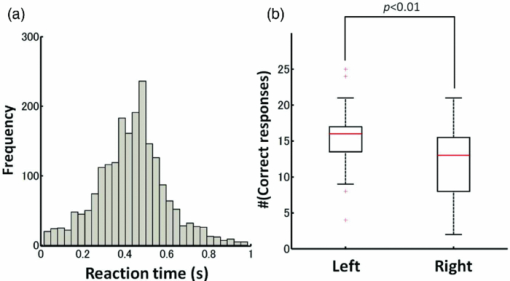

1. Introduction

Recent advances in psychophysical studies have revealed that the visual-attention system often fails to detect large and obvious changes in a visual scene if the changes are masked by a uniform gray blank screen for a short duration (i.e., a one-shot or flicker paradigm),1 made to occur slowly (slow change),2 or shown together with attentional distracters such as “mud splashes.”3 Such blindness to changes occurs not only in laboratory settings but also in real-world situations.4, 5 Contrary to the common belief that our visual system maintains a meticulously detailed duplicate of a visual scene, changes in a scene are not readily detected unless focused spatial attention is allocated to the place where they occur.6 (For a discussion of how much visual memory is maintained, see Ref. 7.) This inability to detect visual changes is referred to as change blindness and has been studied extensively in psychophysics. Understanding the neural and psychological processes underlying change detection and change blindness is of practical interest for preventing accidents caused by an operator's oversight or inattention in areas such as industrial interface design,8 operator training,9 and driving safety.10 Neural correlates of change detection and change blindness have been investigated with functional magnetic-resonance imaging (fMRI)11, 12, 13, 14 and electroencephalography (EEG),15, 16, 17, 18, 19, 20 as well as with single-unit recording in humans and monkeys.21, 22 These functional-neuroimaging studies have reported neural loci and processes related to change detection and change blindness.
The fMRI studies identified stronger activations in the dorsal and ventral visual areas12 and in the parieto-frontal attentional network when a change was detected.11, 13, 14 The EEG studies, on the other hand, revealed the sub-second temporal dynamics of these neural correlates and found increased P1 and P3 components that reflected subjective awareness of changes in a visual scene15, 16, 17 and predicted the onset of visual awareness.15, 20 A recent trend in functional neuroimaging, especially with EEG and fMRI, is to extract, or decode, the information encoded in activated neural patterns; this field is now known as brain decoding.23, 24, 25 In contrast to traditional analyses of single-voxel activation,26, 27 brain decoding uses distributed, multivoxel activation patterns to infer primary visual28, 29, 30, 31 and sensorimotor32 representations, often on a trial-by-trial basis. This approach extends neuroimaging to extracting latent information buried in subtle patterns in the data and opens new possibilities for brain–machine interfaces (BMIs) for both healthy and physically impaired users. Decoding is not limited to primary sensory and motor areas; it is also being applied successfully to higher cognitive states such as intention, decision-making, and emotion.33, 34, 35, 36 It is therefore reasonable to expect that change detection and change blindness can also be decoded from neuroimaging data. This study attempted to classify change detection and change blindness by using near-infrared spectroscopy (NIRS) signals.
NIRS has centimeter-order spatial resolution for identifying neural loci and sub-second temporal sampling for tracking hemodynamic changes,37 and has been successfully applied to sensorimotor functions,38, 39, 40, 41 visual functions,42, 43 auditory functions,44 and higher cognitive functions such as working memory,45, 46, 47 cognitive inhibition,48, 49 and language processing.50, 51 Several recent studies have extended NIRS to brain decoding of hand-movement motor imagery,52 moment-by-moment magnitudes of pinch-force production,53 subjective preference for beverages,54 and emotional responses to facial expressions,55 as well as to brain–computer interfaces.56, 57, 58 Compared with other imaging modalities such as fMRI, NIRS has the advantages of relatively low cost and negligible physical constraints, making it an ideal candidate for brain–machine interfaces in real-life environments.57 In the current study, the subjects' cerebral activity was continuously monitored with NIRS while they performed a change-detection task. We tested the hypothesis that successful and unsuccessful trials in detecting a change can be classified on a trial-by-trial basis by applying a machine-learning algorithm to the NIRS signals.

2. Methods

2.1. Change-Detection Experiment

2.1.1. Subjects

Seven subjects recruited from our laboratory (five male and two female, age 27 to 37, all with normal or corrected-to-normal vision and no reported history of neurological problems) volunteered for the behavioral experiment, and five of them (three male and two female, age 33 to 37) participated in the NIRS experiment. Two of the subjects were authors of this study. The other subjects were generally familiar with psychophysical and NIRS experiments but were not informed of the purpose of these particular experiments. All subjects provided written informed consent. All measurements were conducted with the approval of the Ethics Committee of Hitachi, Ltd.
2.1.2. Change-detection task

A task designed by Beck et al.11 was used for both the behavioral and NIRS experiments. A single trial of this task consisted of two subtasks, a character task and a face task, and the subjects were instructed to respond to both. The time line of stimulus presentation and subjects' responses is shown in Fig. 1. First, a fixation cross appeared at the center of the display, and subjects were instructed to fixate on it throughout the trial. A screen with two faces, one on the left and one on the right (each 2.0 deg from the fixation cross), was then shown for 500 ms. At the same time, two strings of three letters were shown 2.4 deg above and below the fixation cross. The face images and character strings subtended 3.2 × 3.7 and 1.8 × 1.0 deg, respectively. Four facial images of middle-aged men wearing glasses, taken from a face-image database,59 were used (see upper-right inset in Fig. 1). A uniform gray blank screen was then shown for 500 ms. This pair of screens (faces with character strings, then the gray blank) was repeated four times. The subjects were seated ∼30 cm in front of the computer display with their heads stabilized on a chin rest and were instructed to keep fixating on the fixation cross. While the screen was flickering, the subjects were asked to report whether a target character (here, X) was contained in the top or bottom string by using the keypad of an external keyboard (pressing “7” for an X at the top, “0” for an X at the bottom, and no key press for the absence of an X). This subtask is referred to as the character task. The target character appeared in one-third of the runs, in either the top or the bottom string but never in both at once. Fig. 1 Schematic of the time course of a single trial of the change-detection task.
The upper-right inset shows the four faces used in the experiment.59 In a single trial, the subjects were instructed to respond to the character task four times and to the face task once at the end of the trial. At the end of each trial, a question mark appearing at the center of the screen for 1000 ms prompted subjects to report whether there had been a change in the face stimuli during the character task (pressing “0” for no change or “8” for change). This subtask is referred to as the face task. Because the face screen was presented four times, the subject had three chances to notice a change in the face stimuli. A single trial of the change-detection task took 5.0 s. Because the attentional resources of the visual system were divided between the two subtasks, occasional failures to detect face changes were expected. In-house Matlab code on a Windows-based laptop computer was used to present the face and character-string stimuli and to record subjects’ responses for later off-line analysis. The face stimuli were changed in two-thirds of all trials. In these trials, hereafter referred to as face-change trials, either the left or the right stimulus was changed with equal frequency [left-change (LC) and right-change (RC) trials, respectively]. In the remaining one-third of the trials, the face stimuli were not changed [no-change (NC) trials]. The NC trials were included to prevent subjects from reporting a change without attending to the face stimuli. The sequence of LC, RC, and NC trials was randomized for each session under the constraint of equal frequencies, so that the subjects could not memorize it from previous sessions. In some change trials, the subjects correctly reported a change in the face stimuli (successful trials), whereas in others they failed to report a change despite the physical change (unsuccessful trials).
Subjects were instructed to report a change in the face task only when they were confident that a change had occurred.

2.1.3. Behavioral experiment

A behavioral experiment was conducted before the NIRS experiment described below. Its purpose was twofold: to familiarize the subjects with this rather difficult change-detection task and to verify that the task was difficult enough to induce change blindness in some trials. One session consisted of 25 NC, 25 LC, and 25 RC trials (75 trials in total) and took ∼8 min. The intertrial interval was 1.0 s. Each of the seven subjects completed three to seven sessions (4.0 sessions on average). The subjects’ responses in the character task and the face task were recorded for later analysis. During this behavioral experiment, the experimenter closely watched the subjects' eye movements and instructed them to keep fixating on the fixation cross whenever they broke fixation.

2.1.4. Near-infrared spectroscopy experiment

The same change-detection task was used for the NIRS experiment, with a few modifications. First, the intertrial interval was drawn randomly from 20 to 25 s so that the hemodynamic responses returned to the baseline level before the beginning of each trial.60 Second, to minimize the physical burden and keep each session to a reasonable length, the number of trials was limited to 30 (10 NC, 10 LC, and 10 RC trials). The 30 trials took ∼14 min, and the whole experiment, including NIRS preparation, took ∼30 min. No subject reported any discomfort during or after the experimental session. An ETG-7000 (Hitachi Medical Co., Tokyo, Japan) was used for the NIRS measurements and was controlled by the same Windows-based computer used for visual-stimulus presentation and response recording.
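The session structure described above can be sketched in Python. This is a minimal illustration, not the authors' in-house Matlab code: the names `make_session` and `session_duration_s` are hypothetical, and the sketch assumes that an intertrial interval follows every trial, including the last one. It checks that one trial lasts 5.0 s and that the stated intertrial intervals give session lengths in line with the reported ∼8 min and ∼14 min.

```python
import random

# Timing from Sec. 2.1.2: four face/character screens (500 ms), each followed
# by a gray blank (500 ms), then a 1000-ms response prompt.
SCREEN_MS = 500
BLANK_MS = 500
N_REPEATS = 4
PROMPT_MS = 1000
TRIAL_S = (N_REPEATS * (SCREEN_MS + BLANK_MS) + PROMPT_MS) / 1000  # 5.0 s


def make_session(n_per_condition, iti_range, seed=None):
    """Return a shuffled list of (condition, iti_s) tuples.

    Conditions are balanced (equal numbers of NC, LC, and RC) and reshuffled
    for every session. iti_range is (low, high) in seconds; equal bounds give
    a constant intertrial interval.
    """
    rng = random.Random(seed)
    conditions = ["NC", "LC", "RC"] * n_per_condition
    rng.shuffle(conditions)
    low, high = iti_range
    return [(c, rng.uniform(low, high)) for c in conditions]


def session_duration_s(session):
    """Total session length: trial durations plus the ITI after each trial."""
    return sum(TRIAL_S + iti for _, iti in session)


# Behavioral session: 25 trials per condition, fixed 1.0-s ITI (Sec. 2.1.3).
behavioral = make_session(25, (1.0, 1.0), seed=0)
# NIRS session: 10 trials per condition, ITI drawn from 20-25 s (Sec. 2.1.4).
nirs = make_session(10, (20.0, 25.0), seed=0)

print(f"behavioral: {len(behavioral)} trials, {session_duration_s(behavioral) / 60:.1f} min")
print(f"NIRS: {len(nirs)} trials, {session_duration_s(nirs) / 60:.1f} min")
```

Under these assumptions a behavioral session lasts 7.5 min, and a NIRS session lasts between 12.5 and 15 min (13.75 min on average).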
Sixty probes (32 light-emitting and 28 light-detecting optical fibers) were held on the scalp by four probe-holder sheets, each with 15 probes. Each neighboring emitter–detector pair formed a recording channel, giving 88 channels in total (Fig. 2). Note that a channel refers to the cortical location approximately beneath the midpoint between its emitter and detector on the scalp.61 The probe-holder sheets were connected to each other with elastic bands. The nasion, inion, and left and right tragi were identified as landmarks for each subject, and the reference site Cz was determined from these four landmarks according to the international 10–20 system.62 The probe-holder sheets were arranged so that their center of mass was located at Cz. To map the corresponding cortical locations, the actual three-dimensional positions of all probes were measured with a three-dimensional digitizer (ISOTRAK II, Polhemus Corporation, Colchester, Vermont) for two subjects. The three-dimensional locations of the channels were derived as midpoints of the corresponding emitter–detector pairs. These locations were first transformed into MNI coordinates by statistical spatial registration63 and then assigned to cortical areas by automatic anatomical labeling.64

2.2. Data Analysis

The subjects’ behavioral data and NIRS signals were analyzed with Matlab (The MathWorks, Natick, Massachusetts). The NIRS signals were preprocessed with analysis software developed by one of the authors (T.K.), the Platform for Optical Topography Analysis Tools (POTATo), which runs on Matlab and provides several convenient functions for preprocessing NIRS data.65 The overall flow for preprocessing and classifying NIRS data is summarized in Fig. 3. Fig. 3 Schematic of the NIRS-data analysis procedure (the right blowup details the SVM classification analysis).
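The midpoint rule used for the channel locations can be written in a few lines of NumPy. The function name and the toy coordinates below are hypothetical, and the subsequent MNI registration and anatomical labeling steps are not reproduced.

```python
import numpy as np


def channel_positions(emitters, detectors, pairs):
    """Return channel locations as emitter-detector midpoints.

    emitters:  (n_emitters, 3) array of digitized 3-D emitter positions
    detectors: (n_detectors, 3) array of digitized 3-D detector positions
    pairs:     sequence of (emitter_index, detector_index), one per channel
    """
    emitters = np.asarray(emitters, dtype=float)
    detectors = np.asarray(detectors, dtype=float)
    idx = np.asarray(pairs, dtype=int)
    # Midpoint of each emitter-detector pair on the scalp
    return 0.5 * (emitters[idx[:, 0]] + detectors[idx[:, 1]])


# Toy geometry (hypothetical coordinates in mm): two emitters with one
# detector 30 mm from each, i.e., two channels.
emitters = [[0.0, 0.0, 0.0], [60.0, 0.0, 0.0]]
detectors = [[30.0, 0.0, 0.0]]
print(channel_positions(emitters, detectors, [(0, 0), (1, 0)]))
```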
2.2.1. Behavioral data analysis

Correct responses and reaction times in the behavioral experiment were tabulated for both the character task and the face task. In a single trial of the character task, the subjects had to report the presence or absence of the target character X, and its location, on four consecutive screens. A response was defined as successful when the subject correctly reported the location of an X that was present or pressed no key when no X was present; all other responses were regarded as unsuccessful. The correct-response ratio was computed by dividing the number of successful responses by the number of screens presented. The distribution of reaction times for successful responses in which subjects correctly reported the presence of an X was also computed. In the face task, a trial was defined as successful if the subject reported a change when the face stimuli changed or reported no change when they did not. The correct-response ratio was defined as the ratio of successful trials to all trials. The correct-response ratios and response times were computed for the NC, LC, and RC trials.

2.2.2. Preprocessing of near-infrared spectroscopy data

All analysis procedures were performed offline. Oxy-hemoglobin-concentration signals were used because they have been shown to have a high signal-to-noise ratio.66 The NIRS signals were preprocessed as follows. First, to remove noise of extracortical origin, such as pulsation, a temporal moving average over a 3.0-s window was applied.67, 68 Next, a third-order polynomial $a_0 + a_1 t + a_2 t^2 + a_3 t^3$ was fitted to the NIRS signal of an entire experimental session by the least-squares method, and this polynomial component was subtracted from the signal to remove global trends. This polynomial detrending was applied separately to each channel.
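A minimal NumPy sketch of the two preprocessing steps follows, assuming the oxy-Hb signals of one session are stored as a (channels × samples) array sampled at `fs` Hz. It is not POTATo's implementation: the function names are hypothetical, and the zero-padded handling of the session edges is an assumption.

```python
import numpy as np


def moving_average(x, fs, window_s=3.0):
    """Boxcar moving average over window_s seconds along the last axis.

    np.convolve zero-pads, so the first and last ~window_s/2 seconds are
    attenuated; only interior samples are full averages.
    """
    n = max(1, int(round(window_s * fs)))
    kernel = np.ones(n) / n
    return np.apply_along_axis(lambda s: np.convolve(s, kernel, mode="same"), -1, x)


def detrend_cubic(x, fs):
    """Subtract a least-squares cubic a0 + a1*t + a2*t**2 + a3*t**3 per channel.

    x: (n_channels, n_samples) array covering a whole session.
    """
    x = np.atleast_2d(np.asarray(x, dtype=float))
    t = np.arange(x.shape[-1]) / fs
    out = np.empty_like(x)
    for ch in range(x.shape[0]):
        # Polynomial.fit rescales t internally, keeping the cubic fit well conditioned
        trend = np.polynomial.Polynomial.fit(t, x[ch], deg=3)
        out[ch] = x[ch] - trend(t)
    return out


def preprocess(oxy_hb, fs):
    """3.0-s moving average followed by per-channel cubic detrending."""
    return detrend_cubic(moving_average(oxy_hb, fs), fs)
```

For example, `preprocess(oxy_hb, fs=10.0)` would apply both steps to a session recorded at an assumed 10-Hz sampling rate.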
This procedure removed slow global drift from the NIRS signals. Because the subsequent classification analysis used signals from combinations of channels and was computationally demanding, noisy channels were excluded from the 88 channels if their amplitudes exceeded 0.5. Channels whose power spectra appeared to be white were also excluded according to the following criterion. Low-frequency components (<3.0 Hz) contain biologically relevant signals, including hemodynamic changes, whereas high-frequency components (>3.0 Hz) can be regarded as biologically irrelevant noise. If the spectral power in the high-frequency band of a NIRS channel is comparable to that in the low-frequency band, biologically relevant signals in the low-frequency band cannot be distinguished from high-frequency noise. In other words, the ratio of low- to high-frequency power can be used as a measure of “whiteness.” A t-test was applied to determine whether the spectral power in the low-frequency (0.01–0.5 Hz) and high-frequency (4.0–4.5 Hz) bands of each channel was statistically distinguishable (an indication that the channel's spectrum differed from a white spectrum). Channels were retained for further analysis if the p-value of the t-test was smaller than a threshold of 1 × 10⁻¹⁰ and were excluded otherwise. This threshold was determined empirically by visual inspection of the NIRS data in this study; a more objective criterion is being developed.

2.2.3. Classification analysis by support-vector machine

A variety of machine-learning algorithms have been applied to the classification of neuroimaging data.
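The two exclusion criteria can be sketched with NumPy and SciPy. The sketch assumes a sampling rate of 10 Hz (so that the 4.0–4.5 Hz band lies below the Nyquist frequency), takes "amplitude" to mean the maximum absolute value of the signal passed in, and compares raw periodogram values with an equal-variance two-sample t-test. All three choices are assumptions, since the text does not specify them, and the function names are hypothetical.

```python
import numpy as np
from scipy import stats


def band_power(x, fs, band):
    """Periodogram values of a 1-D signal at frequencies within band = (lo, hi) Hz."""
    x = np.asarray(x, dtype=float)
    freqs = np.fft.rfftfreq(x.size, d=1.0 / fs)
    power = np.abs(np.fft.rfft(x - x.mean())) ** 2 / x.size
    mask = (freqs >= band[0]) & (freqs <= band[1])
    return power[mask]


def keep_channel(x, fs, amp_max=0.5, low=(0.01, 0.5), high=(4.0, 4.5), p_thresh=1e-10):
    """Return True if a channel passes both screening criteria.

    1. Amplitude: max |x| must not exceed amp_max (assumed definition).
    2. Whiteness: a two-sample t-test between the periodogram values in the
       low and high bands must give p < p_thresh.
    """
    x = np.asarray(x, dtype=float)
    if np.max(np.abs(x)) > amp_max:
        return False
    result = stats.ttest_ind(band_power(x, fs, low), band_power(x, fs, high))
    return bool(result.pvalue < p_thresh)
```

A channel dominated by white noise gives band powers that the t-test cannot separate, so it fails the second criterion; a channel with strong low-frequency content passes it.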
Examples include minimum-distance classification,69 Fisher's linear discriminant analysis (LDA),70 support-vector-machine (SVM) algorithms,71 and hidden Markov models.52 In this study, an SVM with a linear kernel72 was adopted for the binary classification problem. To exploit the temporal resolution of NIRS, classification probabilities for successful and unsuccessful trials were computed in time steps of 0.1 s (Fig. 4). First, a combination of channels was chosen, NIRS signals were extracted from all face-change trials, and each trial was labeled as a change-detected (successful) or change-undetected (unsuccessful) trial [green and red lines, respectively, in Fig. 4a]. The signals were then averaged within a temporal window [blue-shaded area in Fig. 4a] to give points in a multidimensional space [Fig. 4b]. The width of the temporal window was fixed at 3.0 s, and the onset of the window was varied from −5.0 to 13.0 s in steps of 0.1 s (0 s was defined as the onset of the task). The SVM classification algorithm was then applied to the points in the multidimensional space [Fig. 4b]. Twofold cross validation was used to evaluate how well the data points could be classified [Figs. 4c and 4d]. Approximately half of the data points were randomly selected as training data [green and red points in Fig. 4c], and the rest were held out as test data [gray points in Fig. 4c]. An SVM decision boundary was computed using only the training data [blue dashed line in Fig. 4c]. The performance of the decision boundary was evaluated by applying it to the test data [green and red points in Fig. 4d] and computing the proportion of test data that were correctly classified (defined as the classification probability). This cross-validation procedure [Figs. 4c and 4d] was repeated 30 times with randomly chosen training and test data to compute the mean classification probability and its confidence intervals.
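The sliding-window procedure can be sketched with scikit-learn's `SVC(kernel="linear")`. This is a minimal illustration, not the authors' Matlab implementation. It assumes that one subject's face-change trials are stored as epochs from −5 s to 16 s (long enough for the last 3-s window starting at 13 s), sampled at an assumed 10 Hz, with labels 1 for change-detected and 0 for change-undetected trials. The percentile interval is only one possible way to express the confidence interval mentioned in the text, and the function names are hypothetical.

```python
import numpy as np
from sklearn.svm import SVC


def window_features(trials, fs, onset_s, width_s=3.0, t0_s=-5.0):
    """Average each channel over [onset_s, onset_s + width_s).

    trials: (n_trials, n_channels, n_samples) epochs whose first sample is at
    t0_s (time 0 = task onset). Returns an (n_trials, n_channels) matrix.
    """
    start = int(round((onset_s - t0_s) * fs))
    stop = start + int(round(width_s * fs))
    return trials[:, :, start:stop].mean(axis=2)


def classification_probability(X, y, n_repeats=30, seed=0):
    """Repeated random half/half splits with a linear SVM.

    Returns the mean test accuracy and the 2.5th/97.5th percentiles of the
    accuracies across repetitions.
    """
    rng = np.random.default_rng(seed)
    n = len(y)
    scores = []
    for _ in range(n_repeats):
        perm = rng.permutation(n)
        train, test = perm[: n // 2], perm[n // 2:]
        if np.unique(y[train]).size < 2:
            continue  # an SVM cannot be trained on a single class
        clf = SVC(kernel="linear").fit(X[train], y[train])
        scores.append(clf.score(X[test], y[test]))
    scores = np.asarray(scores)
    return scores.mean(), np.percentile(scores, [2.5, 97.5])


def probability_time_course(trials, y, fs, pair, onsets):
    """Classification probability for one channel pair at each window onset."""
    sub = trials[:, list(pair), :]
    return np.array(
        [classification_probability(window_features(sub, fs, o), y)[0] for o in onsets]
    )


# Window onsets from -5.0 to 13.0 s in 0.1-s steps, as in the text.
ONSETS = np.round(np.arange(-5.0, 13.0 + 1e-9, 0.1), 1)
```

Calling `probability_time_course(trials, y, 10.0, (25, 33), ONSETS)` would then yield one probability per onset for a given channel pair, in the spirit of Fig. 8.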
The SVM classification was performed on a subject-by-subject basis: a decision boundary was determined from a subject's data, and its performance was evaluated with the same subject's data. Fig. 4 Schematic of the classification analysis procedure. (a) A pair of NIRS signals recorded in change trials. Green and red lines depict signals in change-detected and change-undetected trials, respectively. The blue shaded area is an example of a temporal window in which the NIRS signals were averaged. (b) Two-dimensional map of temporally averaged NIRS signals obtained from the data in (a). (c) SVM classification applied to training data (decision boundary indicated by the blue dashed line) and (d) cross validation of the decision boundary using test data. These plots were created from actual experimental data. (Color online only.)

2.2.4. Number of channels used for classification analysis

Before applying the classification procedure described in Sec. 2.2.3, it was necessary to determine how many dimensions, or channels, should be used. In general, a classification problem has an optimal feature dimension. Too few features may not provide sufficient information for classifying change-detected and change-undetected trials, whereas too many features incur the risk of overfitting the training data; both result in poor classification of the test data. Moreover, the number of possible feature combinations grows rapidly with the feature dimension, so it is desirable to keep the dimension as small as possible to reduce computation time. For the classification problem stated in Sec. 2.2.3, the classification performances obtained with single channels, pairs of channels, and triplets of channels were compared on the test data of Subject 1.
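How quickly the exhaustive search grows can be checked with Python's `math.comb`. The sketch below counts the k-channel combinations for the 60 usable channels of Subject 1 and extrapolates run time linearly from the reported 15 min for single channels. The equal-cost-per-combination assumption is an illustration only, and the function name is hypothetical.

```python
from math import comb

N_CHANNELS = 60    # usable channels of Subject 1 (Sec. 3.2.1)
SINGLE_MIN = 15.0  # reported run time (min) for the 60 single channels


def search_cost(n_channels, k, minutes_for_singles=SINGLE_MIN):
    """Number of k-channel combinations and run time extrapolated linearly
    from the single-channel case (assumes equal cost per combination)."""
    n_comb = comb(n_channels, k)
    minutes = minutes_for_singles * n_comb / n_channels
    return n_comb, minutes


for k in (1, 2, 3):
    n_comb, minutes = search_cost(N_CHANNELS, k)
    print(f"k={k}: {n_comb} combinations, ~{minutes / 60:.1f} h")
```

Under this assumption the pair search takes about 7.4 h and the triplet search about 143 h (roughly 6 days), the same order of magnitude as the run times reported in Sec. 3.2.1.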
After the number of channels was determined, the same number of channels was used for the data of the other subjects.

2.2.5. Clustering analysis of temporal classification probabilities

To find typical temporal profiles of classification probabilities, an unsupervised clustering algorithm was applied to the classification probabilities computed with the SVM method (Secs. 2.2.3 and 2.2.4). The k-means clustering algorithm was adopted, with the squared Euclidean distance as the similarity measure.73 The number of clusters was chosen by visual inspection.

3. Results

3.1. Behavioral Results

Most subjects reported that the task was initially difficult because of its tight response-time requirement and the unfamiliar method of reporting responses, but that these issues disappeared after a few training sessions. The seven subjects completed 28 sessions of the behavioral experiment in total (i.e., 75 × 28 = 2100 trials; 4.0 sessions per subject on average). For the character task, the correct-response ratio across all seven subjects was 95.4%, indicating that they performed the task almost perfectly. The mean reaction time when subjects reported an X was 577 ms (SD 127 ms). For the face task, the subjects responded within the response-time limit (1.0 s) in 97.05% of all trials, and only these trials were used in the following behavioral analysis. Reaction times had a mean of 436 ms and a standard deviation of 165 ms. Figure 5a plots the distribution of reaction times for all trials of the seven subjects. Reaction times for the NC, LC, and RC trials were 445 ms (SD 170 ms), 431 ms (SD 167 ms), and 432 ms (SD 160 ms), respectively, and did not differ significantly among the three conditions [one-way analysis of variance (ANOVA); F(2,2035) = 1.32, p = 0.266]. Fig. 5 Summary of behavioral results obtained from the face task.
(a) Distribution of reaction times from 2100 trials; the 1000-ms response period was divided uniformly into 30 bins. (b) Box plot of correct responses for the LC and RC trials. The correct-response ratio for the face task was 54.5%, indicating that the task was difficult enough to induce change blindness in a substantial proportion of trials. Interestingly, there was an asymmetry between the success rates in the LC and RC trials: the subjects detected changes in the left visual hemifield more often [15.5 (SD 4.57) trials] than in the right [13.3 (SD 5.33) trials] [Fig. 5b]. The difference was statistically significant [unpaired two-sided t-test; t(54) = 2.82, p = 0.0066]. This left-right asymmetry is consistent with a clinical study that showed right-hemisphere (corresponding to the left visual hemifield) superiority in a face match-to-sample task in split-brain patients74 and with imaging studies that showed right-hemisphere dominance of face-processing activity.75, 76 The false-positive rate (reporting a change when there was actually no change) was 6.4%.

3.2. Analysis of Near-Infrared Spectroscopy Signals

The correct-response ratios of the five subjects are summarized in Table 1. Four of them had correct-response ratios in the range of 43.8–66.7%, and their NIRS signals were classified according to the procedure described in Sec. 2. Subject 5, however, had a high correct-response ratio (83.3%), with far fewer undetected than detected trials, and was therefore excluded from further analysis. For the other four subjects, 70 channels on average (out of 88) were used (Table 1) after excluding channels that were considered not to reflect cortical activity under the criteria described in Sec. 2.2.2. Table 1 Summary of behavioral and NIRS data used for the classification analysis.

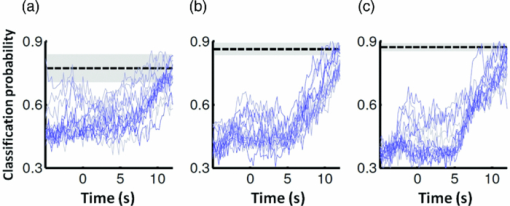

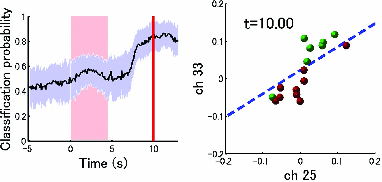

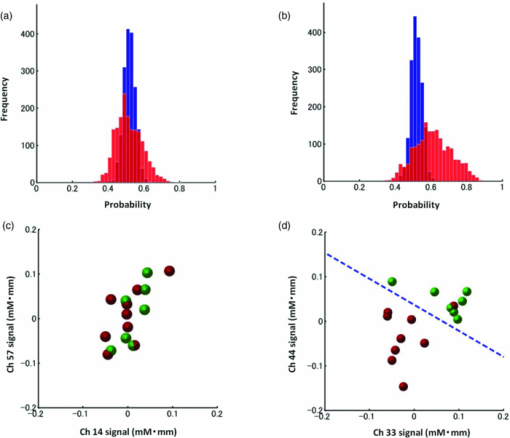

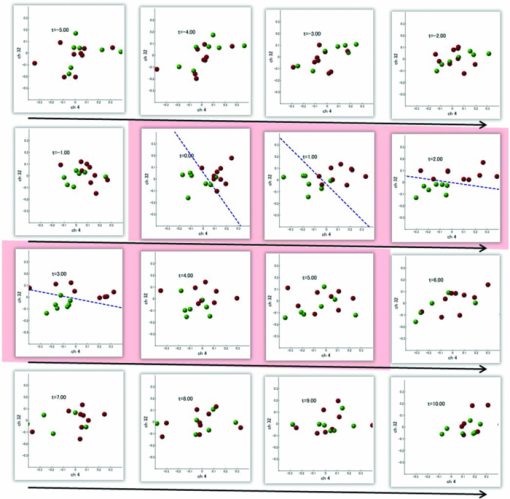

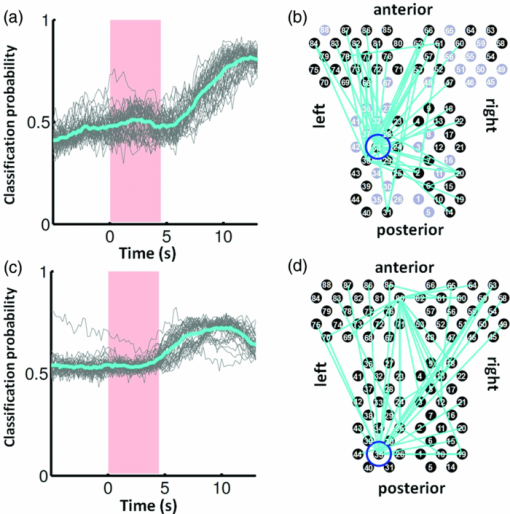

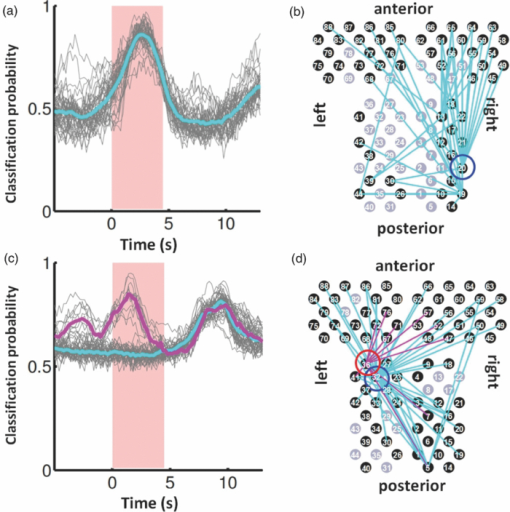

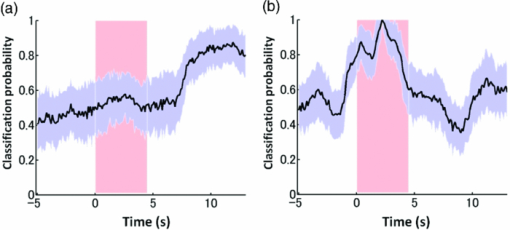

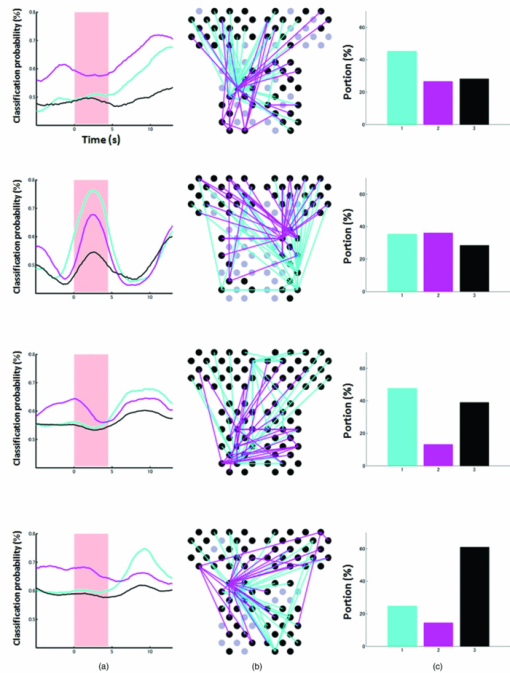

3.2.1. Optimal number of channels for the classification algorithm

To determine the optimal number of channels, the classification probabilities were evaluated using single channels (60), channel pairs (C(60, 2) = 1770), and channel triplets (C(60, 3) = 34,220) with the response data of Subject 1. The computations for these three cases took 15 min, 7.2 h, and five days, respectively, on a Windows-based computer (Intel Core2Duo, 3.0 GHz). Figure 6 summarizes, for each of the three cases, 50 classification probabilities whose time courses varied markedly. The averages of the maximum classification probabilities of these 50 combinations were 77% (SD 6.7%), 86% (SD 3.1%), and 87% (SD 2.1%) for single channels, pairs, and triplets, respectively. The classification probabilities were considerably higher with channel pairs than with single channels. In contrast, using channel triplets instead of pairs changed the classification probabilities little, indicating that channel pairs were sufficient for distinguishing successful from unsuccessful trials. Moreover, an exhaustive search over channel triplets was prohibitively time consuming for analyzing the data of multiple subjects. Accordingly, channel pairs were used for the analyses of the other subjects.

3.2.2. Distribution of classification probabilities

Moment-by-moment classification probabilities were computed using all possible channel pairs for the four subjects. The temporal window was first fixed, and the distributions of classification probabilities over all channel pairs were examined. The red histograms in Fig. 7 show the distributions of classification probabilities computed using all channel pairs for Subject 1 during the task period (0–3 s) [Fig. 7a] and 5 s after task completion (10–13 s) [Fig. 7b]. The distribution in Fig. 7a had a mean of 51.6% and a standard deviation of 7.3%, whereas the distribution in Fig. 7b had a mean of 61.3% and a standard deviation of 10.1%.
Figures 7c and 7d show NIRS signals that gave 50% and the maximum classification probabilities, respectively, in the distribution of Fig. 7b. Fig. 7 Classification probabilities (red histograms) obtained using the NIRS signals from all 1770 channel pairs (a) during the task (0–3-s interval) and (b) after task completion (10–13-s interval). The blue histograms show classification probabilities computed from surrogate data (i.e., trials randomly relabeled as successful and unsuccessful). Examples of NIRS signals from the posttrial distribution that gave (c) 50% probability and (d) the highest probability. The blue dashed line in (d) indicates the decision boundary computed by the SVM algorithm. (Color online only.) Note that the distributions computed from the randomly relabeled, surrogate data [blue histograms in Figs. 7a and 7b] were sharply peaked and did not change between the task and posttask periods. The differences between the histograms computed using the subjects’ responses and those computed using the surrogate data were statistically significant [Mann–Whitney U-test; p = 8.9 × 10⁻⁴ for Fig. 7a and p = 6.2 × 10⁻¹²⁸ for Fig. 7b]. This analysis confirmed that the high posttrial classification probabilities could not be attributed to chance.

3.2.3. Temporal profiles of classification probabilities

Two general types of temporal profiles were found, which we call postdictive and predictive. The postdictive profile exhibited a plateau before and during the task period, a gradual increase upon task completion, and a peak ∼5 s after task completion. Figure 8a illustrates a representative probability profile computed from channel pair (25, 33) of Subject 1 [for corresponding images of the NIRS signals, see Appendix (Fig. 11 and 1)]. By classifying the signals of this channel pair, it was possible to determine whether this subject had noticed a face change in the trial that had just ended.
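The surrogate comparison in Sec. 3.2.2 can be sketched with SciPy's `mannwhitneyu`. The sketch shows only the relabeling and the test; it assumes that the classification probabilities for the real and relabeled labels have already been computed (e.g., one value per channel pair for a fixed temporal window), and the function names are hypothetical.

```python
import numpy as np
from scipy.stats import mannwhitneyu


def relabel(y, rng):
    """Surrogate labels: a random permutation keeps the numbers of successful
    and unsuccessful trials unchanged but breaks their link to the signals."""
    return rng.permutation(y)


def compare_with_surrogate(real_probs, surrogate_probs):
    """Two-sided Mann-Whitney U test between two sets of classification
    probabilities; returns (U statistic, p-value)."""
    result = mannwhitneyu(real_probs, surrogate_probs, alternative="two-sided")
    return result.statistic, result.pvalue
```

In practice, `real_probs` would hold the classification probability of every channel pair computed with the subjects' responses, and `surrogate_probs` the same quantity computed after `relabel`.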
We interpreted this temporal profile of classification probability as reflecting the success or failure of the completed trial. Fig. 11 Snapshots of NIRS signals from t = −5.0 to t = 10.0 s that were used to compute the probability in Fig. 8a. The horizontal and vertical axes in each image denote temporally averaged NIRS signals from channels 25 and 33, respectively. The pink shading indicates the task period. Decision boundaries (blue dashed lines) are shown where the classification probability is >75%. (Color online only.) The predictive type of temporal profile was found for Subject 2 [Fig. 8b]. The classification probability began to increase 3.0 s before task initiation, reached its maximum around task initiation, and then decreased to approximately the correct-response ratio [for corresponding snapshots of the NIRS signals, see Appendix (Fig. 12 and 1)]. By classifying the signals of this channel pair, it was possible to predict, even before the trial was completed, whether the subject would notice a face change. The signals from this channel pair were therefore interpreted as predictive of the success or failure of a trial. Fig. 12 Images of NIRS signals used for Fig. 8b. Channels 4 and 32 were used for the horizontal and vertical axes. The pink shading indicates the task period. (Color online only.)

3.2.4. Analysis of four subjects

We performed the same classification procedure exhaustively on all possible channel pairs (1770, 1596, 3828, and 2580 pairs for Subjects 1–4, respectively; see Table 1). Because the temporal dynamics of classification probabilities were the focus of interest, the 50 channel pairs with the largest amplitudes of classification probability (i.e., maximum minus minimum probability in each temporal profile) were chosen. Figure 9 summarizes the results for Subjects 1 and 3. The classification probabilities of Subject 1 [Fig. 9a] had postdictive temporal profiles similar to the one shown in Fig. 8a.
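The selection of the 50 channel pairs by temporal amplitude, and the subsequent search for the most informative channels (the "hubs," i.e., the channels that occur most often among those pairs), can be sketched as follows. The array layout and function names are assumptions for illustration, and ties in amplitude or count are not treated specially.

```python
from collections import Counter

import numpy as np


def top_pairs_by_amplitude(prob_curves, pairs, n_top=50):
    """Select the channel pairs whose classification-probability time course
    has the largest amplitude (maximum minus minimum).

    prob_curves: (n_pairs, n_onsets) array; pairs: list of (ch_a, ch_b).
    """
    curves = np.asarray(prob_curves, dtype=float)
    amplitude = curves.max(axis=1) - curves.min(axis=1)
    order = np.argsort(amplitude)[::-1][:n_top]  # largest amplitude first
    return [pairs[i] for i in order]


def hub_channels(selected_pairs):
    """Count how often each channel occurs among the selected pairs,
    most frequent (the hub) first."""
    counts = Counter(ch for pair in selected_pairs for ch in pair)
    return counts.most_common()
```

For Subject 1, for example, the first entry returned by `hub_channels` would correspond to channel 33, which the text reports in 32 of the 50 pairs.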
The corresponding channel pairs from which the probabilities in Fig. 9a were computed are shown in Fig. 9b. We looked for the most informative channels, or hubs, defined as the NIRS channels that appeared most frequently in the 50 channel pairs. Channel 33 (blue circle), located in the left angular gyrus, appeared in 32 of the 50 channel pairs and contributed most to the postdictive classification. The results for Subject 3, summarized in Figs. 9c and 9d, were similar to those of Subject 1. The most informative channel was channel 35 (blue circle), located in the left occipital area, which appeared in 24 channel pairs. For both subjects, pairs that contained channels in the left temporoparietal or occipital areas gave higher classification probabilities, and the most informative channels tended to be paired with channels in the frontal lobe [Figs. 9b and 9d]. Fig. 9 (a) Temporal profiles of the classification probability computed using the NIRS signals of Subject 1. The pink-shaded area depicts the task period. The light blue line denotes the average of all probabilities. (b) Channel pairs used to compute the probabilities shown in (a). The pairs are connected by light blue lines. Black filled circles indicate the locations of the channels used for the analysis, and gray filled circles indicate the channels excluded according to the criteria stated in Sec. 2. The numbers on the channels correspond to the channel locations shown in Fig. 2. (c) Temporal classification probabilities of Subject 3 and (d) the locations of the channel pairs. (Color online only.) Figure 10a shows predictive temporal profiles of classification probabilities computed from 50 channel pairs of Subject 2.
Most temporal profiles reached their maximum values before task completion, thereby predicting whether the subject would report the presence or absence of a face change. Pairs that contained channels in the right temporoparietal areas contributed to these high classification probabilities [Fig. 10b]. Channel 20 (located at the right occipito-temporal junction), appearing in 34 pairs, was the most informative for this predictive classification. In this analysis, Subjects 1–3 had only predictive or only postdictive temporal profiles. Interestingly, Subject 4 had both predictive and postdictive components [Fig. 10c]. Channel pairs that included posterior parietal channels contributed to both predictive and postdictive classification [light blue and purple curves for postdictive and predictive classification, respectively, Fig. 10d]. Channel 32 (located in the posterior parietal area) appeared in 34 pairs and was the most informative for postdictive classification (blue circle), and channel 36 (located in the postcentral area) appeared in 16 pairs and was the most informative for predictive classification (red circle). The above analysis thus found either predictive or postdictive components among the classification probabilities with high temporal amplitudes. When clustering analysis was performed on the classification probabilities computed from all possible channel pairs, three subjects (1, 3, and 4) were found to have both predictive and postdictive components [see Appendix (Fig. 13)].

4. Discussion

This study demonstrated that moment-by-moment classification probabilities could be computed from NIRS signals measured in a change-detection task. The NIRS signals simultaneously provided the temporal dynamics and the most informative cortical locations for classifying whether subjects noticed a change in a visual scene.

4.1. Postdictive and Predictive Classification Probabilities

The classification probabilities were of two distinct types: postdictive and predictive. The postdictive type [Figs. 8a and 9] remained at ∼50% before and during the task, began to increase at task completion, and reached its maximum approximately 5 s after task completion. These postdictive classification probabilities arose mainly from combinations of the most informative channels in the parietal, temporal, or occipital lobe with another channel in the frontal lobe. The predictive classification probability [Figs. 8b and 10] predicted performance on the upcoming trial from signals immediately before and during task onset. Combinations of the frontal and temporoparietal cortices contributed most to this type of classification probability.

Fig. 10. (a) Temporal classification probabilities of Subject 2 and (b) the locations of the channel pairs. (c) Temporal classification probabilities of Subject 4 and (d) the locations of the channel pairs. In (c), two kinds of temporal profiles, whose corresponding channel pairs are shown in (d), are drawn as light blue and purple lines. These two temporal profiles are the centers of clusters found with a k-means (k = 2) algorithm. (Color online only.)

Although we did not initially expect to find a predictive component in the change-detection experiment, such a component is not surprising in retrospect, because recent fMRI studies reported that BOLD signals immediately before task initiation predicted task performance, such as the magnitude of force production32 and sensitivity to somatosensory stimuli.77 Another fMRI study reported brain activation that began to evolve gradually as early as 30 s before subjects made errors in a flanker task.78 Moreover, hemodynamic signals recorded simultaneously with electrophysiological activity revealed an anticipatory component predicting upcoming sensory stimuli.79 The NIRS signals measured from the temporoparietal and frontal cortices contributed to the predictive type of classification probability, in general agreement with those fMRI studies.
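Extracting two representative temporal profiles with k-means (k = 2), as was done for Fig. 10c, can be sketched as below. This is an illustrative sketch only: it assumes Lloyd's algorithm with squared Euclidean distance and a deterministic farthest-first initialization, neither of which is specified in the text, and the helper names and synthetic profiles are hypothetical. The cluster centers are then reordered by temporal amplitude, mirroring the descending-amplitude ordering used for Fig. 13.

```python
import numpy as np


def kmeans_profiles(profiles, k=2, n_iter=100):
    """Lloyd's k-means on temporal profiles (one profile per row). Returns (centers, labels).

    The farthest-first initialization is an assumption of this sketch.
    """
    X = np.asarray(profiles, dtype=float)
    # Farthest-first initialization: start from the first profile, then repeatedly
    # add the profile with the largest squared distance to its nearest chosen center.
    centers = [X[0]]
    for _ in range(1, k):
        d2 = np.min([((X - c) ** 2).sum(axis=1) for c in centers], axis=0)
        centers.append(X[np.argmax(d2)])
    centers = np.array(centers)

    labels = np.full(len(X), -1)
    for _ in range(n_iter):
        # Assignment step: nearest center in squared Euclidean distance.
        d2 = ((X[:, None, :] - centers[None, :, :]) ** 2).sum(axis=2)
        new_labels = d2.argmin(axis=1)
        if np.array_equal(new_labels, labels):
            break  # converged: assignments no longer change
        labels = new_labels
        # Update step: each center becomes the mean of its members;
        # an empty cluster keeps its previous center.
        for j in range(k):
            members = X[labels == j]
            if len(members):
                centers[j] = members.mean(axis=0)
    return centers, labels


def order_by_amplitude(centers, labels):
    """Relabel clusters so that cluster 0 has the largest temporal amplitude (max - min)."""
    amplitude = centers.max(axis=1) - centers.min(axis=1)
    order = np.argsort(amplitude)[::-1]        # old cluster ids, largest amplitude first
    new_id = np.empty_like(order)
    new_id[order] = np.arange(len(order))      # map old id -> new id
    return centers[order], new_id[labels]


# Hypothetical synthetic profiles sampled every 0.5 s from -5 s to 10 s.
t = np.linspace(-5.0, 10.0, 31)
rng = np.random.default_rng(1)
pre = 0.5 + 0.3 * np.exp(-((t - 0.0) / 1.5) ** 2)   # peaks near task onset
post = 0.5 + 0.2 * np.exp(-((t - 6.0) / 1.5) ** 2)  # peaks after task completion
X = np.vstack([pre + 0.01 * rng.standard_normal((10, t.size)),
               post + 0.01 * rng.standard_normal((10, t.size))])

centers, labels = order_by_amplitude(*kmeans_profiles(X, k=2))
print(labels)
print(t[centers.argmax(axis=1)])  # peak time of each cluster center
```

Each cluster center is simply the mean profile of its members, so it can be read directly as a "representative" temporal profile; in practice, k-means results depend on initialization, and library implementations typically run several restarts.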
There are, in general, two types of visual attention:80, 81 bottom-up, sensory-driven attention, which enhances a stimulus whose features differ from those of surrounding stimuli, and top-down, behavior-driven attention, which enhances a stimulus of behavioral relevance. It is tempting to speculate that our predictive and postdictive types correspond to these two types of visual attention. However, equally plausible speculations exist for each component. If the predictive component resulted from the allocation of attentional resources to the face task, it could be related to top-down attention; if it instead reflected enhanced processing of the sensory stimulus, it could be attributed to bottom-up attention. Likewise, the postdictive component could be due to either top-down or bottom-up attention. It might reflect a process in which top-down attention was covertly drawn to face changes that were found by chance, or it might arise from bottom-up attention captured by a novel event such as a face change. Designing an experiment that dissociates top-down and bottom-up components in relation to our predictive and postdictive components will be of neuroscientific interest.

4.2. Statistical Validation of Decoding Results

A recent NIRS study reported that subjective preference for beverages could be decoded on a trial-by-trial basis with a probability of ∼80% by using analysis and classification methods similar to ours.54 A subsequent commentary pointed out that high classification probabilities can be obtained even from random data containing no information about actual preference, and it questioned whether the high classification probability in that study might have been caused by statistical coincidence in choosing “the best feature” out of a large number of features.82 We emphasize that this methodological criticism does not apply to our study.
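The commentary's concern, and why a surrogate test must repeat the feature-selection step, can be illustrated with a small simulation. This is a hypothetical sketch, not the classifier or validation scheme of this study or of Ref. 54: a leave-one-out nearest-class-mean rule stands in for the actual classifier, and the trial counts, feature counts, and function names are assumptions chosen for illustration. On pure noise, the best of many features typically scores well above 50%; only a null distribution that applies the same best-feature selection to randomly relabeled trials puts that score in perspective.

```python
import numpy as np


def loo_nearest_mean_accuracy(X, y):
    """Leave-one-out accuracy of a nearest-class-mean rule, evaluated separately per feature.

    X: (n_trials, n_features); y: (n_trials,) binary labels with >= 2 trials per class.
    Returns an array of shape (n_features,).
    """
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=int)
    is1 = (y == 1)[:, None]
    n1 = int(y.sum())
    n0 = len(y) - n1
    s1, s0 = X[y == 1].sum(axis=0), X[y == 0].sum(axis=0)
    # Class means with each held-out trial removed from its own class.
    m1 = np.where(is1, (s1 - X) / (n1 - 1), s1 / n1)
    m0 = np.where(is1, s0 / n0, (s0 - X) / (n0 - 1))
    pred1 = np.abs(X - m1) < np.abs(X - m0)
    return (pred1 == is1).mean(axis=0)


def best_feature_accuracy(X, y):
    """Accuracy of the single best feature: the selection step the commentary warns about."""
    return loo_nearest_mean_accuracy(X, y).max()


def selection_aware_p_value(X, y, n_perm=200, seed=0):
    """Permutation test that repeats the whole pipeline, including best-feature
    selection, on randomly relabeled trials (surrogate data)."""
    rng = np.random.default_rng(seed)
    observed = best_feature_accuracy(X, y)
    null = np.array([best_feature_accuracy(X, rng.permutation(y)) for _ in range(n_perm)])
    p = (1 + np.sum(null >= observed)) / (1 + n_perm)
    return observed, null, p


rng = np.random.default_rng(42)
y = np.repeat([0, 1], 20)                  # 40 hypothetical trials, balanced labels
X_noise = rng.standard_normal((40, 500))   # 500 features carrying no label information

best_noise, null_noise, p_noise = selection_aware_p_value(X_noise, y)
print(f"best of 500 random features: {best_noise:.2f}, p = {p_noise:.3f}")

X_signal = X_noise.copy()
X_signal[y == 1, 0] += 4.0                 # make one feature genuinely informative
best_signal, null_signal, p_signal = selection_aware_p_value(X_signal, y)
print(f"with one informative feature: {best_signal:.2f}, p = {p_signal:.3f}")
```

The p value here follows the common (1 + count)/(1 + n_perm) convention, which never reports p = 0 for a finite number of permutations.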
We demonstrated that the distribution of classification probabilities shifted from the task period to the posttask period (Fig. 7). If no information distinguishing change-detected from change-undetected trials were encoded, such a shift in the distribution would not occur. Using surrogate data (i.e., randomly relabeled successful and unsuccessful trials), we computed distributions of classification probabilities and found that they differed significantly from the distributions obtained with the subjects' actual responses. We thus concluded that the structured temporal profiles of classification probabilities in Figs. 8, 9, and 10 reflected the subjects' performance.

Fig. 8. Classification probabilities (a) that were postdictive for Subject 1 and (b) that were predictive for Subject 2. The pink shaded areas indicate the task period. Black lines and surrounding blue shaded areas illustrate average values and 95% confidence intervals of moment-by-moment classification probabilities, respectively. (Color online only.)

4.3. Possible Applications toward Brain-Machine Interfaces

According to a recent study,83 NIRS can be an appropriate substitute for fMRI across multiple cognitive tasks, although care should be taken regarding its lower spatial resolution and lower signal-to-noise ratio. NIRS has a few important advantages for BMIs, such as fewer physical constraints and relatively low cost.37 This opens up the possibility of monitoring an operator's latent cognitive states from NIRS measurements in a real-world setting. Our classification of success and failure in a change-detection task suggests, for example, an interface based on NIRS signals that monitors an operator's performance and attentive state. In addition, our analysis of how many channels were necessary for decoding visual awareness of changes revealed that a small number of channels was sufficient if their locations were deliberately chosen.
It is therefore possible to build a compact and portable device84, 85 for NIRS-based decoding.

4.4. Limitations of Current Study

Despite the success in classifying successful and unsuccessful trials, the current study has several limitations. First, we could not monitor the subjects' eye movements because no gaze-tracking instrument was available. It might be possible to improve classification performance by removing trials in which overt eye movements occurred. Second, although most subjects exhibited both predictive and postdictive types, their proportions differed considerably; some had strong postdictive and weak predictive components, whereas others showed weak postdictive and strong predictive components. It is currently unclear what caused this difference. Third, although we showed that two channels sufficed for successful classification, the locations of the most informative channels varied from subject to subject. It will be desirable to optimize the number of required channels on a subject-by-subject basis. Also, we used only instantaneous oxy-hemoglobin signals; however, a recent study suggested that decoding performance can be improved by considering the history or gradients of NIRS signals.86 A thorough search for an optimal set of variables should be performed to improve the performance and robustness of decoding. Lastly, the current study employed a small number of subjects, two of whom were the authors themselves and were aware of the aim of this study. We tried to minimize the confounding risks of using the authors as subjects by randomizing trial sequences session by session so that they could not anticipate which trial type would come next. However, the authors knew the proportions of the three trial types; thus, we cannot exclude the possibility that they implicitly made statistical guesses about trial types. To overcome these limitations, it would be worth extending the current study by recruiting a larger number of subjects.
By inspecting a larger data set, we expect to identify which variables play a dominant role in boosting decoding performance and what determines the relative strengths of the predictive and postdictive components. Another interesting direction is to decode one subject's state by using a classifier trained on other subjects' NIRS data. This would eliminate a training session, which is of practical convenience for NIRS-based brain-machine interfaces. This approach has rarely been examined, with a few exceptions.87 These lines of study will be pursued in future work.

Appendices

Appendix A: Temporal Snapshots of Near-Infrared Spectroscopy Signals

Figures 11 and 12 show typical examples of NIRS signals that were used to compute the classification probabilities in Fig. 8. These snapshots were computed from –5.0 to +8.0 s in steps of 1.0 s. The green and red circles in each snapshot denote NIRS signals from successful (change detected) and unsuccessful (change undetected) trials, respectively (see 1).

Appendix B: Coexisting Predictive and Postdictive Components

In Figs. 9 and 10, only the 50 temporal profiles of classification probabilities with the largest amplitudes were analyzed, and in the cases of Subjects 1–3, either a predictive or a postdictive component was found. Both components were found only in the case of Subject 4. Here we show that, when all possible profiles were analyzed, Subjects 1, 3, and 4 exhibited both predictive and postdictive components. The k-means clustering algorithm was applied to all possible temporal profiles of classification probabilities, with the number of clusters set to 3 (k = 3). In Fig. 13a, the three representative profiles of the clusters are depicted in three colors (light blue, magenta, and black in descending order of temporal amplitude). In the cases of Subjects 1 and 3, the largest-amplitude components (light blue) had peak values after task completion.
The second-largest-amplitude components (magenta) had two peaks, before and after task completion, indicating that they contained both predictive and postdictive components. Interestingly, the cortical locations for the light blue and magenta components overlapped considerably. The components with the smallest amplitude (black) are almost flat, indicating that they are irrelevant to subjective visual experience. The same trend was observed in the case of Subject 4, who had already shown predictive and postdictive components in Fig. 10. The three temporal profiles computed from Subject 2's data peak only in the task period, indicating that only predictive components were found for Subject 2. Figure 13c depicts the proportions of the three components; for Subjects 1, 3, and 4, the postdictive components are the most dominant.

Fig. 13. Clustering analysis applied to all possible channel pairs. (a) Three representative temporal profiles of the clusters (light blue, magenta, and black in descending order of temporal amplitude). (b) Locations of channel pairs for the light blue and magenta profiles of (a). To avoid clutter, only 30 pairs (those with the largest temporal amplitudes) are shown. (c) Distribution of the three temporal profiles. (Color online only.)

Acknowledgments

We thank Kyoko Yamazaki for assisting with the experiments, Hiroki Sato for his advice on NIRS experiments and analyses, Hiroshi Imamizu for commenting on a previous version of the manuscript, and Hideaki Koizumi for his continuous encouragement.

References

D. J. Simons and D. T. Levin, “Change blindness,” Trends Cogn. Sci., 1 261–267 (1997). https://doi.org/10.1016/S1364-6613(97)01080-2

D. J. Simons, S. L. Franconeri, and R. L. Reimer, “Change blindness in the absence of a visual disruption,” Perception, 29 1143–1154 (2000). https://doi.org/10.1068/p3104

J. K. O’Regan, R. A. Rensink, and J. J. Clark, “Change-blindness as a result of ‘mudsplashes’,” Nature, 398 34 (1999). https://doi.org/10.1038/17953

D. J. Simons and D. T. Levin, “Failure to detect changes to people during a real-world interaction,” Psychonomic Bull. Rev., 5 644–649 (1998). https://doi.org/10.3758/BF03208840

D. J. Simons and C. F. Chabris, “Gorillas in our midst: sustained inattentional blindness for dynamic events,” Perception, 28 1059–1074 (1999). https://doi.org/10.1068/p2952

R. A. Rensink, “Change detection,” Annu. Rev. Psychol., 53 245–277 (2002). https://doi.org/10.1146/annurev.psych.53.100901.135125

A. Hollingworth, “Visual memory for natural scenes: Evidence from change detection and visual search,” Vis. Cogn., 14 781–807 (2006). https://doi.org/10.1080/13506280500193818

D. A. Varakin, D. T. Levin, and R. Fidler, “Unseen and unaware: implications of recent research on failures of visual awareness for human-computer interface design,” Hum.-Comput. Interact., 19 389–422 (2004). https://doi.org/10.1207/s15327051hci1904_9

P. J. Durlach, “Change blindness and its implications for complex monitoring and control systems design and operator training,” Hum.-Comput. Interact., 19 423–451 (2004). https://doi.org/10.1207/s15327051hci1904_10

J. K. Caird, C. J. Edwards, J. I. Creaser, and W. J. Horrey, “Older driver failures of attention at intersections: using change blindness methods to assess turn decision accuracy,” Hum. Factors, 47 235–249 (2005). https://doi.org/10.1518/0018720054679542

D. M. Beck, G. Rees, C. D. Frith, and N. Lavie, “Neural correlates of change detection and change blindness,” Nat. Neurosci., 4 645–650 (2001). https://doi.org/10.1038/88477

S. A. Huettel, G. Guzeldere, and G. McCarthy, “Dissociating the neural mechanisms of visual attention in change detection using functional MRI,” J. Cogn. Neurosci., 13 1006–1018 (2001). https://doi.org/10.1162/089892901753165908

L. Pessoa and L. G. Ungerleider, “Neural correlates of change detection and change blindness in a working memory task,” Cereb. Cortex, 14 511–520 (2004). https://doi.org/10.1093/cercor/bhh013

H. C. Lau and R. E. Passingham, “Relative blindsight in normal observers and the neural correlate of visual consciousness,” Proc. Natl. Acad. Sci. USA, 103 18763–18768 (2006). https://doi.org/10.1073/pnas.0607716103

M. Niedeggen, P. Wichmann, and P. Stoerig, “Change blindness and time to consciousness,” Eur. J. Neurosci., 14 1719–1726 (2001). https://doi.org/10.1046/j.0953-816x.2001.01785.x

M. Turatto, A. Angrilli, V. Mazza, C. Umilta, and J. Driver, “Looking without seeing the background change: electrophysiological correlates of change detection versus change blindness,” Cognition, 84 B1–B10 (2002). https://doi.org/10.1016/S0010-0277(02)00016-1

M. Koivisto and A. Revonsuo, “An ERP study of change detection, change blindness, and visual awareness,” Psychophysiology, 40 423–429 (2003). https://doi.org/10.1111/1469-8986.00044

M. Eimer and V. Mazza, “Electrophysiological correlates of change detection,” Psychophysiology, 42 328–342 (2005). https://doi.org/10.1111/j.1469-8986.2005.00285.x

M. Koivisto, A. Revonsuo, and M. Lehtonen, “Independence of visual awareness from the scope of attention: an electrophysiological study,” Cereb. Cortex, 16 415–424 (2006). https://doi.org/10.1093/cercor/bhi121

G. Pourtois, M. De Pretto, C. A. Hauert, and P. Vuilleumier, “Time course of brain activity during change blindness and change awareness: performance is predicted by neural events before change onset,” J. Cogn. Neurosci., 18 2108–2129 (2006). https://doi.org/10.1162/jocn.2006.18.12.2108

J. Cavanaugh and R. H. Wurtz, “Subcortical modulation of attention counters change blindness,” J. Neurosci., 24 11236–11243 (2004). https://doi.org/10.1523/JNEUROSCI.3724-04.2004

L. Reddy, R. Q. Quiroga, P. Wilken, C. Koch, and I. Fried, “A single-neuron correlate of change detection and change blindness in the human medial temporal lobe,” Curr. Biol., 16 2066–2072 (2006). https://doi.org/10.1016/j.cub.2006.08.064

T. M. Mitchell, R. Hutchinson, R. S. Niculescu, F. Pereira, X. R. Wang, M. Just, and S. Newman, “Learning to decode cognitive states from brain images,” Mach. Learning, 57 145–175 (2004). https://doi.org/10.1023/B:MACH.0000035475.85309.1b

J. D. Haynes and G. Rees, “Decoding mental states from brain activity in humans,” Nat. Rev. Neurosci., 7 523–534 (2006). https://doi.org/10.1038/nrn1931

K. A. Norman, S. M. Polyn, G. J. Detre, and J. V. Haxby, “Beyond mind-reading: multi-voxel pattern analysis of fMRI data,” Trends Cogn. Sci., 10 424–430 (2006). https://doi.org/10.1016/j.tics.2006.07.005

K. J. Worsley, A. C. Evans, S. Marrett, and P. Neelin, “A three-dimensional statistical analysis for CBF activation studies in human brain,” J. Cereb. Blood Flow Metab., 12 900–918 (1992). https://doi.org/10.1038/jcbfm.1992.127

“Characterizing evoked hemodynamics with fMRI,” Neuroimage, 2 157–165 (1995). https://doi.org/10.1006/nimg.1995.1018

Y. Kamitani and F. Tong, “Decoding the visual and subjective contents of the human brain,” Nat. Neurosci., 8 679–685 (2005). https://doi.org/10.1038/nn1444

Y. Kamitani and F. Tong, “Decoding seen and attended motion directions from activity in the human visual cortex,” Curr. Biol., 16 1096–1102 (2006). https://doi.org/10.1016/j.cub.2006.04.003

B. Thirion, E. Duchesnay, E. Hubbard, J. Dubois, J. B. Poline, D. Lebihan, and S. Dehaene, “Inverse retinotopy: inferring the visual content of images from brain activation patterns,” Neuroimage, 33 1104–1116 (2006). https://doi.org/10.1016/j.neuroimage.2006.06.062

K. N. Kay, T. Naselaris, R. J. Prenger, and J. L. Gallant, “Identifying natural images from human brain activity,” Nature, 452 352–355 (2008). https://doi.org/10.1038/nature06713

M. D. Fox, A. Z. Snyder, J. L. Vincent, and M. E. Raichle, “Intrinsic fluctuations within cortical systems account for intertrial variability in human behavior,” Neuron, 56 171–184 (2007). https://doi.org/10.1016/j.neuron.2007.08.023

J. D. Haynes, K. Sakai, G. Rees, S. Gilbert, C. Frith, and R. E. Passingham, “Reading hidden intentions in the human brain,” Curr. Biol., 17 323–328 (2007). https://doi.org/10.1016/j.cub.2006.11.072

L. Pessoa and S. Padmala, “Decoding near-threshold perception of fear from distributed single-trial brain activation,” Cereb. Cortex, 17 691–701 (2007). https://doi.org/10.1093/cercor/bhk020

E. Eger, V. Michel, B. Thirion, A. Amadon, S. Dehaene, and A. Kleinschmidt, “Deciphering cortical number coding from human brain activity patterns,” Curr. Biol., 19 1608–1615 (2009). https://doi.org/10.1016/j.cub.2009.08.047

T. Ethofer, D. Van De Ville, K. Scherer, and P. Vuilleumier, “Decoding of emotional information in voice-sensitive cortices,” Curr. Biol., 19 1028–1033 (2009). https://doi.org/10.1016/j.cub.2009.04.054

H. Koizumi, Y. Yamashita, A. Maki, T. Yamamoto, Y. Ito, H. Itagaki, and R. Kennan, “Higher-order brain function analysis by trans-cranial dynamic near-infrared spectroscopy imaging,” J. Biomed. Opt., 4 403–413 (1999). https://doi.org/10.1117/1.429959

C. Hirth, H. Obrig, K. Villringer, A. Thiel, J. Bernarding, W. Muhlnickel, H. Flor, U. Dirnagl, and A. Villringer, “Non-invasive functional mapping of the human motor cortex using near-infrared spectroscopy,” Neuroreport, 7 1977–1981 (1996). https://doi.org/10.1097/00001756-199608120-00024

A. Maki, Y. Yamashita, E. Watanabe, and H. Koizumi, “Visualizing human motor activity by using non-invasive optical topography,” Front. Med. Biol. Eng., 7 285–297 (1996).

H. Obrig, T. Wolf, C. Doge, J. J. Hulsing, U. Dirnagl, and A. Villringer, “Cerebral oxygenation changes during motor and somatosensory stimulation in humans, as measured by near-infrared spectroscopy,” Adv. Exp. Med. Biol., 388 219–224 (1996).

H. Sato, M. Kiguchi, A. Maki, Y. Fuchino, A. Obata, T. Yoro, and H. Koizumi, “Within-subject reproducibility of near-infrared spectroscopy signals in sensorimotor activation after 6 months,” J. Biomed. Opt., 11 014021 (2006). https://doi.org/10.1117/1.2166632

T. Kato, A. Kamei, S. Takashima, and T. Ozaki, “Human visual cortical function during photic stimulation monitoring by means of near-infrared spectroscopy,” J. Cereb. Blood Flow Metab., 13 516–520 (1993). https://doi.org/10.1038/jcbfm.1993.66

G. Taga, K. Asakawa, A. Maki, Y. Konishi, and H. Koizumi, “Brain imaging in awake infants by near-infrared optical topography,” Proc. Natl. Acad. Sci. USA, 100 10722–10727 (2003). https://doi.org/10.1073/pnas.1932552100

K. Sakatani, S. Chen, W. Lichty, H. Zuo, and Y. P. Wang, “Cerebral blood oxygenation changes induced by auditory stimulation in newborn infants measured by near infrared spectroscopy,” Early Hum. Dev., 55 229–236 (1999). https://doi.org/10.1016/S0378-3782(99)00019-5

S. Tsujimoto, T. Yamamoto, H. Kawaguchi, H. Koizumi, and T. Sawaguchi, “Prefrontal cortical activation associated with working memory in adults and preschool children: an event-related optical topography study,” Cereb. Cortex, 14 703–712 (2004). https://doi.org/10.1093/cercor/bhh030

J. Fuster, M. Guiou, A. Ardestani, A. Cannestra, S. Sheth, Y. D. Zhou, A. Toga, and M. Bodner, “Near-infrared spectroscopy (NIRS) in cognitive neuroscience of the primate brain,” Neuroimage, 26 215–220 (2005). https://doi.org/10.1016/j.neuroimage.2005.01.055

K. Yamanaka, B. Yamagata, H. Tomioka, S. Kawasaki, and M. Mimura, “Transcranial magnetic stimulation of the parietal cortex facilitates spatial working memory: near-infrared spectroscopy study,” Cereb. Cortex, 20 1037–1045 (2010). https://doi.org/10.1093/cercor/bhp163

M. L. Schroeter, S. Zysset, T. Kupka, F. Kruggel, and D. Y. von Cramon, “Near-infrared spectroscopy can detect brain activity during a color-word matching Stroop task in an event-related design,” Hum. Brain Mapp., 17 61–71 (2002). https://doi.org/10.1002/hbm.10052

M. Boecker, M. M. Buecheler, M. L. Schroeter, and S. Gauggel, “Prefrontal brain activation during stop-signal response inhibition: an event-related functional near-infrared spectroscopy study,” Behav. Brain Res., 176 259–266 (2007). https://doi.org/10.1016/j.bbr.2006.10.009

H. Sato, T. Takeuchi, and K. L. Sakai, “Temporal cortex activation during speech recognition: an optical topography study,” Cognition, 73 B55–B66 (1999). https://doi.org/10.1016/S0010-0277(99)00060-8

M. Pena, A. Maki, D. Kovacic, G. Dehaene-Lambertz, H. Koizumi, F. Bouquet, and J. Mehler, “Sounds and silence: an optical topography study of language recognition at birth,” Proc. Natl. Acad. Sci. USA, 100 11702–11705 (2003). https://doi.org/10.1073/pnas.1934290100

R. Sitaram, H. H. Zhang, C. T. Guan, M. Thulasidas, Y. Hoshi, A. Ishikawa, K. Shimizu, and N. Birbaumer, “Temporal classification of multichannel near-infrared spectroscopy signals of motor imagery for developing a brain-computer interface,” Neuroimage, 34 1416–1427 (2007). https://doi.org/10.1016/j.neuroimage.2006.11.005

I. Nambu, R. Osu, M. A. Sato, S. Ando, M. Kawato, and E. Naito, “Single-trial reconstruction of finger-pinch forces from human motor-cortical activation measured by near-infrared spectroscopy (NIRS),” Neuroimage, 47 628–637 (2009). https://doi.org/10.1016/j.neuroimage.2009.04.050

S. Luu and T. Chau, “Decoding subjective preference from single-trial near-infrared spectroscopy signals,” J. Neural Eng., 6 016003 (2009). https://doi.org/10.1088/1741-2560/6/1/016003

K. Tai and T. Chau, “Single-trial classification of NIRS signals during emotional induction tasks: towards a corporeal machine interface,” J. Neuroeng. Rehabil., 6 39–52 (2009). https://doi.org/10.1186/1743-0003-6-39

N. Birbaumer, “Breaking the silence: brain-computer interfaces (BCI) for communication and motor control,” Psychophysiology, 43 517–532 (2006). https://doi.org/10.1111/j.1469-8986.2006.00456.x

F. Matthews, B. A. Pearlmutter, T. E. Ward, C. Soraghan, and C. Markham, “Hemodynamics for brain-computer interfaces: optical correlates of control signals,” IEEE Signal Process. Mag., 25 87–94 (2008). https://doi.org/10.1109/MSP.2008.4408445

K. Utsugi, A. Obata, H. Sato, R. Aoki, A. Maki, H. Koizumi, K. Sagara, H. Kawamichi, H. Atsumori, and T. Katura, “GO-STOP control using optical brain-computer interface during calculation task,” IEICE Trans. Commun., 91 2133–2141 (2008). https://doi.org/10.1093/ietcom/e91-b.7.2133

AT&T Laboratories Cambridge, “The Database of Faces,” accessed July 9, 2011, http://www.cl.cam.ac.uk/research/dtg/attarchive/facedatabase.html

M. L. Schroeter, T. Kupka, T. Mildner, K. Uludag, and D. Y. von Cramon, “Investigating the post-stimulus undershoot of the BOLD signal—a simultaneous fMRI and fNIRS study,” Neuroimage, 30 349–358 (2006). https://doi.org/10.1016/j.neuroimage.2005.09.048

T. J. Huppert, R. D. Hoge, A. M. Dale, M. A. Franceschini, and D. A. Boas, “Quantitative spatial comparison of diffuse optical imaging with blood oxygen level-dependent and arterial spin labeling-based functional magnetic resonance imaging,” J. Biomed. Opt., 11 064018 (2006). https://doi.org/10.1117/1.2400910

H. H. Jasper, “The ten-twenty electrode system of the International Federation,” Electroencephalogr. Clin. Neurophysiol., 10 371–375 (1958).

A. K. Singh, M. Okamoto, H. Dan, V. Jurcak, and I. Dan, “Spatial registration of multichannel multi-subject fNIRS data to MNI space without MRI,” Neuroimage, 27 842–851 (2005). https://doi.org/10.1016/j.neuroimage.2005.05.019

N. Tzourio-Mazoyer, B. Landeau, D. Papathanassiou, F. Crivello, O. Etard, N. Delcroix, B. Mazoyer, and M. Joliot, “Automated anatomical labeling of activations in SPM using a macroscopic anatomical parcellation of the MNI MRI single-subject brain,” Neuroimage, 15 273–289 (2002). https://doi.org/10.1006/nimg.2001.0978

T. Katura, H. Sato, and H. Tanaka, “Toward standard analysis of functional NIRS: Common platform software for signal analysis tools.”

Y. Hoshi, N. Kobayashi, and M. Tamura, “Interpretation of near-infrared spectroscopy signals: a study with a newly developed perfused rat brain model,” J. Appl. Physiol., 90 1657–1662 (2001).

T. Katura, N. Tanaka, A. Obata, H. Sato, and A. Maki, “Quantitative evaluation of interrelations between spontaneous low-frequency oscillations in cerebral hemodynamics and systemic cardiovascular dynamics,” Neuroimage, 31 1592–1600 (2006). https://doi.org/10.1016/j.neuroimage.2006.02.010

T. Katura, H. Sato, Y. Fuchino, T. Yoshida, H. Atsumori, M. Kiguchi, A. Maki, M. Abe, and N. Tanaka, “Extracting task-related activation components from optical topography measurement using independent components analysis,” J. Biomed. Opt., 13 054008 (2008). https://doi.org/10.1117/1.2981829

J. V. Haxby, M. I. Gobbini, M. L. Furey, A. Ishai, J. L. Schouten, and P. Pietrini, “Distributed and overlapping representations of faces and objects in ventral temporal cortex,” Science, 293 2425–2430 (2001). https://doi.org/10.1126/science.1063736

T. A. Carlson, P. Schrater, and S. He, “Patterns of activity in the categorical representations of objects,” J. Cogn. Neurosci., 15 704–717 (2003). https://doi.org/10.1162/jocn.2003.15.5.704

D. D. Cox and R. L. Savoy, “Functional magnetic resonance imaging (fMRI) ‘brain reading’: detecting and classifying distributed patterns of fMRI activity in human visual cortex,” Neuroimage, 19 261–270 (2003). https://doi.org/10.1016/S1053-8119(03)00049-1

V. N. Vapnik, The Nature of Statistical Learning Theory, Springer Verlag, Berlin (2000).

J. MacQueen, “Some methods for classification and analysis of multivariate observations,” 281–297 (1967).

M. S. Gazzaniga and C. S. Smylie, “Facial recognition and brain asymmetries: clues to underlying mechanisms,” Ann. Neurol., 13 536–540 (1983). https://doi.org/10.1002/ana.410130511

J. Sergent, S. Ohta, and B. MacDonald, “Functional neuroanatomy of face and object processing: a positron emission tomography study,” Brain, 115 (Pt 1) 15–36 (1992). https://doi.org/10.1093/brain/115.1.15

N. Kanwisher, J. McDermott, and M. M. Chun, “The fusiform face area: a module in human extrastriate cortex specialized for face perception,” J. Neurosci., 17 4302–4311 (1997).

M. Boly, E. Balteau, C. Schnakers, C. Degueldre, G. Moonen, A. Luxen, C. Phillips, P. Peigneux, P. Maquet, and S. Laureys, “Baseline brain activity fluctuations predict somatosensory perception in humans,” Proc. Natl. Acad. Sci. USA, 104 12187–12192 (2007). https://doi.org/10.1073/pnas.0611404104

T. Eichele, S. Debener, V. D. Calhoun, K. Specht, A. K. Engel, K. Hugdahl, D. Y. von Cramon, and M. Ullsperger, “Prediction of human errors by maladaptive changes in event-related brain networks,” Proc. Natl. Acad. Sci. USA, 105 6173–6178 (2008). https://doi.org/10.1073/pnas.0708965105

Y. B. Sirotin and A. Das, “Anticipatory haemodynamic signals in sensory cortex not predicted by local neuronal activity,” Nature, 457 475–479 (2009). https://doi.org/10.1038/nature07664

R. Desimone and J. Duncan, “Neural mechanisms of selective visual attention,” Annu. Rev. Neurosci., 18 193–222 (1995). https://doi.org/10.1146/annurev.ne.18.030195.001205

L. Itti and C. Koch, “Computational modelling of visual attention,” Nat. Rev. Neurosci., 2 194–203 (2001). https://doi.org/10.1038/35058500

L. G. Dominguez, “On the risk of extracting relevant information from random data,” J. Neural Eng., 6 058001 (2009). https://doi.org/10.1088/1741-2560/6/5/058001

X. Cui, S. Bray, D. M. Bryant, G. H. Glover, and A. L. Reiss, “A quantitative comparison of NIRS and fMRI across multiple cognitive tasks,” Neuroimage, 54 2808–2821 (2011). https://doi.org/10.1016/j.neuroimage.2010.10.069

T. Muehlemann, D. Haensse, and M. Wolf, “Wireless miniaturized in-vivo near infrared imaging,” Opt. Express, 16 10323–10330 (2008). https://doi.org/10.1364/OE.16.010323

H. Atsumori, M. Kiguchi, A. Obata, H. Sato, T. Katura, T. Funane, and A. Maki, “Development of wearable optical topography system for mapping the prefrontal cortex activation,” Rev. Sci. Instrum., 80 043704 (2009). https://doi.org/10.1063/1.3115207

X. Cui, S. Bray, and A. L. Reiss, “Speeded near infrared spectroscopy (NIRS) response detection,” PLoS One, 5 e15474 (2010). https://doi.org/10.1371/journal.pone.0015474

S. Fazli, F. Popescu, M. Danóczy, B. Blankertz, K. R. Müller, and C. Grozea, “Subject-independent mental state classification in single trials,” Neural Networks, 22 1305–1312 (2009). https://doi.org/10.1016/j.neunet.2009.06.003