|

|

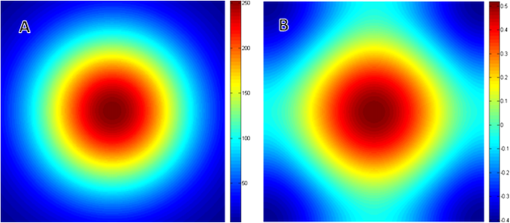

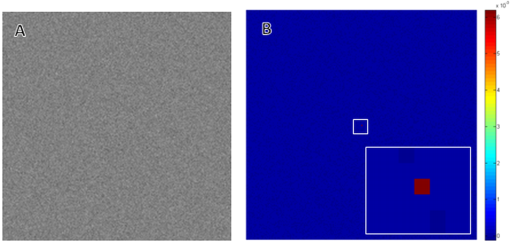

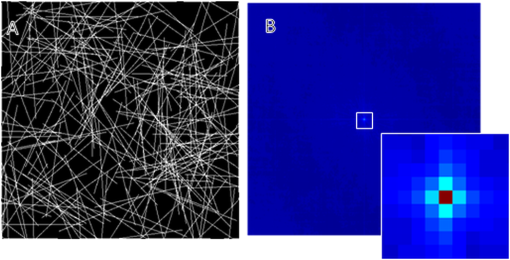

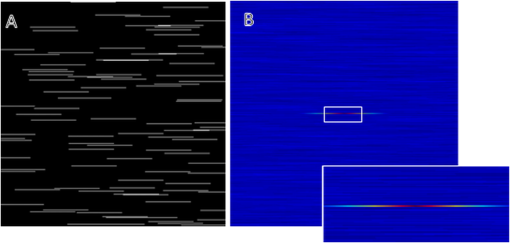

1.IntroductionImage correlation spectroscopy (ICS) has gained acceptance for its ability to quantitatively analyze fluorophore distribution in a user-independent manner from relatively noisy images. Originally described in 1993 using math from statistical mechanics,1 it has since gained acceptance for analyzing biological macromolecule organization ( publications using the term in the title). ICS is especially adept at distinguishing images with a homogeneous fluorophore (macromolecule) distribution from those with the same mean intensity, but uneven, clustered fluorophore distribution in an automated and user-independent fashion. The ability to distinguish these two states is extremely useful in biological systems where clustering of macromolecules can differentiate cellular processes (e.g., receptor aggregation2–4 and collagen fiber organization).5 ICS has since been extended to analyze fluorophore colocalization (cross correlation ICS),6–8 dispersion and diffusion in time (spatiotemporal9–11 and raster ICS),12–16 and macromolecular structure.5,17 More information about these variants can be found in several recent reviews,6 showing the versatility of this mathematical technique. ICS, in all its variants, relies on calculation of the image autocorrelation followed by a fit of the autocorrelation function to a two-dimensional (2-D) Gaussian function to extract quantitative parameters. Few previous works have analyzed the effects of image structure or artifacts on the fidelity of this process, and those that have rely heavily on simulations.17,18 Both autocorrelation and Gaussian curve fitting can be analyzed analytically via estimation theory; however, no previous work has established the theory necessary or described the effect of imaging artifacts in this manner. It is important to understand the mathematical theory underlying autocorrelation-based analysis for two reasons. Firstly, preprocessing, curve fitting, and noise reduction induce significant variance in ICS analyses. The lack of consensus in preprocessing and analysis makes comparing results across publications difficult, and may bias results. Secondly, the peak of the autocorrelation function is inversely related to particle number, modified by particle size and shape; however, the autocorrelation function also encodes further information about image structure. By expanding on autocorrelation theory, quantitative image analysis can be advanced. Instead of using simulations to model autocorrelation-based image analysis, we seek instead to analytically describe the effects of artifacts commonly seen in laser-based microscopy and image processing operations, followed by a series of practical recommendations that will provide greater uniformity and accuracy in applying ICS to fluorescent images. 2.Three Definitions of AutocorrelationAutocorrelation itself is logically equivalent to comparing all possible pixel pairs and reporting the likelihood that both will be bright as a function of the distance and direction of separation. In a more mathematical definition, autocorrelation is the convolution of a function with itself. For a digital image , of size (images are discretely spatially defined, 2- to 4-dimensional, of finite extent, and have real, bounded, digital values), autocorrelation can be calculated by Eq. (1) where is the autocorrelation function, is the image intensity at position , and and represent the distance (or lag) from the corresponding and position. The analysis in this work assumes that the image is homogeneous (or ergodic). For example, if an image has boundaries like a cell on a background it should be cropped to be homogeneous. As the mean value and range of real digital images are based on acquisition system and parameters instead of the underlying structure, the normalized autocorrelation, denoted , is used for analyses such as ICS. It is calculated by dividing by the square of the mean intensity and subtracting 1 from that quantity, where is the Fourier transform of . For images which are isotropic (i.e., no orientation), and will be radially symmetric, which thus produces , where is the length to .Practically, Eqs. (1) and (2) are almost never used; autocorrelations can be calculated far more efficiently via fast Fourier transforms using the Weiner-Khinchin theorem, where is the power spectrum of the image. This powerful theorem states that the Fourier transform of the autocorrelation of an image is equal to the inverse Fourier transform of . The power spectrum can be calculated by squaring the magnitude of the Fourier transform of , which for real-valued functions is equivalent to multiplying the Fourier transform by its converse. This theorem is important for two reasons. First, it reduces processing time [from to ], and, second, it links the autocorrelation operator to the more commonly understood (and blessedly linear) Fourier transform.The last formulation for the autocorrelation operator we will use for this analysis is a heuristic formulation of the probability that two points will accord based on the vector between them. We will focus on the expected autocorrelations for image classes which are defined statistically (i.e., white noise with a certain spatial and intensity profile). For these image classes, autocorrelation can be understood as the product of the predicted intensity distribution of a pixel in the image and the predicted intensity distribution of pixels a certain distance away . This gives a predicted image autocorrelation, , and predicted normalized autocorrelation (assuming the probability density sums to 1), The normalized probability density function of a real image can be calculated by dividing the intensity distribution of the image by the sum of the image values, giving a probability function which sums to 1.3.Creation of Test ImagesCreating images to test new autocorrelation-based techniques requires understanding of the acquisition system that will be used for experimental images. Ideally these test images would be created on the acquisition system itself, in which case, the correct noise and point spread fuction (PSF) artifacts will already be part of the image. If this is not feasible, algorithms should be tested against test images with artificially created artifacts and blur of the same magnitude as expected in real images. 4.Effects of Common Artifacts and Processing Steps4.1.ScalingMultiplication of all image values by a constant, impacts the autocorrelation nonlinearly: , but it does not affect the normalized autocorrelation , as . For digital images, scaling may improve autocorrelation fidelity as decimation errors are minimized. Empirically, scaling an image to use the entire range of the detector system will offer the best data fidelity. 4.2.Linear FilteringLinear filtering is defined by the convolution of image with the discrete filter kernel , and is equivalent to (and normally calculated by) multiplying their Fourier transforms: . It is then trivial to see that applying a filter to an image before autocorrelation would be equivalent to convolving the autocorrelation of the image with the autocorrelation of the filter. From a practical point of view, if an image is filtered before autocorrelation is performed, roundoff error may be worth considering. Repeated transformations into Fourier space can degrade the image if bit-depth is insufficient. Similarly, real optical systems have a finite (PSF, commonly modeled as a Gaussian function), which serves as a low-pass filter for the acquired image. Therefore, the autocorrelation of a confocal or multiphoton image will equal that of the true image convolved with the autocorrelation of the optical system point spread function. Convolving two radially symmetric 2-D Gaussians (with amplitudes and and deviations and ) in two dimensions results in a third Gaussian function with a standard deviation equal to and amplitude . If one standard deviation, say that of the optical system, is much larger than the other, it will tend to dominate the deviation of the resulting function. As a result, for images of small particles (smaller than the diffraction limit), the deviation of the Gaussian fit will relate to the PSF for confocal or multiphoton systems, limited by the spot size of the laser (laser beam waist).1 On the other hand, this will not be the case for images which have broader autocorrelations, like images of fibers longer than the diffraction limit (see Sec. 5.4 on randomly oriented fibers). Comparing the standard deviation and the beam waist (or optical system point spread function) has been used in the past as a check on the processing fidelity. Thus, if the standard deviation from the Gaussian fit approximately equals the beam waist (i.e., a positive result) then acquisition and optimization are satifactory, but a negative result does not necessitate image rejection. 4.3.Addition of Two ImagesThe importance of adding two images lies in the ability to model noise as an image added to the information-containing image. For Gaussian white noise [e.g., thermal fluctuations in a charge coupled device (CCD)], this assumption is commonly made and reasonably accurate. The autocorrelation of the superposition of two images and is Therefore, the autocorrelation of the sum of two images is equal to the autocorrelation of each plus the convolution of image with image . If noise is added to an image, the autocorrelation of the noisy image will be affected both by the autocorrelation of the noise with itself and the convolution of the noise with the image. More details of the implications are described below in Sec. 5.2 on white noise.4.4.ThresholdingThresholding reduces the intensity information of an image to 0 or 1, allowing use of binary image processing algorithms, and increasing the image contrast. Theoretically, thresholding should minimally affect the spatial distribution (which autocorrelation principally analyzes) of the image. By definition, it will alter the intensity probability density function, which will affect the scaling of the normalized autocorrelation. The maximum of the autocorrelation relates to both image structure and image probability distribution. A binary image has a mean intensity of one times the fraction of pixels which are bright. Once normalized, the thresholded image will result in a mean normalized probability distribution of 1. Any unthresholded image with a broad normalized intensity probability function would have a lower average. In theory, therefore, thresholding before autocorrelation could allow for analysis of spatial distribution independent of intensity. In practice, thresholding will affect spatial distribution as well as normalized probability distribution. For example, thresholding will enhance the edges of images that have some finite intensity roll off (soft edges), serving as a high-pass filter. Depending on the threshold chosen, particle size may be over- or underestimated, which will affect the roll off of the autocorrelation function and its maximum value. In cases where thresholding is hard, (relatively poor signal to noise ratio or high background to signal ratio) thresholding may obviate the underlying signal. In experimental work, the threshold level was shown to greatly affect autocorrelation maximum.17 Likewise, if the image is relatively noisy, thresholding can amplify noise, reducing autocorrelation fidelity. For these reasons, thresholding before autocorrelation analysis should be done judiciously and documented well. 4.5.Background SubtractionBackground subtraction is a nonlinear noise reduction technique. An image of an empty field is taken to assess the noise levels of the detector system, and the mean value of this noise is set to be the 0 value for actual images. This will ensure that the background of real images is close to zero, while maintaining the fidelity of information-containing regions.5 This technique is an inherently nonlinear technique (making it difficult to quantitatively analyze) that affects only relatively dark pixels. The effect should be relatively minimal, however, as bright pixels dominate the autocorrelation. 4.6.SamplingAs digital images have defined pixel sizes, many of the concepts presented here will hold true only in a statistical sense. Smaller images are more likely to differ from statistical norms due to smaller sample size. Autocorrelation has been performed on image subsets down to pixel size, however, this is not recommended. On the other hand, the region analyzed should be homogeneous. Edges (e.g., cell borders) will tend to dominate the Fourier transform and hence the autocorrelation. Thus, larger image regions minimize statistical deviations, with the caveat that the region should be homogeneous. Lastly, for laser-based imaging techniques, if the image pixel size is greater than the laser beam waist, sampling will serve as a form of low-pass filtering. If the pixel size is less than the laser beam waist, then the laser itself will serve as the primary filter. Previous work has used the laser beam waist as an independent validation of the fitting process19; however, to do so the detector must oversample the field of view relative to the laser beam waist. 5.Autocorrelation of Example Image Classes5.1.Monochrome FieldA monochrome field image is equal to a constant for all and . The power spectrum of a flat field is equal to the sum of the image at the origin and zero elsewhere (akin to the Dirac delta function). The inverse Fourier transform of this power spectrum is equal to zero, so the normalized autocorrelation will be a flat field. A monochrome image, , superimposed on an information containing image, , will not affect the autocorrelation of . That is, , as and . 5.2.White NoiseWhite noise is spatially random, which gives it an even power spectrum across all frequencies (i.e., ). White describes the spatial correlation of intensity, whereas the intensity distribution of any given pixel can vary (e.g., Gaussian or Poisson). Many types of noise can be modeled as white, including background thermal noise (which is commonly modeled as Gaussian white noise.) An image comprised of white noise, by definition, has a flat power spectrum with a variance from the mean proportional to the intensity profile of the noise squared. A flat power spectrum will give a normalized autocorrelation equal to the Dirac delta function. In theory, therefore, ideal white noise will have an autocorrelation equal to zero everywhere but the origin. The value at the origin is equal to the normalized power spectrum squared. A value of 1 corresponds to “salt and pepper” noise; for Gaussian white noise the value will be the mean amplitude of the noise squared. (See Fig. 1.) Fig. 1Autocorrelation of Gaussian white noise. Frame (a) shows the original image and (b) its autocorrelation. The autocorrelation is very close to zero except at the origin.  When white noise is added to an information containing image, the autocorrelation of the resulting image should theoretically be unchanged. The autocorrelation of the information-containing image plus that of the white noise (equal to zero except at the origin), plus the convolution of the noise with the image (which should be similar to zero if the noise and the image are dissimilar) all sum to just the autocorrelation of the information-containing image: , as except at the origin and as noise and the information containing image should be uncorrelated. In practice, however, adding random noise to an image will blur the resulting autocorrelation. The values and are equal to zero only in a statistical sense: has mean of 0 and a standard deviation related to the noise distribution. The correlation between the noise and the information containing image, , should be close to zero for most image classes, though this depends on the information containing image. For these reasons it is important to acquire images with the best signal to noise ratio possible, and to omit the autocorrelation data at the origin from the Gaussian fits. It should be noted that using low-pass filtering to reduce noise for autocorrelation analysis will adversely affect autocorrelation fidelity. Theoretically, the autocorrelation of white noise should be confined to the origin; however, low-pass filtering will artificially broaden , the autocorrelation of the noise, which thus affects the curve fitting process. Lastly, it should be noted that for images of white noise, limited image size will increase the chance that the calculated autocorrelation will deviate from statistical norms (due to limited sample size). The mathematical framework described thus far deals with a representative image obeying statistical norms. Statistical chance may generate images which vary from the expected described above with likelihood inversely proportional to image size, once again highlighting the importance of acquiring appropriately large images. 5.3.Gaussian FunctionGaussian functions are commonly used as low-pass filters or to model the point spread function of a laser. A 2-D Gaussian function centered at the origin with deviations in the and directions of and is defined by the equation . The autocorrelation of this function is another Gaussian: , with smaller standard deviations and a different amplitude (Fig. 2). Since the integral of over the whole real plane is , the normalized autocorrelation . Note that this normalized autocorrelation is equal to at the origin, and is equal to -1when or approach infinity. 5.4.Randomly Dispersed and Randomly Oriented FibersThis image will be comprised of randomly dispersed narrow fibers ( fibers per unit area) of length (and width of 1 pixel), all aligned randomly. To start, we will assume that the image is comprised of perfectly dark background pixels with evenly bright fibers. The probability of any pixel being part of fiber is therefore . Given that the first pixel is part of a fiber, the probability that a second pixel at will also be part of the fiber is equal to the probability that the fiber lies in direction times the probability that if the fiber lies in the same direction, will not exceed the fiber’s length . therefore is equal to or . For digital images, which are discretized, where the number of points pixels away from a center point is better modeled as (as opposed to ). therefore becomes . The image intensity is which therefore gives the following predicted autocorrelation for digital images, (See Fig. 3). If the center pixel of this function is omitted, the Gaussian function fit will be underestimated, and underestimated more severely the more the peripheral regions of the autocorrelation are emphasized.Fig. 3Autocorrelation of randomly dispersed fibers. Frame (a) shows the test image and (b) its autocorrelation. Note the non-Gaussian profile of this image: the falloff is faster than Gaussian.  For a laser-based image, assuming no noise, the autocorrelation will equal of the information-containing image convolved with that of the optical system, which we will model as a Gaussian with deviation .The solution, while closed form, is not simple. We can state, however, that the autocorrelation of an information containing image convolved with a Gaussian beam has an autocorrelation which is not strictly Gaussian as the falloff in the central regions will be faster, and in the outlying regions slower. The peak of this function will equal , however, as the falloff is faster than Gaussian, the fit will underestimate this value. If using ICS to analyze images of fibers, changes in number density and length will both affect the result, and will underestimate particle number. 6.Anisotropic Images6.1.Horizontal Lines of Random IntensityThe next image to be considered is anisotropic (i.e., image properties depend on orientation) characterized by parallel lines with random intensity. Note that this image is equivalent to a line of white noise, expanded horizontally to fill the image size. This anisotropic image will have an anisotropic autocorrelation: the central line of the autocorrelation, which evaluates the concordance of any two pixels on the horizontal line, will be maximal while the rest of the image will be zero. If we assume that this image of streaks is binary (i.e., it has been thresholded such that any pixel has a value of either 0 or 1), the value of the normalized autocorrelation along the horizontal axis will be 1. If we allow the value of the streaks to vary, the value along the horizontal axis will equal the mean of the normalized image intensity probability density function (since the probability that a first pixel will be bright is equal to , and the probability that a pixel next to it will be bright is 1). If an image of horizontal white streaks is acquired with an optical system with a Gaussian point spread function, the resulting autocorrelation will have a Gaussian profile along the -axis (horizontal axis) with deviation equal to that of the optical point spread function. 6.2.Aligned White FibersAutocorrelation is sensitive to alignment, as demonstrated in the horizontal lines of random intensity. To start, assume all fibers are equally bright, 1 pixel wide, have a length of and a density of fibers per area, and aligned horizontally. Unlike the previous case, the fibers in this image have a finite length of . The probability of a first point being part of a fiber is equal to , and the probability of a second point at a horizontal distance away being part of the same fiber is equal to . Note that if the direction of interest is not parallel to the horizontal the probability of that point also being part of a fiber is akin to the white noise scenario, e.g., equal to 0. The mean value of the image is , so after normalization the following equation is obtained We can see that if we were to model this function with a 2-D Gaussian function, the standard deviation in the minor axis will approach zero, whereas in the major axis it will be proportional to as noted in previous work that used simulations (Fig. 4).18Fig. 4Autocorrelation of aligned fibers. Frame (a) shows whitely distributed aligned fibers and frame (b) its autocorrelation. Note that the horizontal axis contains the only nonzero elements.  It is important to note that for images acquired with a real optical system, the deviation on the minor axis is bounded by that of the point spread function of the system. As such, reporting the ratio of the major and minor axis standard deviation (elipticity20 or skew5) may be misleading. If the autocorrelation of the true image has uneven deviations ( and ) and is convolved with a radially symmetric Gaussian PSF with deviation , the resulting function will have uneven distributions equal to Comparing deviation of the major and minor axes will give If is small relative to and , ellipticity will be accurate, but if not, elipticity will not relate linearly to the ratio of and .7.Curve FittingAll ICS analysis uses nonlinear optimization to fit a 2-D Gaussian to the autocorrelation function, with wide variation in the method and equation used for fitting. Having described predicted image autocorrelations for several image classes, we can analytically describe some aspects of the curve fitting process and offer some recommendations. A full description of methods for Gaussian curve fitting is beyond the scope of this work (Refs. 21 and 22); nonetheless, we can provide a review of the strengths and weaknesses of previously published methods. When ICS was first introduced in 1993, nonlinear optimization was time consuming and complicated, necessitating a reductionist approach. As a result, the autocorrelation was fit to 1-D Gaussian functions along the two axes only. More modern work crops the information-containing regions of the autocorrelation function and then fits the entire region to a 2-D Gaussian function. Cropping varies in different publications, ranging from the central pixels to three times the laser beam width.23 Likewise, several different Gaussian functions have been used, ranging from simple models which assume the same standard deviation in both and (see Ref. 1) to more complicated models which allow different deviations on the major and minor axes.20 Despite these advances, accurate curve fitting remains tricky, given that several image classes have non-Gaussian autocorrelations. 7.1.CroppingCropping speeds curve fitting and rejects regions with low signal to noise ratio. As processing power increases and user-friendly curve fitting packages become more widely dispersed, cropping for speed becomes less important. However, rejecting regions with low signal to noise remains a fundamental reason to consider cropping. If the autocorrelation of an image is Gaussian with some noise, the central regions with higher values will have higher signal to noise ratio. Likewise, points further from the center of the autocorrelation have a smaller signal to noise ratio, but there are more of these points which provides an intrinsic weighting of these points. Cropping reduces this intrinsic bias, which explains its widespread adoption. For laser based images with Gaussian autocorrelations dominated by the laser point spread function, cropping to three times the radius of the point spread function will provide an image containing the useable data while rejecting the regions with low signal to noise.23 However, most interesting images have structures larger than the laser point spread function in them (at least if the laser was correctly selected), which will lead to images with broader autocorrelations. Cropping at three times the beam waist will weight the fit to shorter standard deviations. For images that have non-Gaussian autocorrelations, such as the randomly oriented fibers case discussed above, the amplitude of the Gaussian will be higher for more severe cropping. Alternatives to cropping followed by fitting include maximum likelihood estimation fitting21,22 and weighted least squares optimization. 7.2.Gaussian ModelEarly work was most interested in the amplitude alone of the function, and thus a simple equation assuming radial symmetry was used. More recent work, investigating either alignment or cross correlation, have used more complicated formulations of the Gaussian. A particularly attractive Gaussian form is, This form copes with both center-misalignment and oriented images, but necessitates a seven parameter fit, which may be unwieldy. However, if the autocorrelation is known to be well-centered, and can be set a priori, which reduces the parameter space by two. 7.3.Data Omission for Noise ReductionAs white noise exclusively affects the origin of the autocorrelation, the origin is normally omitted from the fit, as is the data from any known artifacts. If an image of a dark field is taken, the quantity and distribution of noise can be analyzed. Therefore it is good practice to acquire a blank image (picture of nothing) to determine system noise, and to use the autocorrelation of this image to inform artifact rejection. Note that optimization of Eq. (10) is guaranteed to not be convex, which makes fitting difficult (as , for example). One can either rely on constraints to minimize the parameter space to create a convex subspace, or work on creating good initial conditions to aid convergence. Empirically, good initial conditions are needed for efficient, correct optimization. Theoretically ideal initial conditions have been the subject of previous work.24 8.ConclusionsAutocorrelation offers a tool to analyze image structure in a user-independent manner and has proven useful in analysis of several macromolecule systems. Given the variation in image preprocessing and autocorrelation analysis, we felt it necessary to describe the effects of several common processes and offer recommendations for greater uniformity and accuracy. ReferencesN. O. Petersenet al.,

“Quantitation of membrane receptor distributions by image correlation spectroscopy: concept and application,”

Biophys. J., 65

(3), 1135

–1146

(1993). http://dx.doi.org/10.1016/S0006-3495(93)81173-1 BIOJAU 0006-3495 Google Scholar

T. SungkawornY. LenburyV. Chatsudthipong,

“Oxidative stress increases angiotensin receptor type I responsiveness by increasing receptor degree of aggregation using image correlation spectroscopy,”

Biochim. Biophys. Acta, 1808

(10), 2496

–2500

(2011). http://dx.doi.org/10.1016/j.bbamem.2011.07.007 BBACAQ 0006-3002 Google Scholar

J. BonorA. Nohe,

“Image correlation spectroscopy to define membrane dynamics,”

Methods Mol. Biol., 591 353

–364

(2010). http://dx.doi.org/10.1007/978-1-60761-404-3 1064-3745 Google Scholar

E. KeatingA. NoheN. O. Petersen,

“Studies of distribution, location and dynamic properties of EGFR on the cell surface measured by image correlation spectroscopy,”

Eur. Biophys. J., 37

(4), 469

–481

(2008). http://dx.doi.org/10.1007/s00249-007-0239-y EBJOE8 0175-7571 Google Scholar

C. B. Raubet al.,

“Image correlation spectroscopy of multiphoton images correlates with collagen mechanical properties,”

Biophys. J., 94

(6), 2361

–2373

(2008). http://dx.doi.org/10.1529/biophysj.107.120006 BIOJAU 0006-3495 Google Scholar

D. L. KolinP. W. Wiseman,

“Advances in image correlation spectroscopy: measuring number densities, aggregation states, and dynamics of fluorescently labeled macromolecules in cells,”

Cell Biochem. Biophys., 49

(3), 141

–164

(2007). http://dx.doi.org/10.1007/s12013-007-9000-5 CBBIFV 1085-9195 Google Scholar

J. V. RocheleauP. W. WisemanN. O. Petersen,

“Isolation of bright aggregate fluctuations in a multipopulation image correlation spectroscopy system using intensity subtraction,”

Biophys. J., 84

(6), 4011

–4022

(2003). http://dx.doi.org/10.1016/S0006-3495(03)75127-3 BIOJAU 0006-3495 Google Scholar

S. Semrauet al.,

“Quantification of biological interactions with particle image cross-correlation spectroscopy (PICCS),”

Biophys. J., 100

(7), 1810

–1818

(2011). http://dx.doi.org/10.1016/j.bpj.2010.12.3746 BIOJAU 0006-3495 Google Scholar

J. Boveet al.,

“Magnitude and direction of vesicle dynamics in growing pollen tubes using spatiotemporal image correlation spectroscopy and fluorescence recovery after photobleaching,”

Plant Physiol., 147

(4), 1646

–1658

(2008). http://dx.doi.org/10.1104/pp.108.120212 PLPHAY 0032-0889 Google Scholar

B. HebertS. CostantinoP. W. Wiseman,

“Spatiotemporal image correlation spectroscopy (STICS) theory, verification, and application to protein velocity mapping in living CHO cells,”

Biophys. J., 88

(5), 3601

–3614

(2005). http://dx.doi.org/10.1529/biophysj.104.054874 BIOJAU 0006-3495 Google Scholar

M. RossowW. W. MantulinE. Gratton,

“Spatiotemporal image correlation spectroscopy measurements of flow demonstrated in microfluidic channels,”

J. Biomed. Opt., 14

(2), 024014

(2009). http://dx.doi.org/10.1117/1.3088203 JBOPFO 1083-3668 Google Scholar

C. M. Brownet al.,

“Raster image correlation spectroscopy (RICS) for measuring fast protein dynamics and concentrations with a commercial laser scanning confocal microscope,”

J. Microsc., 229

(1), 78

–91

(2008). http://dx.doi.org/10.1111/jmi.2008.229.issue-1 JMICAR 0022-2720 Google Scholar

E. Gielenet al.,

“Measuring diffusion of lipid-like probes in artificial and natural membranes by raster image correlation spectroscopy (RICS): use of a commercial laser-scanning microscope with analog detection,”

Langmuir, 25

(9), 5209

–5218

(2009). http://dx.doi.org/10.1021/la8040538 LANGD5 0743-7463 Google Scholar

S. C. Norriset al.,

“Raster image correlation spectroscopy as a novel tool to study interactions of macromolecules with nanofiber scaffolds,”

Acta Biomaterilas, 7

(12), 4195

–4203

(2011). Google Scholar

M. J. Rossowet al.,

“Raster image correlation spectroscopy in live cells,”

Nat. Protoc., 5

(11), 1761

–1774

(2010). http://dx.doi.org/10.1038/nprot.2010.122 NPARDW 1750-2799 Google Scholar

M. VendelinR. Birkedal,

“Anisotropic diffusion of fluorescently labeled ATP in rat cardiomyocytes determined by raster image correlation spectroscopy,”

Am. J. Physiol. Cell Physiol., 295

(5), C1302

–1315

(2008). http://dx.doi.org/10.1152/ajpcell.00313.2008 0363-6143 Google Scholar

P. W. Wisemanet al.,

“Counting dendritic spines in brain tissue slices by image correlation spectroscopy analysis,”

J. Microsc., 205

(2), 177

–186

(2002). http://dx.doi.org/10.1046/j.0022-2720.2001.00985.x JMICAR 0022-2720 Google Scholar

S. Costantinoet al.,

“Accuracy and dynamic range of spatial image correlation and cross-correlation spectroscopy,”

Biophys. J., 89

(2), 1251

–1260

(2005). http://dx.doi.org/10.1529/biophysj.104.057364 BIOJAU 0006-3495 Google Scholar

A. M. PetersenB. K. Pedersen,

“The role of IL-6 in mediating the anti-inflammatory effects of exercise,”

J. Physiol. Pharmacol., 57

(10), 43

–51

(2006). JPHPEI 0867-5910 Google Scholar

S. M. MirB. BaggettU. Utzinger,

“The efficacy of image correlation spectroscopy for characterization of the extracellular matrix,”

Biomed. Opt. Express, 3

(2), 215

–224

(2012). http://dx.doi.org/10.1364/BOE.3.000215 BOEICL 2156-7085 Google Scholar

N. HagenE. L. Dereniak,

“Gaussian profile estimation in two dimensions,”

Appl. Opt., 47

(36), 6842

–6851

(2008). http://dx.doi.org/10.1364/AO.47.006842 APOPAI 0003-6935 Google Scholar

N. HagenM. KupinskiE. L. Dereniak,

“Gaussian profile estimation in one dimension,”

Appl. Opt., 46

(22), 5374

–5383

(2007). http://dx.doi.org/10.1364/AO.46.005374 APOPAI 0003-6935 Google Scholar

A. NoheN. O. Petersen,

“Image correlation spectroscopy,”

Sci. STKE, 2007

(417), l7

–33

(2007). http://dx.doi.org/10.1126/stke.4172007pl7 Google Scholar

D. JukićR. Scitovski,

“Least squares fittingGaussian type curve,”

Appl. Math. Comput., 167

(1), 286

–298

(2005). http://dx.doi.org/10.1016/j.amc.2004.06.084 AMHCBQ 0096-3003 Google Scholar

|