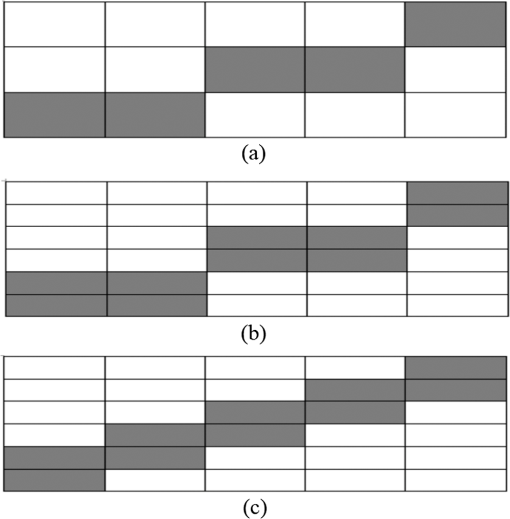

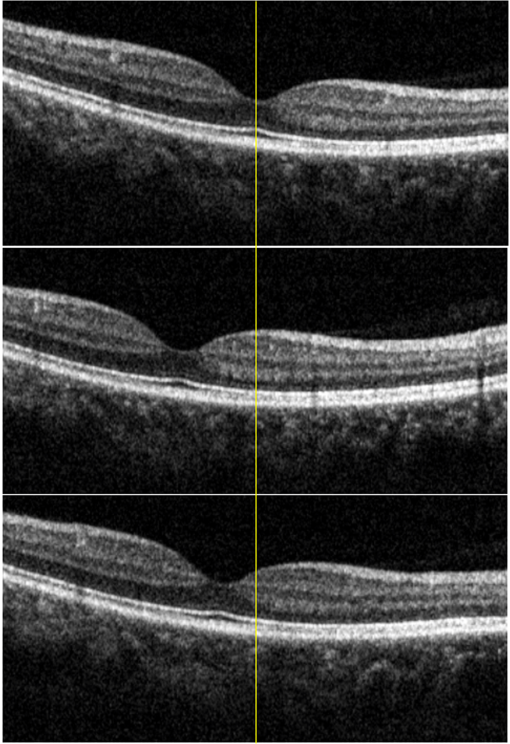

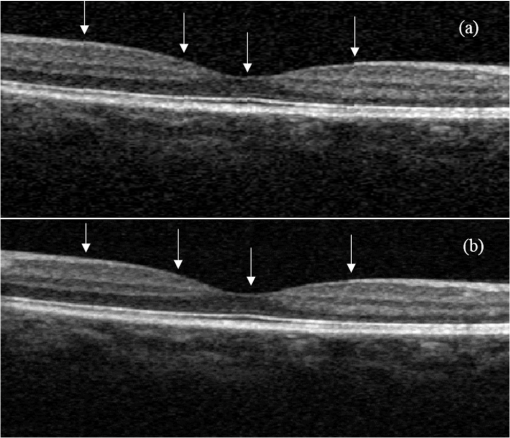

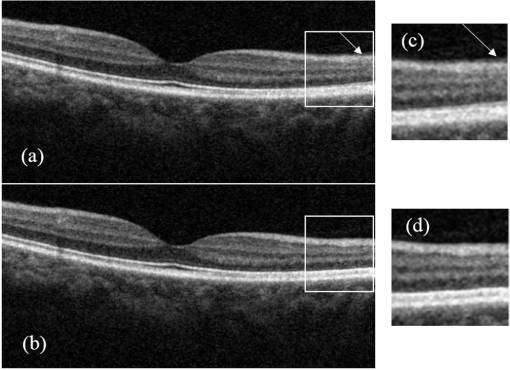

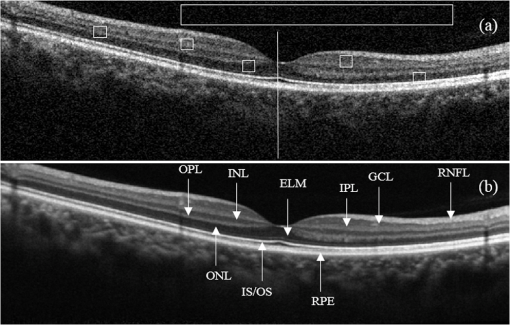

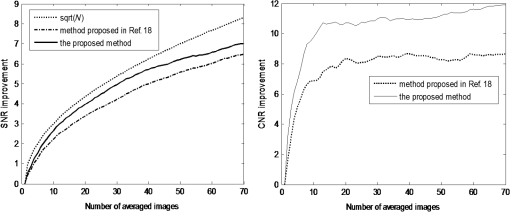

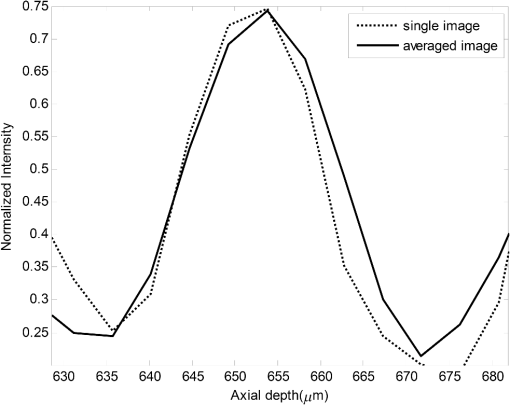

1. Introduction

Optical coherence tomography (OCT) is a noninvasive, high-resolution imaging technology that has been widely applied to probe the microstructure of biological tissue [1–3]. Human retinal imaging is the most successful clinical application of OCT. OCT has greatly improved the early diagnosis of retinal diseases and is highly valuable in the diagnosis of macular holes, cystoid macular edema, diabetic retinopathy, and age-related macular degeneration. Because OCT is based on the principle of low-coherence interferometry, its imaging results are sensitive to speckle noise (i.e., signal-degrading speckle) [4,5], which reduces the quality and blurs the feature details of the obtained images [6,7].

Many speckle noise reduction techniques, both hardware-based and software-based, have been proposed. Hardware-based methods aim to acquire multiple tomograms with uncorrelated speckle patterns. Uncorrelated speckle patterns can be obtained with different angles, positions, and frequencies or by compressing the sample, as in angular compounding [8], spatial compounding [9], frequency compounding [10], and strain compounding [11]. However, these hardware-based methods require additional optical components or modification of the sample arm, and they cannot be easily applied to a commercial OCT system.

Software-based methods are postprocessing techniques used after an image is acquired. They reduce speckle either by applying some form of smoothing filter to an image or by averaging multiple uncorrelated images. One problem of these methods is that they may reduce the resolution of the image. Because software-based methods do not change the optical configuration and can be directly applied to an OCT system, many image postprocessing methods have been proposed, such as median and Wiener filters [12], wavelet transformations [13,14], curvelet shrinkage [15,16], iterative sparse reconstruction [17], and averaging of multiple uncorrelated B-scans [18].

Under the B-scan averaging method, the signal-to-noise ratio (SNR) of the resulting image increases as the square root of the number of averaged B-scans if the speckle is uncorrelated between images [4]. During OCT retinal imaging, the human eye moves involuntarily, for example through nystagmus and microsaccades. Even during conscious visual fixation, "fixational eye movements" are unavoidable [19]. Such movements change the speckle patterns across a series of successively recorded images and decorrelate the speckle noise of the recorded retinal B-scans, so the speckle noise can be reduced by averaging these images. The main challenge of the multiple uncorrelated B-scans averaging method is aligning the recorded B-scans to compensate for sample motion. Any misalignment reduces the spatial resolution of the averaged image and affects the identification of clinical features.

Image registration accuracy can be improved by combination with a real-time eye tracking system [20]. However, this additional system can only compensate for lateral displacements, and it increases scanning times. Jorgensen and Thomadsen [18] proposed an OCT image registration method based on the regularized shortest path algorithm with an axial-to-lateral registration iterative scheme. However, this method cannot align images with large lateral displacements [21] and cannot correct for rotation. Alonso-Caneiro et al. [22] introduced a registration method based on an affine-motion model, which can account for higher degrees of image transformation. However, its computational complexity is high, so it may be unsuitable for real-time operation. Antony et al. [23] presented a registration method in which the surface between the inner and outer segments of the photoreceptor cells is used with two-stage thin-plate splines to correct for axial artifacts.
Chen et al. [24] introduced a method that uses the top and bottom boundaries of the retina to aid registration, with a deformable axial registration based on one-dimensional (1-D) radial basis functions. Both of these methods require a segmentation of the B-scan image to guide the registration, so their registration accuracy is limited by the accuracy of the segmentation algorithm.

In this paper, we propose a two-step method for OCT image registration that combines global and local registrations. In the first step, we register the images based on the rigid transformation model. In the second step, a graph-based algorithm is used to find the optimal shifts along the axial direction of the individual A-scans in the image to align both scans. The results of this method in the imaging of the human retina are discussed in this paper.

2. Principles

For two given images, where one is a reference image $I_r$ and the other is a warped image $I_w$, the main registration task is to determine an optimal spatial transformation model $T$ such that the reference image and the transformed warped image are as similar as possible. $T$ relates the coordinates of the two images,

$$(x', y') = T(x, y),$$

where $(x, y)$ and $(x', y')$ are the coordinates of the reference and warped images, respectively. The transformation model can be classified as rigid, affine, projective, or nonlinear [25]. Each transformation model is defined by a set of real parameters $\mathbf{p}$. Only when the number of parameters is small, as in the rigid or affine transformation model, can the transformation model be found by optimizing a similarity measure. Because of the complex motion of the human eye, local displacements usually occur among B-scan images. Large displacements can be corrected through global registration, but local displacements caused by nonlinear distortion cannot be corrected by the rigid or affine transformation model of global registration alone. Our method therefore performs registration in two steps.
The first step corrects the overall large displacements through global registration, and the second step corrects local displacements by determining the optimal translation for each A-scan in the image.

2.1. Global Registration

Global registration involves shifting the warped image, evaluating the similarity between the reference and warped images at each shift position, and selecting the shift position at which this similarity is maximal. The content of the warped image is adapted by a transformation model. The rigid transformation model is defined by three parameters $\mathbf{p}_r = (t_x, t_y, \theta)$:

$$\begin{pmatrix} x' \\ y' \end{pmatrix} = \begin{pmatrix} \cos\theta & -\sin\theta \\ \sin\theta & \cos\theta \end{pmatrix} \begin{pmatrix} x \\ y \end{pmatrix} + \begin{pmatrix} t_x \\ t_y \end{pmatrix},$$

and the affine transformation model is defined by six parameters $\mathbf{p}_a = (a_1, \ldots, a_6)$:

$$\begin{pmatrix} x' \\ y' \end{pmatrix} = \begin{pmatrix} a_1 & a_2 \\ a_4 & a_5 \end{pmatrix} \begin{pmatrix} x \\ y \end{pmatrix} + \begin{pmatrix} a_3 \\ a_6 \end{pmatrix}.$$

$\mathbf{p}_r$ and $\mathbf{p}_a$ are the motion parameters that define the rigid transformation model and the affine transformation model, respectively. The problem of image registration is to find the parameters (i.e., $\mathbf{p}_r$ or $\mathbf{p}_a$) defining the transformation model. Registration based on the rigid transformation model involves only translation and rotation, whereas registration based on the affine transformation model also includes scaling and shearing. Successive B-scans do not differ in image scale, and shearing can be corrected through local registration in the second step. Furthermore, the affine transformation model has more parameters than the rigid transformation model, and more parameters mean more time consumed during registration. Hence, the rigid transformation model is used during global registration. After the transformation model is selected, a suitable cost function, which directly affects the performance of the registration algorithm, is required to determine the similarity between the reference and warped images.
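As an illustration of the two models discussed above, the following is a minimal Python sketch of how a rigid and an affine transformation act on a single coordinate. The function names and the parameter ordering `(a1, ..., a6)` are assumptions made for this example and are not taken from the paper. The sketch shows that a rigid transform is a special case of an affine transform with only three free parameters.

```python
import math

def rigid_transform(x, y, tx, ty, theta):
    """Rotate (x, y) by theta radians about the origin, then translate by (tx, ty)."""
    c, s = math.cos(theta), math.sin(theta)
    return (c * x - s * y + tx, s * x + c * y + ty)

def affine_transform(x, y, a):
    """General six-parameter affine map; a = (a1, a2, a3, a4, a5, a6) (hypothetical ordering)."""
    a1, a2, a3, a4, a5, a6 = a
    return (a1 * x + a2 * y + a3, a4 * x + a5 * y + a6)

def rigid_as_affine(tx, ty, theta):
    """Express a rigid transform with the six affine parameters."""
    c, s = math.cos(theta), math.sin(theta)
    return (c, -s, tx, s, c, ty)

# Usage: both calls describe the same mapping of the point (3, 4).
p = rigid_transform(3.0, 4.0, 2.0, -1.0, 0.3)
q = affine_transform(3.0, 4.0, rigid_as_affine(2.0, -1.0, 0.3))
```

Because the rigid model constrains the 2x2 matrix to a pure rotation, distances between points are preserved, which matches the observation that successive B-scans do not differ in scale.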
Various cost functions have been introduced previously [25]. Here, the cross-correlation coefficient is selected as the cost function that determines the similarity between the reference image $I_r$ and the warped image $I_w$, because it performs better when the images are noisy. The cross-correlation coefficient is given by

$$CC(\mathbf{p}) = \frac{\sum_{x=1}^{N} \sum_{y=1}^{M} \left[ I_r(x, y) - \bar{I}_r \right] \left[ \tilde{I}_w(x, y) - \bar{I}_w \right]}{\sqrt{\sum_{x=1}^{N} \sum_{y=1}^{M} \left[ I_r(x, y) - \bar{I}_r \right]^2 \sum_{x=1}^{N} \sum_{y=1}^{M} \left[ \tilde{I}_w(x, y) - \bar{I}_w \right]^2}},$$

where $\tilde{I}_w(x, y) = I_w(T_{\mathbf{p}}(x, y))$ is the transformed warped image, $\mathbf{p}$ is the set of parameters of the transformation model, $M \times N$ is the image size, and $\bar{I}_r$ and $\bar{I}_w$ are the grayscale averages of the reference and warped images, respectively. The cross-correlation coefficient varies between $-1$ and 1. A larger coefficient means more similarity between the reference and warped images. The parameter set of the optimal transformation model, which best aligns the images, is determined by maximizing the cross-correlation coefficient:

$$\hat{\mathbf{p}} = \arg\max_{\mathbf{p}} CC(\mathbf{p}).$$

Powell's multidimensional method and the 1-D golden section search algorithm are used to seek the maximum value of $CC(\mathbf{p})$ [26]. The optimal transformation model transforms the warped image into a new image, which is the result of global registration.

2.2. Local Registration

Local displacements are corrected by determining axial shifts at each corresponding A-scan in the B-scan images. In local registration, we are concerned with axial local shifts because the retinal images have an inherent horizontal structure, and the lateral local shifts are not obvious after global registration. A graph-based algorithm is applied to find the optimal relative shifts of the individual A-scans between the reference and warped images. The cost image used to construct the graph is based on the cross-correlation between corresponding A-scans in both images at different shifts. Given the presence of speckle noise and other noise, the relative shifts obtained by simply searching for the maximum cross-correlation are not always the optimum registration values.
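The per-column cost described above can be sketched in plain Python as follows. This is a minimal illustration, not the authors' implementation: the helper names, the zero padding used when shifting an A-scan, and the symmetric shift range `[-max_shift, max_shift]` are all assumptions made for the example.

```python
import math

def cross_correlation(a, b):
    """Zero-mean normalized cross-correlation coefficient of two equal-length sequences."""
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    num = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    den = math.sqrt(sum((x - mean_a) ** 2 for x in a) *
                    sum((y - mean_b) ** 2 for y in b))
    return num / den if den > 0 else 0.0

def shift_column(col, d, fill=0.0):
    """Shift an A-scan axially by d pixels (positive = downward), padding with `fill`."""
    m = len(col)
    out = [fill] * m
    for i, value in enumerate(col):
        k = i + d
        if 0 <= k < m:
            out[k] = value
    return out

def column_costs(ref_col, warped_col, max_shift):
    """Negative cross-correlation for every candidate shift in [-max_shift, max_shift]."""
    return {d: -cross_correlation(ref_col, shift_column(warped_col, d))
            for d in range(-max_shift, max_shift + 1)}

# Usage: the warped A-scan is the reference displaced two pixels downward.
ref = [0.0, 0.0, 1.0, 5.0, 1.0, 0.0, 0.0, 0.0]
warped = shift_column(ref, 2)
costs = column_costs(ref, warped, 3)
best_shift = min(costs, key=costs.get)
```

In this noise-free toy case the lowest cost directly recovers the displacement. With speckle noise the per-column minimum can be misleading, which is why the shifts of neighboring columns are considered jointly.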
Considering the spatial continuity of the retinal structure, the axial shifts of adjacent A-scans must vary continuously and smoothly. We therefore take the relative shifts of adjacent A-scans into account when finding the optimal relative shift of each A-scan. The graph is formed by calculating the negative cross-correlation for the possible shifts of each corresponding column in the reference image $I_r$ and warped image $I_w$. The size of the images is $M \times N$, where $M$ is the number of rows and $N$ is the number of columns of each image. The cost image $C(d, j)$ of the $j$'th column is the negative cross-correlation between the $j$'th columns of $I_r$ and $I_w$ when the latter is shifted axially by $d$, where $d$ is the relative shift of the $j$'th column and is restricted to a bounded range of candidate shifts. The graph search method finds the cost path $\{d_j\}$ in the cost image for which the total negative cross-correlation value, $E = \sum_{j=1}^{N} C(d_j, j)$, is minimal.

We do not allow large jumps in the found path, so a penalty term for roughness is added to the cost path. The roughness $R$ of the cost path, defined in Eq. (9), penalizes jumps in axial shift between adjacent columns. The new cost path is

$$E' = E + \lambda R,$$

where $\lambda$ is a regularization constant. When $E'$ is minimal, the axial shifts of each column are obtained. Although the penalty term in Eq. (9) is minimized and the cost path is smooth according to this definition, the path is not truly smooth, because its smoothness is limited by the discrete spatial nature of the digital image.

To improve the smoothness of the path, a method based on pixel subdivision is proposed. Figure 1 shows the effect of pixel subdivision. Figure 1(a) shows a simplified path composed of a series of gray boxes. The row of the gray box in each column indicates the axial shift $d_j$. According to Eq. (9), the roughness of the path in Fig. 1(a) is nonzero. Pixels in the vertical direction subdivided by the subdivision factor $s$ are shown in Fig. 1(b). The new path obtained after pixel subdivision is shown in Fig. 1(c), and the roughness of the new path is zero.
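The penalized path search described above can be sketched as a dynamic program over the cost image. This is a minimal sketch under a loudly stated assumption: the roughness is taken here to be the sum of absolute differences between the shift indices of adjacent columns, which may differ from the exact form of Eq. (9). The function name and the list-of-lists layout `cost[j][k]` (column `j`, shift index `k`) are also hypothetical.

```python
def regularized_shortest_path(cost, lam):
    """Return one shift index per column minimizing
    sum_j cost[j][k_j] + lam * sum_j |k_j - k_(j-1)|  (assumed roughness penalty)."""
    n_cols = len(cost)
    n_shifts = len(cost[0])
    total = list(cost[0])      # best accumulated cost ending at each shift index
    back = []                  # back-pointers, one list per column after the first
    for j in range(1, n_cols):
        new_total, pointers = [], []
        for k in range(n_shifts):
            # Cheapest predecessor, including the jump penalty from p to k.
            best_prev = min(range(n_shifts),
                            key=lambda p: total[p] + lam * abs(k - p))
            new_total.append(cost[j][k] + total[best_prev] + lam * abs(k - best_prev))
            pointers.append(best_prev)
        total = new_total
        back.append(pointers)
    # Trace the optimal path back from the cheapest final state.
    k = min(range(n_shifts), key=lambda i: total[i])
    path = [k]
    for pointers in reversed(back):
        k = pointers[k]
        path.append(k)
    path.reverse()
    return path

# Usage: column 2 weakly prefers a different shift index than its neighbors.
cost = [[0.0, 1.0, 1.0], [0.0, 1.0, 1.0], [1.0, 1.0, 0.9], [0.0, 1.0, 1.0]]
unregularized = regularized_shortest_path(cost, lam=0.0)
regularized = regularized_shortest_path(cost, lam=1.0)
```

With `lam=0.0` each column simply takes its own minimum, so the outlier column jumps away from its neighbors. With a positive `lam` the jump is suppressed. A shift index `k` would map to an axial shift `d = k - max_shift` when the candidate range is symmetric. This direct formulation costs on the order of the number of columns times the square of the number of candidate shifts.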
The basic idea of pixel subdivision is to divide each pixel axially (vertically) into $s$ subpixels, where $s$ is the subdivision factor, and then to identify every column whose axial shift differs from that of the closest column to its left ($d_j \neq d_{j-1}$). Finally, shifts are allocated to the pixels to the left of that column. Because the resulting new axial shifts of each column are not always integers, the new gray value of each pixel must be calculated by interpolation.

3. Experiments and Results

The algorithm was tested on an independent dataset of retinal OCT images centered on the macula, taken from 8 healthy subjects and 13 patients, with 70 successive B-scans per subject. The OPKO Spectral OCT/SLO instrument was used to record the OCT data. The center wavelength of the light source is 830 nm with a bandwidth of 20 nm. The proposed image registration method was used to align the 70 successive B-scans. Figure 2 shows an example of three consecutive B-scan images of the human retina. Displacements in retinal structure among the images are highly apparent. Large displacements are more likely to occur if the patient has problems fixating on a spot. Large displacements between B-scan images are corrected via global registration. The average of two B-scan images after global registration is shown in Fig. 4(a), where the arrow marks the remaining local displacements between the two B-scans.

Figure 3(a) shows a B-scan image obtained through the graph-based algorithm during local registration. The smoothness of the path is limited by the discrete spatial nature of the digital image. This appears as "break" phenomena at pairs of adjacent columns whose relative axial shifts differ. The "break" phenomena are marked by arrows in Fig. 3(a). They cause discontinuities in the layered retinal structure and influence estimates of the thickness of each retinal layer in the averaged image.
The layered retinal structure becomes smoother after pixel subdivision, as shown in Fig. 3(b). Figure 4(b) shows the average image of the two B-scans after local registration. Figures 4(c) and 4(d), respectively, show the magnified images of the rectangular boxes in Figs. 4(a) and 4(b). The layered retinal structure is blurred in Fig. 4(c) but not in Fig. 4(d): local displacements are compensated and the images are aligned after local registration.

Fig. 3 (a) Optical coherence tomography (OCT) image obtained through the regularized shortest path algorithm during local registration; the arrows denote "break" phenomena. (b) Image obtained through pixel subdivision.

Fig. 4 (a) Average of two images obtained after global registration; the arrow denotes the local displacement of the two images. (b) Average of two images obtained after global and local registrations. (c) and (d) show the magnified images of the rectangular boxes in (a) and (b), respectively.

The computational cost of the local registration algorithm grows with the value range of the axial shift $d$. Given that nearly all the local displacements are less than 10 pixels, we can limit the value range of $d$ to improve the efficiency of the algorithm. Here, the range was restricted accordingly to reduce computational costs (the number of rows $M$ is usually much larger than 10).

A single B-scan from a healthy subject is shown in Fig. 5(a), and the final aligned average of 30 B-scans is shown in Fig. 5(b). The structure of the retinal layers is shown more clearly in the averaged image. To assess the performance of the proposed method, we used the signal-to-noise ratio (SNR) and contrast-to-noise ratio (CNR) to measure the image quality quantitatively. The SNR is defined as in Ref. [8] in terms of $\mu_b$ and $\sigma_b$, the mean and standard deviation of the background region in the image. The CNR is defined as in Ref. [15] in terms of $\mu_r$ and $\sigma_r$, the mean and standard deviation of the $r$'th region of interest (ROI); the ROIs contain the OCT signal of the image.
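To make the role of the region statistics concrete, the following Python sketch computes a CNR from ROI and background rectangles. It assumes the commonly used form $\mathrm{CNR} = \frac{1}{R} \sum_{r=1}^{R} (\mu_r - \mu_b) / \sqrt{\sigma_r^2 + \sigma_b^2}$, which may not match the exact expression used in the paper. The function names, the `(top, left, height, width)` rectangle convention, and the toy image are hypothetical.

```python
import math
from statistics import mean, pstdev

def region_stats(image, top, left, height, width):
    """Mean and (population) standard deviation of a rectangular region of a 2-D list."""
    pixels = [image[r][c]
              for r in range(top, top + height)
              for c in range(left, left + width)]
    return mean(pixels), pstdev(pixels)

def cnr(roi_stats, background_stats):
    """Average contrast-to-noise ratio over ROIs (assumed definition, see lead-in).

    Assumes that at least one of the two standard deviations in each term is nonzero."""
    mu_b, sigma_b = background_stats
    terms = [(mu_r - mu_b) / math.sqrt(sigma_r ** 2 + sigma_b ** 2)
             for mu_r, sigma_r in roi_stats]
    return sum(terms) / len(terms)

# Usage on a toy 4x4 image: rows 0-1 act as background, rows 2-3 as a single ROI.
image = [[0, 2, 0, 2],
         [2, 0, 2, 0],
         [9, 11, 9, 11],
         [11, 9, 11, 9]]
background = region_stats(image, 0, 0, 2, 4)
roi = region_stats(image, 2, 0, 2, 4)
value = cnr([roi], background)
```

Averaging several B-scans would be expected to shrink both standard deviations while leaving the means roughly unchanged, which is how averaging raises a ratio of this form.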
We manually selected five small rectangular regions as ROIs and a large rectangle as the background region, as shown in Fig. 5(a).

Fig. 5 OCT B-scan images of the human retina from a healthy subject: (a) single B-scan and (b) average image obtained after global and local image registrations. NFL: nerve fiber layer; GCL: ganglion cell layer; IPL: inner plexiform layer; INL: inner nuclear layer; OPL: outer plexiform layer; ONL: outer nuclear layer; ELM: external limiting membrane; IS/OS: inner and outer segment junctions of the photoreceptor; and RPE: retinal pigmented epithelium.

Figure 6 shows the improvements in SNR and CNR as functions of the number of averaged images. The method proposed in this study performs better than that proposed in Ref. [18] in terms of both SNR and CNR. The SNR improvement of our method closely follows the square root of the number of averaged images. A CNR improvement factor of around 11 is obtained when the number of averaged B-scan images is around 15. The CNR does not improve appreciably when more than 15 images are used, as shown in the figure.

Fig. 6 Improvements in signal-to-noise ratio (SNR) and contrast-to-noise ratio (CNR) as functions of the number of averaged images.

To assess the loss of spatial resolution introduced by our technique, we compared the profile of the inner and outer segment junctions (IS/OS) layer taken from a single A-scan of a single image with that of an averaged image. Figure 7 presents a region of the IS/OS layer taken from a single A-scan at the foveal region, marked by the white line in Fig. 5(a). We measured the full-width-at-half-maximum of the profile and found that it broadens only slightly in the average of 30 B-scans. The loss of spatial resolution in the proposed technique is thus very small compared with the resolution of the system.
Although there is a slight loss of spatial resolution in the averaged image, the reduction of noise makes the structure of the retinal layers clearer [Fig. 5(b)].

Fig. 7 The IS/OS layer taken from a single A-scan at the foveal region [marked by the white line in Fig. 5(a)] of a single image and of the averaged image.

4. Conclusions

A method for speckle reduction in OCT that combines global and local image registrations has been proposed in this paper. The method does not rely on any information about the retinal layer boundaries and is able to correct translation, rotation, and local deformation in the axial direction. The experimental results showed that the proposed method is an efficient way to align successive images and provides speckle reduction. An SNR improvement that closely follows the square root of the number of averaged images and a CNR improvement of around 11 were obtained. The application of this method to align and average OCT images leads to improvements in image quality, which could be beneficial for the identification of retinal structures and clinical diagnosis.

Acknowledgments

This work was supported by the Science and Technology Commission of Shanghai Municipality (13441900500) and the National Natural Science Foundation of China (61205102 and 61275207).

References

1. D. Huang et al., “Optical coherence tomography,” Science 254(5035), 1178–1181 (1991). http://dx.doi.org/10.1126/science.1957169

2. J. G. Fujimoto, “Optical coherence tomography for ultrahigh resolution in vivo imaging,” Nat. Biotechnol. 21(11), 1361–1367 (2003). http://dx.doi.org/10.1038/nbt892
3. M. Wojtkowski, “High-speed optical coherence tomography: basics and applications,” Appl. Opt. 49(16), D30–D61 (2010). http://dx.doi.org/10.1364/AO.49.000D30
4. J. M. Schmitt, S. H. Xiang and K. M. Yung, “Speckle in optical coherence tomography,” J. Biomed. Opt. 4(1), 95–105 (1999). http://dx.doi.org/10.1117/1.429925
5. B. Karamata et al., “Speckle statistics in optical coherence tomography,” J. Opt. Soc. Am. A 22(4), 593–596 (2005). http://dx.doi.org/10.1364/JOSAA.22.000593
6. R. J. Zawadzki et al., “Ultrahigh-resolution optical coherence tomography with monochromatic and chromatic aberration correction,” Opt. Express 16(11), 8126–8143 (2008). http://dx.doi.org/10.1364/OE.16.008126
7. Y. T. Pan et al., “Subcellular imaging of epithelium with time-lapse optical coherence tomography,” J. Biomed. Opt. 12(5), 050504 (2007). http://dx.doi.org/10.1117/1.2800007
8. A. E. Desjardins et al., “Angle-resolved optical coherence tomography with sequential angular selectivity for speckle reduction,” Opt. Express 15(10), 6200–6209 (2007). http://dx.doi.org/10.1364/OE.15.006200
9. B. J. Huang et al., “Speckle reduction in parallel optical coherence tomography by spatial compounding,” Opt. Laser Technol. 45, 69–73 (2013). http://dx.doi.org/10.1016/j.optlastec.2012.07.031
10. M. Pircher et al., “Speckle reduction in optical coherence tomography by frequency compounding,” J. Biomed. Opt. 8(3), 565–569 (2003). http://dx.doi.org/10.1117/1.1578087
11. B. F. Kennedy et al., “Speckle reduction in optical coherence tomography by strain compounding,” Opt. Lett. 35(14), 2445–2447 (2010). http://dx.doi.org/10.1364/OL.35.002445
12. A. Ozcan et al., “Speckle reduction in optical coherence tomography images using digital filtering,” J. Opt. Soc. Am. A 24(7), 1901–1910 (2007). http://dx.doi.org/10.1364/JOSAA.24.001901
13. D. C. Adler, T. H. Ko and J. G. Fujimoto, “Speckle reduction in optical coherence tomography images by use of a spatially adaptive wavelet filter,” Opt. Lett. 29(24), 2878–2880 (2004). http://dx.doi.org/10.1364/OL.29.002878
14. M. A. Mayer et al., “Wavelet denoising of multiframe optical coherence tomography data,” Biomed. Opt. Express 3(3), 572–589 (2012). http://dx.doi.org/10.1364/BOE.3.000572
15. Z. P. Jian et al., “Speckle attenuation in optical coherence tomography by curvelet shrinkage,” Opt. Lett. 34(10), 1516–1518 (2009). http://dx.doi.org/10.1364/OL.34.001516
16. Z. P. Jian et al., “Three-dimensional speckle suppression in optical coherence tomography based on the curvelet transform,” Opt. Express 18(2), 1024–1032 (2010). http://dx.doi.org/10.1364/OE.18.001024
17. X. Liu and J. U. Kang, “Iterative sparse reconstruction of spectral domain OCT signal,” Chin. Opt. Lett. 12(5), 051701 (2014). http://dx.doi.org/10.3788/COL
18. T. M. Jorgensen and J. Thomadsen, “Enhancing the signal-to-noise ratio in ophthalmic optical coherence tomography by image registration—method and clinical examples,” J. Biomed. Opt. 12(4), 041208 (2007). http://dx.doi.org/10.1117/1.2772879
19. S. Martinez-Conde, S. L. Macknik and D. H. Hubel, “The role of fixational eye movements in visual perception,” Nat. Rev. Neurosci. 5(3), 229–240 (2004). http://dx.doi.org/10.1038/nrn1348
20. M. Sugita et al., “Motion artifact and speckle noise reduction in polarization sensitive optical coherence tomography by retinal tracking,” Biomed. Opt. Express 5(1), 106–122 (2014). http://dx.doi.org/10.1364/BOE.5.000106
21. M. Szkulmowski et al., “Efficient reduction of speckle noise in optical coherence tomography,” Opt. Express 20(2), 1337–1359 (2012). http://dx.doi.org/10.1364/OE.20.001337
22. D. Alonso-Caneiro, S. A. Read and M. J. Collins, “Speckle reduction in optical coherence tomography imaging by affine-motion image registration,” J. Biomed. Opt. 16(11), 116027 (2011). http://dx.doi.org/10.1117/1.3652713
23. B. Antony et al., “Automated 3-D method for the correction of axial artifacts in spectral-domain optical coherence tomography images,” Biomed. Opt. Express 2, 2403–2416 (2011). http://dx.doi.org/10.1364/BOE.2.002403
24. M. Chen et al., “Analysis of macular OCT images using deformable registration,” Biomed. Opt. Express 5(7), 2196–2214 (2014). http://dx.doi.org/10.1364/BOE.5.002196
25. A. A. Goshtasby, 2-D and 3-D Image Registration: For Medical, Remote Sensing, and Industrial Applications, Wiley, Hoboken, New Jersey (2005).
26. J. Nocedal and S. Wright, Numerical Optimization, Springer, New York (2006).

Biography

Hang Zhang is an MS student at Shanghai Institute of Optics and Fine Mechanics, Chinese Academy of Sciences. He received his BE degree in optoelectronic information engineering from Huazhong University of Science and Technology, China, in 2012. His research interests include biomedical optical imaging and image-processing techniques.

Zhongliang Li received his BE degree in 2004 from Nankai University, China, and his PhD degree in 2009 from Shanghai Institute of Optics and Fine Mechanics, Chinese Academy of Sciences. He is an associate professor of optical engineering at Shanghai Institute of Optics and Fine Mechanics, Chinese Academy of Sciences. His research interests include biomedical optical imaging and optical measurement.

Xiangzhao Wang received his BE degree in electrical engineering from Dalian University of Science and Technology, China, in 1982, and his ME and DrEng degrees in electrical engineering from Niigata University, Japan, in 1992 and 1995, respectively. He is a professor of optical engineering at Shanghai Institute of Optics and Fine Mechanics, Chinese Academy of Sciences. His research interests include optical metrology and optical information processing.

Xiangyang Zhang received his PhD degree in 2004 from Shanghai Institute of Optics and Fine Mechanics, Chinese Academy of Sciences, and later became an associate professor in the School of Science, Jiangnan University. He completed postdoctoral research at Southern California University. His research interests include biomedical optical imaging, optical measurement, and quantum optics.