1. Introduction

The World Health Organization (WHO) estimated that in 2010 there were 285 million people worldwide with visual impairments, of whom 39 million suffered blindness.1 Diabetic retinopathy (DR) and glaucoma are among the dominant silent ocular diseases causing the increasing, yet potentially avoidable, vision problems. In 2010, an estimated 93 million (Ref. 2) and 60.5 million (Ref. 3) people worldwide had DR and glaucoma, respectively. Silent diseases have mild, slowly progressing early symptoms that usually pass unnoticed by patients. Patients commonly become aware of these silent diseases in their late stages, after severe vision damage has already occurred. In their early stages, the medical treatment of silent ocular diseases is relatively simple and can help prevent disease progression. If left untreated, pathological complications occur, leading to severe visual impairments that require complex and mostly ineffective treatment procedures.4 Accordingly, the early detection and proper treatment of silent ocular disease symptoms are necessary to limit the growth of avoidable visual impairments worldwide. For example, studies have shown that early detection of DR by periodic screening reduces the risk of blindness by about 50%.5 Early disease detection requires frequent medical inspection of millions of candidate patients, such as those with a family history of glaucoma or patients with diabetes. A recent study also found a strong relation between chronic kidney diseases and ophthalmic diseases causing treatable visual impairments. This study strongly recommended eye screening for all patients with reduced kidney function.6 The manual screening of such a large population places an enormous burden on ophthalmologists. Moreover, manual screening can be limited by the low ratio of ophthalmologists to patients, specifically in developing countries and rural areas. Automatic retinal screening systems (ARSS) can help overcome these limitations.
ARSS capture and analyze retinal images without the need for human intervention. Based on the automated diagnosis, a subject is advised to consult an ophthalmologist if disease symptoms are detected. Substantial research efforts were put into the development of ARSS; however, their performance was found to be strongly reliant on the quality of the processed retinal images.7,8 Generally, medically suitable retinal images are characterized by two main aspects: image clarity and image content.

Retinal images are acquired using digital fundus cameras that capture the illumination reflected from the retinal surface. Studies have shown that the percentage of retinal images unsuitable for ARSS is about 10% and 20.8% for dilated and nondilated pupils, respectively.7 Medically unsuitable retinal images can result from several factors including inadequate illumination, poor focus, the naturally curved structure of the retina, variation in pupil dilation and pigmentation among patients, and patient movement or blinking.10–12 The processing of poor quality images could result in an image recapture request by the ophthalmologist, which costs both money and time. In a worse scenario, analysis of medically unsuitable retinal images by the ARSS can cause a diseased eye to be falsely diagnosed as healthy, leading to delayed treatment. Consequently, retinal image quality assessment (RIQA) is a crucial preliminary step to assure the reliability of ARSS. In this work, an RIQA algorithm suitable for ARSS is proposed that considers various clarity and content quality issues in retinal images.

The rest of this paper is organized as follows: Section 2 gives a comprehensive survey of the previous work in quality assessment considering both retinal image clarity and content. Section 3 summarizes the details of the datasets used for the analysis and testing of the proposed algorithm. In Sec. 4, the suggested feature sets addressing sharpness, illumination, homogeneity, field definition, and outliers are introduced. Then, in Sec. 5, each of the five quality feature sets is first validated individually. Next, all the feature sets are used to create an overall quality feature vector that is tested using a dataset with a wide range of quality issues. Section 6 includes the discussion and analyses of each of the presented feature sets and the overall quality algorithm. Finally, conclusions are drawn in Sec. 7.
2. Literature Review

In the field of retinal image processing, most research efforts have been directed toward the development of segmentation, enhancement, and disease diagnosis techniques. Recently, studies have shown that the performance of these algorithms relies significantly on the quality of the processed retinal image.8 Poor image clarity or missing retinal structures can render the image inadequate for medical diagnosis. In this section, a survey and categorization of clarity and content-based RIQA methods are provided.

2.1. Retinal Image Clarity Assessment Review

Retinal image clarity assessment techniques can be generally divided into spatial and transform-based techniques, depending on the domain from which the quality features are calculated. Although spatial RIQA techniques were more widely adopted in the literature, several transform-based algorithms have recently been introduced and shown to be highly effective in RIQA.

2.1.1. Spatial retinal image quality assessment

Spatial RIQA techniques can be further divided into generic and segmentation-based approaches. Generic RIQA approaches rely on general features that do not take into account the retinal structures within the images. In the early literature, Lee and Wang13 compared the test image’s histogram to a template histogram created from a group of high-quality retinal images for quality assessment. Shortly after, Lalonde et al.14 argued that good quality retinal images do not necessarily have similar histograms and proposed using template edge-histograms instead. Nevertheless, both these approaches depended on templates created from a small set of excellent retinal images, which do not sufficiently consider the natural variance in retinal images. Recently, generic approaches have evolved to use a combination of sharpness, statistical, and textural features15–17 to evaluate image sharpness and illumination.
Segmentation or structural-based RIQA approaches define high-quality retinal images as those having adequately separable anatomical structures. Among the distinct works is that presented by Niemeijer et al.18 who introduced image structure clustering. Retinal images were segmented into five structural clusters including dark and bright background regions, high contrast regions, vessels, and the optic disc (OD). A feature vector was then constructed from these clusters to be used for retinal image quality classification. More commonly, segmentation-based approaches relied solely on blood vessel information from either the entire image19–22 or the macular region.23–25 The latter approaches argued that macular vessels are more diagnostically important as well as being the first to be affected by slight image degradation.24 Nevertheless, some retinal images were found to be of sufficient quality although their macular vessels were too thin or unobservable.26 Generic methods have the advantage of being simple while giving reliable results. However, they have the disadvantages of completely ignoring retinal structure information as well as becoming computationally expensive when textural features are employed.27 Segmentation methods are usually more complex and can be prone to errors when dealing with poor quality images.28 However, Fleming et al.29 have shown that both generic and segmentation-based features were equally useful for RIQA. 
Recently, hybrid techniques that combine both generic and structural features have been adopted in the literature for RIQA.7,28,30 Moreover, an interesting emerging approach for sharpness assessment compares retinal images to their blurred versions based on the intuition that unsharp images will be more similar to their blurred versions.9,16,31

2.1.2. Transform domain retinal image quality assessment

Wavelet transform (WT) has been successfully utilized in several image processing applications including image compression, enhancement, segmentation, and face recognition. WT has also been widely used in general image quality assessment,32–36 yet only limited work has explored its application in RIQA. Multiresolution analysis performed with WT has the advantage of being localized in both time and frequency.37 WT decomposes images into horizontal (H), vertical (V), and diagonal (D) detail subbands along with an approximation (A) subband. Detail subbands include the image high-frequency information representing edges. The approximation subband carries the low-frequency information of the image. Further image decomposition can be performed by recursively applying WT to the approximation subband. Generally, there are two types of WTs: decimated wavelet transform (DecWT) and undecimated or stationary wavelet transform (UWT). DecWT is shift-variant and is more widely used as it is very efficient from the computational point of view.38 UWT is shift-invariant due to the elimination of the decimation step, making it more suitable for image enhancement algorithms.39 Although WT is not yet widely deployed in RIQA, both DecWT and UWT have been successfully applied in RIQA algorithms. In the context of full-reference RIQA, in which image quality is assessed through a comparison to a high-quality reference image, significant work was presented by Nirmala et al.40,41 Nirmala et al. 
introduced a wavelet-weighted full-reference distortion measure relying on extensive analysis of the relation between retinal structures and wavelet levels. In the context of no-reference RIQA, in which the quality of the test image is blindly assessed without the use of any reference image, several relevant studies have been introduced in the literature. Bartling et al.42 assessed retinal image quality by considering its sharpness and illumination. Image sharpness was evaluated by computing the mean of the upper quartile values of the matrix returned by the WT. This measure was calculated only for the nonoverlapping image blocks found to have relevant structural content. Katuwal et al.43 summed the detail subband coefficients of three UWT levels for blood vessel extraction. They assessed retinal image quality based on the density and symmetry of the extracted blood vessel structure. Veiga et al.26 used a wavelet-based focus measure defined as the mean value of the sum of detail coefficients along with moment and statistical measures. However, Veiga et al. performed only one level of wavelet decomposition, thus not fully exploiting the WT multiresolution potential. In the previous work by AbdelHamid et al.,44 decimated wavelet-based sharpness and contrast measures were introduced to evaluate retinal image quality. The proposed sharpness features were extracted from five wavelet levels. The significance of each level with respect to retinal image size and quality grade was explored using two datasets of different resolutions and blurring severity. In each case, the most relevant wavelet level was used for feature computation. The wavelet-based sharpness features were found to be efficient for RIQA regardless of image size or degree of blur. The algorithm’s short execution time made it well suited to real-time systems. Nevertheless, only image sharpness was considered for quality assessment.
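The difference between the shift-variant DecWT and the shift-invariant UWT discussed in this section can be illustrated on a one-dimensional toy signal. The sketch below is only an illustration under stated assumptions: it uses a one-level Haar wavelet with periodic extension (not the wavelet or the two-dimensional decomposition used in the works cited above), and the function names are hypothetical.

```python
import math

def haar_detail_decimated(signal):
    """One-level decimated Haar detail coefficients (one per non-overlapping pair)."""
    scale = 1 / math.sqrt(2)
    return [scale * (signal[i] - signal[i + 1]) for i in range(0, len(signal) - 1, 2)]

def haar_detail_undecimated(signal):
    """One-level undecimated Haar detail coefficients (one per sample, periodic extension)."""
    scale = 1 / math.sqrt(2)
    n = len(signal)
    return [scale * (signal[i] - signal[(i + 1) % n]) for i in range(n)]

def circular_shift(values, k):
    """Shift a list to the right by k positions, wrapping around."""
    k %= len(values)
    return values[-k:] + values[:-k] if k else list(values)

signal = [0, 0, 0, 0, 1, 1, 1, 1]     # toy profile with a single edge
shifted = circular_shift(signal, 1)   # same profile moved by one sample

# Decimated: the edge falls between pairs in one case and inside a pair in the other,
# so the coefficients of the shifted signal are not a shifted copy of the originals.
print(haar_detail_decimated(signal))
print(haar_detail_decimated(shifted))

# Undecimated: the coefficients of the shifted signal are exactly the shifted coefficients.
print(haar_detail_undecimated(shifted)
      == circular_shift(haar_detail_undecimated(signal), 1))
```

In this toy case the decimated transform misses the edge entirely for one alignment of the signal, whereas the undecimated transform responds identically wherever the edge lies, at the cost of producing as many coefficients as input samples.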
2.2. Content Literature Review

Several RIQA works considered retinal field definition and nonretinal image differentiation alongside clarity issues. For field definition evaluation, Fleming et al.23 presented a two-step algorithm in which the vessel arcades, OD, and the fovea were initially detected. Then, several distances related to these structures were measured to assess the image’s field of view (FOV). Other algorithms relied only on validating the presence of the OD,43 fovea,9 or both30 in their expected locations. As for outlier detection, Giancardo et al.19 and Sevik et al.30 used RGB information for the identification of outliers. Yin et al.31 used a two-stage approach to detect outlier images in which a bag of words approach first identified nonretinal images. Next, these nonretinal images were rechecked by comparing them to a set of retinal images using the structural similarity index measure.45

In this work, a no-reference RIQA algorithm intended for automated DR screening systems is proposed. The introduced algorithm evaluates five clarity and content features: sharpness, illumination, homogeneity, field definition, and outliers. Sharpness and illumination features are calculated from the UWT detail and approximation subbands, respectively. For homogeneity assessment, wavelet features are utilized in addition to features from the retinal saturation channel that is specifically designed for retinal images. Field definition and outlier evaluation are performed using the proposed sharpness/illumination features and color information, respectively. All proposed feature sets are separately validated using several datasets. Then, all the features are combined into a larger quality feature vector and used to classify a dataset having various quality degradation issues into good and bad quality images. Finally, the overall performance of the introduced RIQA algorithm is thoroughly analyzed and compared to other algorithms from the literature.
3. Materials

Many retinal image datasets are publicly available; however, only a few of them include quality information. In this work, images from five different publicly available datasets are used for the development and testing of the proposed quality features. The datasets were captured using different camera setups and have varying resolutions and diverse quality problems.

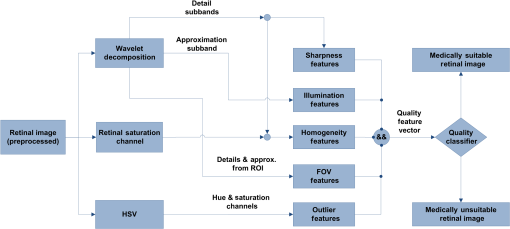

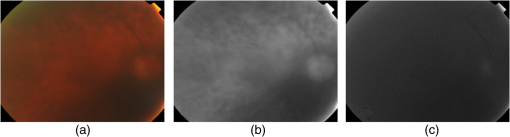

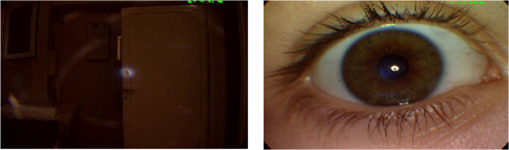

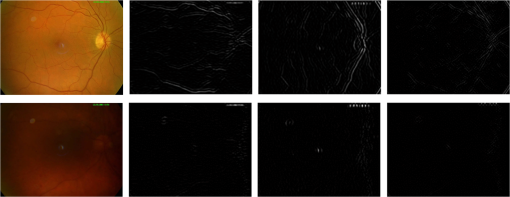

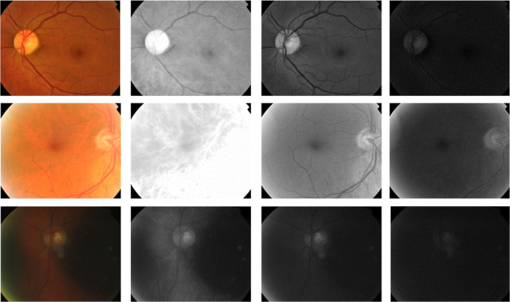

4. Methods

The proposed RIQA algorithm exploits the multiresolution characteristics of WT for the computation of several quality features to assess the sharpness, illumination, homogeneity, and field definition of the image. Furthermore, the retinal saturation channel and color information are used to calculate image homogeneity and outlier features, respectively. Finally, the five quality feature sets are concatenated to create the final quality feature vector that forms the input to a classifier for the overall image quality assessment. Generally, the scale of the wavelet subbands depends on image resolution. In order to ensure resolution consistency when computing wavelet-based features, images with different resolutions were resized to a common size. All image resizing in this work was performed using bicubic interpolation. The presented RIQA algorithm is summarized in Fig. 1 and will be thoroughly described in the subsequent sections.

4.1. Sharpness

Medically suitable retinal images must include sharp retinal structures to facilitate segmentation in automatic systems. Generally, sharp structures are equivalent to high-frequency image components. Wavelet decomposition can separate an image’s high-frequency information into its detail subbands. Thus, sharp retinal images would have more information content within their wavelet detail subbands, corresponding to larger absolute wavelet coefficients, compared to blurred images. Figure 2 shows the level 3 detail subbands of sharp and blurred retinal images. More retinal structure-related information appears in the different detail subbands of the sharp image in comparison to the amount of information within the blurred image’s detail subbands.

Fig. 2. Level 3 green channel detail subbands of sharp (first row) and blurred (second row) retinal images from DRIMDB.30 From left to right: color image, horizontal, vertical, diagonal subbands.
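The claim that blurring reduces the magnitude of the detail coefficients can be checked on a toy one-dimensional intensity profile. The following is a minimal sketch under several assumptions that are not taken from this work: a one-level undecimated Haar wavelet is used, a periodic moving average stands in for Gaussian blurring, the entropy is taken in the Shannon form -sum(c^2 ln c^2), and the profile itself is synthetic.

```python
import math
import statistics

def haar_detail(signal):
    """One-level undecimated Haar detail coefficients (periodic extension)."""
    scale = 1 / math.sqrt(2)
    n = len(signal)
    return [scale * (signal[i] - signal[(i + 1) % n]) for i in range(n)]

def box_blur(signal, width=5):
    """Periodic moving average; a simple stand-in for a Gaussian blur."""
    n, half = len(signal), width // 2
    return [sum(signal[(i + k) % n] for k in range(-half, half + 1)) / width
            for i in range(n)]

def subband_features(coeffs):
    """Mean absolute value, IQR, and a Shannon-type entropy of the coefficients."""
    q1, _, q3 = statistics.quantiles(coeffs, n=4)
    energies = [c * c for c in coeffs if c != 0.0]
    entropy = -sum(e * math.log(e) for e in energies)  # assumed entropy form
    return {"mean_abs": sum(abs(c) for c in coeffs) / len(coeffs),
            "iqr": q3 - q1,
            "entropy": entropy}

# Synthetic profile mixing a coarse and a fine oscillation (48 samples, periodic).
profile = [math.sin(2 * math.pi * i / 8) + 0.5 * math.sin(2 * math.pi * i / 3)
           for i in range(48)]

sharp = subband_features(haar_detail(profile))
blurred = subband_features(haar_detail(box_blur(profile)))
entropy_diff = abs(sharp["entropy"] - blurred["entropy"])

print("sharp:  ", sharp)
print("blurred:", blurred)
print("entropy difference:", entropy_diff)
```

For this profile, the mean absolute value and the dispersion of the detail coefficients both drop after blurring, which is the behavior that wavelet-based sharpness measures rely on. Real retinal images would require a two-dimensional, multilevel decomposition of each color channel.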
In the previous work by AbdelHamid et al.,44 retinal quality assessment was based on the intuition that good quality images have sharp retinal blood vessels. Extensive analysis was made to find the single wavelet level having the most relevant blood vessel information. Sharpness quality evaluation was then performed using features calculated solely from that single wavelet level. However, the analysis demonstrated the dependence of the relevant wavelet level on the amount of blurring within the image. In this work, a more generic approach for retinal image sharpness assessment is adopted. Retinal images are decomposed into five wavelet levels (L1, L2, L3, L4, and L5) and all levels are involved in the computation of the sharpness features. Nirmala et al.41 have shown that there is a relation between retinal structures and different wavelet levels. They showed that, for the image size they considered, blood vessel and OD information was more relevant in the detail subbands of levels 2 to 4, whereas information related to the macular region was more significant in levels 1 to 3. Hence, considering L1 to L4 for sharpness feature computation has the advantage of taking into account sharpness information of all the different retinal structures. Level 5 has also been used for sharpness feature calculation to investigate its relevance to retinal image sharpness assessment. Another modification to our previous work is the use of UWT, which preserves more image information than the DecWT due to the absence of the decimation step.48 Sharpness RIQA algorithms commonly use only the green channel for feature computation because of its high contrast.7,17–19,28–30,43,49 However, observation of retinal images has shown that retinal structures in the red channel of sharp images were generally clearer than in those of blurred images. In this work, the proposed sharpness feature set includes four wavelet-based measures computed from both the red and green detail subbands.
The wavelet Shannon entropy,50 mean, and interquartile range (IQR) were used to measure the information content, expectation, and dispersion of the detail subband coefficients, respectively. The three sharpness features are given by the following equations:

$$\mathrm{WEntropy}_{\mathrm{subband}} = -\sum_{i=1}^{N} |C_i|^2 \log_e |C_i|^2, \tag{1}$$

$$\mathrm{WMean}_{\mathrm{subband}} = \frac{1}{N}\sum_{i=1}^{N} |C_i|, \tag{2}$$

$$\mathrm{WIQR}_{\mathrm{subband}} = Q_3 - Q_1, \tag{3}$$

where $N$ is the number of coefficients in the wavelet subband, $C_i$ is the wavelet coefficient having index $i$, subband is the horizontal, vertical, or diagonal subband, $Q_1$ is the first quartile, and $Q_3$ is the third quartile.

The fourth sharpness measure used is the difference in wavelet entropies between the test image and its blurred version (WEntropydiff). Sharp images are generally more affected by blurring than unsharp images. WEntropydiff separates sharp from unsharp images by measuring the effect of blurring on the test images. Gaussian filters are commonly used in the RIQA literature9,15,26 as a degradation function to produce a blurring effect. Several Gaussian filter sizes with different standard deviations were tested to compare their effect on retinal image sharpness, starting with a small Gaussian filter and then incrementally increasing the filter size. In our final experiments, a Gaussian filter with a standard deviation of 7 was used to get the blurred version of the test image, as it achieved noticeable blurring within the retinal images affecting both the thin and thick blood vessels. Smaller filters were found to scarcely cause any visually detectable blurring within the images. The sharpness feature set includes the wavelet-based entropy, mean, IQR, and WEntropydiff. All features were calculated from levels 1 to 5 of both the red and green detail subbands. Further feature selection will be performed in Sec. 5.1 based on performance analysis of the sharpness feature set on two different datasets.

4.2. Illumination

An increase or decrease in the overall image illumination could affect the visibility of retinal structures and some disease lesions.
The contrast of the image is also decreased, which could negatively affect the suitability of the image for medical diagnosis. It has been shown by Foracchia et al.51 that illumination in retinal images has low spectral frequency. Low-frequency information of the multiresolution decomposed images can be found in their approximation subbands. Most image details are separated in the detail subbands of higher wavelet levels. Hence, the L4 approximation subband is almost void of sharpness information, consisting mainly of illumination information. In this paper, the adequacy of using L4 RGB wavelet-based features for retinal illumination assessment is explored. Figure 3 shows examples of the RGB channels of well-illuminated, over-illuminated, and under-illuminated retinal images. The overall image illumination is seen to be directly reflected in the exposure of each of the RGB channels. RGB color information has been used before for retinal image illumination evaluation by several researchers. Niemeijer et al.18 created a feature set including information from five histogram bins for each of the RGB channels. Yu et al.7 calculated seven statistical features (mean, variance, skewness, kurtosis, and quartiles of the cumulative density function) from each of the RGB channels. The illumination feature set used here includes the mean and variance of the L4 approximation subband of each of the RGB channels.

Fig. 3. Examples of retinal images of varying illumination from DR2:9 well-illuminated (first row), over-illuminated (second row), and under-illuminated (third row). From left to right: color image, red channel, green channel, blue channel.

4.3. Homogeneity

The complexity of the retinal imaging setup along with the naturally concave structure of the retina can result in nonuniformities in the illumination of retinal images. As a result, the sharpness and visibility of the retinal structures can become uneven across the image.
This in turn would affect the reliability of the image for medical diagnosis. Retinal image homogeneity is commonly addressed in the literature using textural features15,17,28,30 that are not adapted for retinal images and can be computationally expensive.27 In this section, homogeneity measures specifically designed for retinal images are introduced. Retinal images from DR2, DRIMDB, and MESSIDOR datasets were inspected and analyzed in the process of developing the homogeneity features. All images used for the development of the homogeneity features were excluded from any further homogeneity tests to avoid classification bias.

4.3.1. Retinal saturation channel homogeneity features

The colors observed in retinal images depend on the reflection of light from the different eye structures. The radiation transport model (RTM)52,53 shows that light transmission and reflection within the eye mainly depend on the concentration of hemoglobin and melanin pigments within its structures.54 Red light is scarcely absorbed by either hemoglobin or melanin and is reflected by layers beyond the retina. This results in the usually over-illuminated, low-contrast red channel as well as the reddish appearance of the retinal images. Blue light is strongly absorbed by hemoglobin and melanin as well as by the eye lens.55 This results in the commonly dark blue channel of retinal images. Green light is also absorbed by both pigments but less than the blue light. Specifically, retinal structures containing hemoglobin, such as blood vessels, absorb more green light than the surrounding tissues, resulting in the high vessel contrast of the green channel. The RTM directly explains the theory behind the well-known notion of retinal images having overexposed red channels, high contrast green channels, and dark blue channels. Based on this theory, we propose a saturation channel specifically suitable for retinal images.
The proposed saturation channel was inspired by the saturation channel of the HSV model ($S_{\mathrm{HSV}}$) and is referred to as the retinal saturation channel. By definition, saturation is the colorfulness of a color relative to its own brightness.56 In the HSV color model, saturation is calculated as the most dominant of the RGB channels with respect to the least prevailing color as given by the following equation:

$$S_{\mathrm{HSV}} = \frac{\max(R,G,B) - \min(R,G,B)}{\max(R,G,B)}.$$

The retinal saturation channel considers the findings of the RTM in creating a saturation channel better suited for retinal images. Essentially, the retinal saturation channel has two main modifications over $S_{\mathrm{HSV}}$:

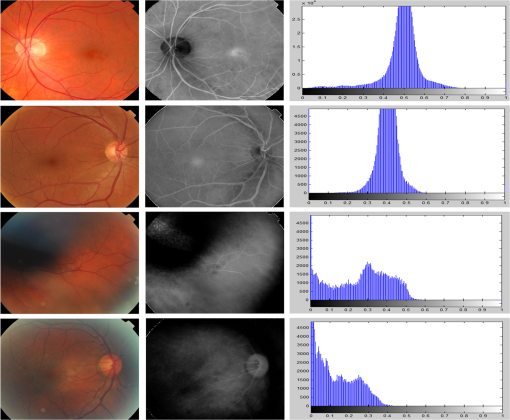

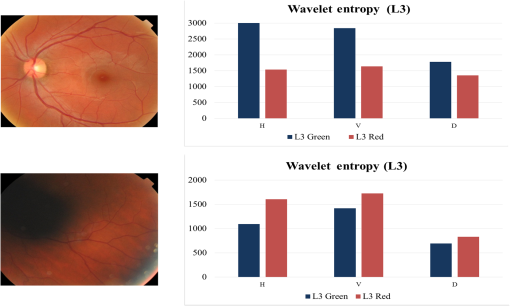

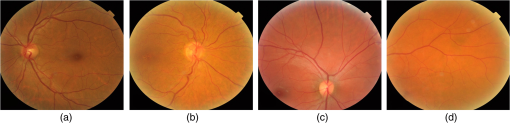
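For reference, the standard HSV saturation from which the retinal saturation channel departs can be computed per pixel as in the minimal sketch below. The function name and the example pixel are hypothetical, and the modified retinal definition itself is not reproduced here.

```python
import colorsys

def hsv_saturation(r, g, b):
    """HSV saturation of one RGB pixel with components in [0, 1]."""
    largest, smallest = max(r, g, b), min(r, g, b)
    if largest == 0:
        return 0.0  # black pixel: saturation is taken as zero
    return (largest - smallest) / largest

# Hypothetical reddish-orange pixel, loosely resembling a fundus background color.
pixel = (0.8, 0.4, 0.2)
print(hsv_saturation(*pixel))           # approximately 0.75
print(colorsys.rgb_to_hsv(*pixel)[1])   # same value from the standard library
```

Because the red channel is usually the largest and the blue channel the smallest in retinal images, the standard definition tends to be driven by those two channels alone, which is the kind of behavior a retina-specific saturation channel is meant to address.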

The newly proposed retinal saturation channel is then defined in terms of R, G, and B, which denote the red, green, and blue components of the retinal image, respectively.

Figure 4 shows examples of retinal images of various homogeneities along with their retinal saturation channel and its histogram. Evenly illuminated retinal images as in Fig. 4 (rows 1 and 2) resulted in Gaussian-like distributions centered within the middle region of the histogram. On the other hand, for nonhomogeneous retinal images as in Fig. 4 (rows 3 and 4), the shape of the distribution was altered and the distribution was shifted leftward. The difference in histogram positioning between the homogeneous and nonhomogeneous images is accounted for by measuring the percentage of pixels in the middle range of the retinal saturation channel histogram. A 75% middle intensity range was used to accommodate the different lighting conditions of homogeneous retinal images. Furthermore, several statistical features were used to evaluate the images’ homogeneity, capturing the observed differences between the histogram distributions of homogeneous and nonhomogeneous retinal images.

Fig. 4. Examples of homogeneous retinal images from (row 1) MESSIDOR,46 (row 2) DR2,9 and (rows 3 and 4) nonhomogeneous retinal images from DR2.9 From left to right: color image, retinal saturation channel, and retinal saturation channel histogram.

The homogeneity feature set includes the retinal saturation channel mean, variance, quartiles, IQR, skewness, kurtosis, coefficient of variation (CV), middle-range pixel percentage, energy, and entropy. More features will be added to the homogeneity feature set in the next section.

4.3.2. Wavelet-based homogeneity features

Wavelet-based features are introduced in this section to complement the features derived from the retinal saturation channel in order to comprehensively address retinal image homogeneity.

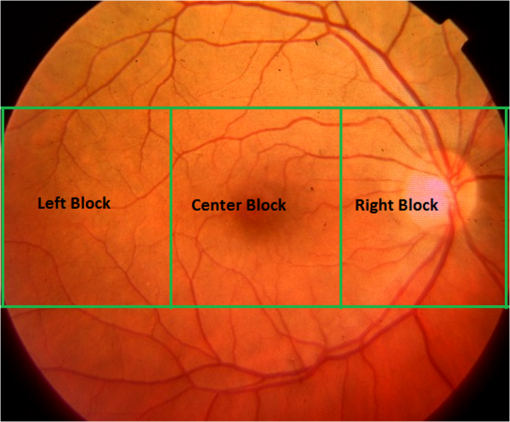

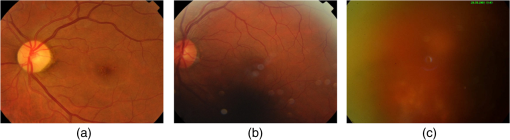

Fig. 6. Homogeneous (first row) and nonhomogeneous (second row) retinal images from DR2.9 From left to right: color image, green and red channel wavelet entropies of the L3 horizontal, vertical, and diagonal subbands.

Fig. 7. Examples of (a) homogeneous (from DR2),9 (b) nonhomogeneous sharp (from DR2),9 and (c) nonhomogeneous blurred (from DRIMDB)30 retinal images with WaveletEntropyL3/WaveletEntropyL1 ratios of (a) 37, (b) 11, and (c) 7.5.

The overall homogeneity feature set contains the retinal saturation channel features (mean, variance, IQR, skewness, kurtosis, CV, middle-range pixel percentage, energy, and entropy) along with the difference between red and green L3 wavelet entropies and the WaveletEntropyL3/WaveletEntropyL1 ratio.

4.4. Field Definition

Sharp and well-illuminated retinal images can be regarded as medically unsuitable if they have missing or incomplete retinal structures. Inadequate field definition can be caused by patient movement, latent squint, or insufficient pupil dilation.23 In this study, we are concerned with macula centered retinal images captured at the 45-deg FOV commonly recommended in DR screening.9,23,24 Macula centered retinal images should have the macula centered horizontally and vertically within the image with the OD to its left or right for left and right eyes, respectively.23 Such an orientation assures that the diagnostically important macular region is completely visible within the retinal images. Figure 8 shows examples of macula and nonmacula centered retinal images.

Fig. 8. Retinal images with different field definition: (a) macula centered, (b)–(d) nonmacula centered, from DR2.9

The proposed algorithm assesses an image’s field definition by checking whether or not the OD is located in its expected position. Initially, the middle region within the image is divided into three blocks: left, center, and right as shown in Fig. 9.
A relatively large center region (50% of the image height) is considered to take into account cases in which the OD is slightly shifted upward or downward while adequate field definition is still maintained. The algorithm evaluates the image’s field definition by inspecting only these three blocks, taking into account two observations: the block containing the OD has a higher vessel concentration, and a brighter illumination, than the block containing the macula.

Based on these two observations, four measures are proposed for field definition validation. They are computed from the sharpness measures calculated for the left, center, and right blocks and from the illumination measures calculated for the same three blocks.

Macula centered images are expected to have the macula within their center block and the OD in one of the edge blocks (left or right). Thus, based on the two former observations, macula centered images will be characterized by edge-block sharpness and illumination measures that are larger than the center-block sharpness and illumination measures, respectively. Furthermore, the difference features exploit the fact that the macula region, expected to be in the image’s center block, has lower vessel concentration and darker illumination relative to the block including the OD. The field definition feature set includes the measures defined by Eqs. (6)–(9). The sharpness and illumination features were calculated using the L4 wavelet-based entropy and RGB features described in Secs. 4.1 and 4.2, respectively.

4.5. Outliers

Outlier images can be captured during retinal image acquisition due to incorrect camera focus or incorrect patient positioning.30 They consist of nonretinal information, making them irrelevant for medical diagnosis. If outlier images are processed by ARSS, they can be falsely classified as being medically suitable images, leading to unreliable or incorrect diagnosis. Generally, retinal images are characterized by their unique coloration, having a dominating reddish-orange background along with some dark red vessels and the yellowish OD. This characteristic dominating reddish-orange color of the retinal images can be explained by the RTM detailed in Sec. 4.3.1. On the other hand, nonretinal images tend to have more generalized colorations as shown in Fig. 10.
Color information from the RGB channels has been commonly used to discriminate retinal from nonretinal images.19,30 Nevertheless, several color models exist that separate color and luminance information, such as the CIELab and HSV models. In the CIELab model, images are represented by three channels: image luminance, the difference between red and green, and the difference between yellow and blue. In the HSV model, image color, saturation, and value information are separated into three different channels. In this work, color information is also used to differentiate between retinal and nonretinal images. Statistical features calculated from three different color models are compared for outlier image detection. The mean, variance, skewness, kurtosis, and energy calculated from the RGB channels, HSV hue and saturation channels, and the CIELab color channels will be compared in the next section to choose the color model most suitable for outlier feature calculation.

5. Results

In this section, the analyses and classification results of the proposed RIQA measures are presented. Initially, each of the five different feature sets (sharpness, illumination, homogeneity, field definition, and outliers) is separately evaluated. Next, the final quality feature vector is constructed and used to classify the DR1 dataset, which includes retinal images having various quality issues. Finally, the overall quality results from the proposed RIQA algorithm are compared to other algorithms from the literature. Table 1 shows a summary of the datasets used in the analysis of each of the individual feature sets and the overall quality feature vector.

Table 1. Summary of the datasets used in testing the proposed quality features.

The field definition dataset was created using randomly selected images from the already categorized macula centered and nonmacula centered images within DR2. Furthermore, three datasets were created for the evaluation of the sharpness, illumination, and homogeneity feature sets. Bad quality images in each of these datasets were chosen to convey degradations related to the quality issue under consideration. Two experts individually annotated each of the four created datasets for sharpness, illumination, homogeneity, and field definition. The interrater agreement between the two raters was measured using Cohen’s kappa coefficient.57 The kappa coefficient is a statistical measure of interrater agreement for categorical items. It is generally thought to be more robust than simple percentage agreement since it takes into account the agreement occurring by chance. The kappa coefficient was found to be 0.946, 0.967, 1.000, and 1.000 for the sharpness, illumination, homogeneity, and field definition datasets, respectively. This indicates the high level of reliability of the customized datasets. The sharpness feature set was also tested on the HRF dataset, whereas the outliers feature set was evaluated using DRIMDB. Wavelet-based features were calculated using the Daubechies4 (db4) UWT. All images were cropped to reduce the size of the dark region surrounding the retinal region of interest (ROI) within the image prior to feature calculation. Image cropping has the advantage of decreasing the images’ size, and in turn the processing time, by removing the irrelevant nonretinal regions within the image.16 The cropping algorithm employed is an adaptation of the method illustrated in Ref. 58. First, a binary mask image is created through hard thresholding; then the ROI is determined by identifying the four corners of this mask image. The computed boundaries are used to create the cropped retinal image utilized for feature calculations.
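The cropping step described above can be sketched as follows. This is a minimal illustration and not the adapted method of Ref. 58: the threshold value, the function name `crop_to_retina`, the use of a single grayscale channel, and the use of an axis-aligned bounding box in place of explicit corner detection are all assumptions made for the example.

```python
def crop_to_retina(image, threshold=10):
    """Crop a grayscale image (list of rows) to the bounding box of bright pixels.

    A pixel belongs to the binary mask when its value exceeds `threshold`
    (hard thresholding); the crop keeps the smallest rectangle containing the mask.
    """
    rows = [y for y, row in enumerate(image) if any(v > threshold for v in row)]
    cols = [x for x in range(len(image[0]))
            if any(row[x] > threshold for row in image)]
    if not rows or not cols:
        return image  # nothing above the threshold: leave the image unchanged
    top, bottom, left, right = rows[0], rows[-1], cols[0], cols[-1]
    return [row[left:right + 1] for row in image[top:bottom + 1]]

# Tiny synthetic "fundus" image: a bright region surrounded by a dark border.
image = [
    [0, 0,  0,  0,  0, 0],
    [0, 0, 50, 60,  0, 0],
    [0, 40, 90, 80, 30, 0],
    [0, 0, 55, 65,  0, 0],
    [0, 0,  0,  0,  0, 0],
]
for row in crop_to_retina(image):
    print(row)
```

Dark corner pixels inside the bounding box are retained, so the crop shrinks the surrounding dark region without removing it entirely.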
Feature computations were performed using MATLAB software (Mathworks, Inc., Natick, Massachusetts). All classification results were obtained using fivefold cross validation in the Weka platform.59 For the analyses of the individual feature sets, the k-nearest neighbor (kNN) classifier was utilized as it can be easily tuned and provides consistent results. In all kNN classification experiments within this work, the parameter k was varied over the range from 1 to 10 and the best classification results were reported. Final tests on the DR1 dataset were performed using the support vector machine (SVM) classifier tuned to give optimal performance.

5.1. Sharpness Algorithm Classification Performance

In order to test the proposed sharpness algorithm, two datasets of different resolutions and degrees of blur were used. The first dataset is a subset of DRIMDB and the second dataset is HRF. Each of the datasets consists of 18 good quality and 18 bad quality images. Unlike the severely blurred DRIMDB images, the unsharp images in the HRF dataset are only slightly blurred, with the main vessels still clearly visible. Images of the DRIMDB and HRF datasets were resized to make them suitable for five-level wavelet decomposition. For the first dataset, classification using the complete sharpness feature set resulted in an area under the receiver operating characteristic curve (AUC) of 0.997. In further experiments, only the wavelet entropy, mean, IQR, or WEntropydiff was used for sharpness classification. AUCs higher than 0.99 were achieved when any of the four sharpness measures derived from either the green, red, or both channels was separately used. Moreover, the elimination of L5 sharpness features did not degrade the results in any of the previous cases. The high classification performance can be attributed to the ability of the wavelet-based features to easily separate sharp images from severely blurred images.
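For reference, the AUC values quoted throughout this section can be computed directly from classifier scores. The sketch below uses the pairwise (Mann-Whitney) formulation of the AUC; it is a generic illustration and not the Weka implementation used in this work, and the labels and scores are made-up values.

```python
def auc(labels, scores):
    """Area under the ROC curve as the fraction of (positive, negative)
    pairs that the scores rank correctly; ties count as half a pair."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative sample")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


# Hypothetical scores: 1 = good quality, 0 = bad quality.
labels = [1, 1, 1, 0, 0, 0]
scores = [0.9, 0.8, 0.4, 0.5, 0.3, 0.1]
example_auc = auc(labels, scores)  # 8 of the 9 pairs are ordered correctly
```

An AUC of 1 therefore means that every good quality image received a higher score than every bad quality image, which is the situation reported for the severely blurred DRIMDB subset.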
The second dataset is considered more challenging since its poor quality images are only slightly blurred. An AUC of 0.951 was achieved using the entire sharpness feature set. Several observations can be made from further experiments, whose results are summarized in Tables 2 and 3. First, all red channel sharpness features resulted in higher classification performance than green channel features. Nevertheless, the best results were achieved when both the red and green channels were used for sharpness feature computations. Second, Table 2 shows a slight improvement in classification results (AUC of 0.954) upon the omission of L5 sharpness features when both red and green channel features are combined. Third, the comparison between the absolute wavelet entropy and WEntropydiff classification results summarized in Table 3 shows an advantage to using WEntropydiff.

Table 2. AUC for the HRF dataset using wavelet-based sharpness features.

Table 3. Comparison between the AUCs using wavelet entropy versus WEntropydiff (L1 to L4) for the HRF dataset.

Based on the experiments performed on the two sharpness datasets, several modifications to the sharpness feature set were made. Previous research has shown that L5 scarcely had any sharpness-related information.41,44 Furthermore, experiments have shown that the elimination of L5 sharpness features had minimal effect on classification performance. Consequently, only four-level wavelet decompositions will be employed in sharpness computations. Moreover, absolute wavelet entropy will be omitted from the sharpness vector as the WEntropydiff performance was shown to be either equivalent to or better than that of the absolute wavelet entropy. The final quality feature vector will thus include the wavelet-based mean, IQR, and WEntropydiff sharpness features. All sharpness features are to be calculated from both the red and green channels of the L1 to L4 detail subbands.

5.2. Illumination Algorithm Classification Performance

A dataset consisting of 60 well-illuminated and 60 poorly illuminated images from DR1 was constructed to test the illumination algorithm. The poorly illuminated images had overall exposure issues, being either over- or under-illuminated. Images were resized to make them suitable for four-level wavelet decomposition. An AUC of 1 was achieved for the proposed illumination feature vector. Table 4 shows classification results when utilizing separate and combined color channels for feature computation. The highest single-channel results were obtained by the green channel features, followed by the red and then the blue. Superior performance was achieved when all color channel features were combined. The final quality feature vector will contain the complete illumination feature set.

Table 4. AUC for the illumination dataset using L4 wavelet illumination features.

5.3. Homogeneity Algorithm Classification Performance

In order to test the proposed homogeneity algorithm, a dataset including 60 homogeneous and 60 nonhomogeneous retinal images from DR1 was created. Homogeneity classification results using the different proposed features are summarized in Table 5. Classification with Sretina features, wavelet entropy R–G, and WaveletEntropyL3/WaveletEntropyL1 resulted in AUCs of 0.981, 0.899, and 0.982, respectively. The complete homogeneity feature set resulted in an AUC of 0.999. All proposed homogeneity features will be added to the final quality vector.

Table 5. AUC for the homogeneity dataset using Sretina and wavelet features.

5.4. Field Definition Algorithm Classification Performance

A dataset consisting of 60 macula centered and 60 nonmacula centered retinal images was constructed from DR2 for assessment of the proposed field definition features. All images were resized to make them suitable for four-level wavelet decomposition. Classification using the proposed field definition features was perfect, with an AUC of 1.

5.5. Outlier Algorithm Classification Performance

In order to test the outlier detection algorithm, the DRIMDB dataset was used, which includes 194 retinal images and 22 nonretinal images. The RGB channels, the HSV model’s hue and saturation channels, and the CIELab model’s a (red–green) and b (blue–yellow) channels were compared for feature computation. As illustrated in Table 6, the lowest classification results were attained by the RGB features, giving an AUC of 0.968. The HSV and CIELab features resulted in slightly higher AUCs of 0.993 and 0.991, respectively. Based on these classification results, only the outlier features derived from the HSV color space will be included in the final quality vector.

Table 6. AUC for DRIMDB outlier classification using RGB, HSV, and CIELab features.
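As a rough illustration of the HSV outlier features, the sketch below computes the mean, variance, skewness, kurtosis, and energy of the hue and saturation channels using only the Python standard library. The function names are hypothetical, pixels are assumed to be RGB triples scaled to [0, 1], and energy is taken here to be the sum of squared values, which is an assumption since the exact definition is not restated in this section.

```python
import colorsys
import statistics


def moments(values):
    """Mean, variance, skewness, kurtosis, and energy of one channel."""
    n = len(values)
    mean = statistics.fmean(values)
    var = sum((v - mean) ** 2 for v in values) / n
    std = var ** 0.5
    if std == 0:
        # Flat channel: standardized moments are undefined, so report 0.
        skew, kurt = 0.0, 0.0
    else:
        skew = sum(((v - mean) / std) ** 3 for v in values) / n
        kurt = sum(((v - mean) / std) ** 4 for v in values) / n
    energy = sum(v * v for v in values)  # assumed definition of energy
    return [mean, var, skew, kurt, energy]


def hsv_outlier_features(pixels):
    """Return 10 features: five statistics each for hue and saturation."""
    hsv = [colorsys.rgb_to_hsv(r, g, b) for r, g, b in pixels]
    hue = [h for h, _, _ in hsv]
    sat = [s for _, s, _ in hsv]
    return moments(hue) + moments(sat)


# Four made-up pixels standing in for an image.
pixels = [(1.0, 0.0, 0.0), (0.5, 0.25, 0.25), (0.2, 0.2, 0.2), (0.0, 0.0, 1.0)]
features = hsv_outlier_features(pixels)
check = moments([1, 2, 3, 4])
```

The resulting ten-element vector is the kind of input that a classifier such as kNN would receive to separate retinal from nonretinal images.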

5.6. Overall Quality Classification Results

In Secs. 5.1 to 5.5, each of the five quality feature sets for sharpness, illumination, homogeneity, field definition, and outlier assessment was separately tested and evaluated. Based on the performed analyses, the overall quality feature vector is composed of the features described in Table 7. In this section, the DR1 dataset is used for the evaluation of the overall quality feature vector. It is important to note that images from the DR1 dataset were not utilized in any analysis that could affect or bias the final classification results. The good quality images within DR1 demonstrate varying degrees of sharpness and illumination. Moreover, the DR1 bad quality images suffer from a wide range of quality issues including slight/severe blur, over/under illumination, nonhomogeneity, nonmacula centering, and nonretinal images.

Table 7. Overall quality feature vector description.

Several preprocessing steps were performed on the DR1 dataset prior to feature extraction. Initially, a median filter was applied to all images for noise reduction. Next, unsharp masking and contrast adjustment were utilized to enhance the overall sharpness and contrast of the images, respectively. Generally, retinal image enhancement is especially useful for retinal images of borderline quality. Image enhancement improves the quality of these images, further differentiating them from bad quality images. Furthermore, all images were resized to make them suitable for four-level wavelet decomposition. Table 8 compares classification results of the proposed RIQA algorithm to other existing techniques. Two generic RIQA algorithms were reimplemented by the authors for the sake of this comparison. The first algorithm was introduced by Fasih et al.,17 who combined the cumulative probability of blur detection (CPBD) sharpness feature60 with run length matrix (RLM) texture features61 for quality assessment. Fasih et al. calculated their features only from the OD and macular regions of the retinal image. The second algorithm is the work of Davis et al.,15 who computed spatial frequency along with CIELab statistical and Haralick textural features62 from seven local regions covering the entire retinal image. Moreover, the results of the hybrid approach by Pires et al.9 are also included in the comparison. Pires et al. used the area occupied by retinal blood vessels and visual word features, along with quality assessment measures estimated from comparing the test image to both blurred and sharpened versions of itself.

Table 8. AUC results comparing the proposed clarity and content RIQA algorithm with other algorithms for DR1.

An SVM classifier with a radial basis function (RBF) kernel was used for the classification of the proposed and reimplemented algorithms. Generally, the performance of the SVM classifier is dependent on its cost and gamma parameters. A grid search was performed to find the optimal classifier parameters in each case. Among the previous works, the best classification results were obtained by the hybrid algorithm of Pires et al., which achieved an AUC of 0.908. Nevertheless, the proposed RIQA algorithm outperforms all the compared methods with an AUC of 0.927.

6. Discussion

A no-reference RIQA algorithm was proposed that relies on both clarity (sharpness, illumination, and homogeneity) and content (field definition and outliers) evaluation. Wavelet-based sharpness and illumination features were calculated from the detail and approximation subbands, respectively. The proposed Sretina channel, created specifically for retinal images, was used along with two wavelet-based features for homogeneity assessment. Moreover, the wavelet-based sharpness and illumination features were utilized to ensure that images have an adequate field definition, while color information differentiated between retinal and nonretinal images.

6.1. Sharpness Algorithm Analysis

Wavelet decomposition has the advantage of separating image details of different frequencies into successive wavelet levels. Generally, wavelet-based sharpness features were shown to have the advantages of being simple, computationally inexpensive, and considering structural information while giving reliable results for datasets of varying resolutions and degrees of blur. Several wavelet-based features were computed from the red and green image channels for sharpness assessment. The green channel is often used solely for RIQA due to its high blood vessel contrast. However, the visibility of the retinal structures within the red channel can also be affected by the image’s overall sharpness.
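To make the idea of level-wise sharpness features concrete, the following Python sketch decomposes a one-dimensional signal with an undecimated Haar transform and computes an entropy, mean, and IQR per detail level. Several simplifications are assumptions of this example and not the method of this work: a 1-D signal replaces the 2-D image channel, the Haar filter replaces db4, and the entropy is taken as the Shannon entropy of the normalized coefficient energies.

```python
import math
import statistics


def undecimated_haar(signal, levels):
    """Return (approximation, [detail_1, ..., detail_levels]) without subsampling."""
    approx = list(signal)
    n = len(approx)
    details = []
    for level in range(levels):
        step = 2 ** level  # filter taps are spread apart instead of subsampling
        shifted = [approx[(i + step) % n] for i in range(n)]  # periodic boundary
        details.append([(a - b) / 2 for a, b in zip(approx, shifted)])
        approx = [(a + b) / 2 for a, b in zip(approx, shifted)]
    return approx, details


def shannon_entropy(coeffs):
    """Entropy of the normalized coefficient energies (an assumed definition)."""
    energy = [c * c for c in coeffs]
    total = sum(energy)
    if total == 0:
        return 0.0
    return -sum((e / total) * math.log2(e / total) for e in energy if e > 0)


def sharpness_features(signal, levels=4):
    """Per level: entropy of the details, mean and IQR of their magnitudes."""
    _, details = undecimated_haar(signal, levels)
    features = []
    for band in details:
        magnitudes = [abs(c) for c in band]
        q1, _, q3 = statistics.quantiles(magnitudes, n=4)
        features += [shannon_entropy(band), statistics.mean(magnitudes), q3 - q1]
    return features


def blur(signal):
    """Three-tap periodic moving average, used here to simulate blur."""
    n = len(signal)
    return [(signal[i - 1] + signal[i] + signal[(i + 1) % n]) / 3 for i in range(n)]


sharp = [0, 100, 20, 80, 0, 60, 100, 40] * 4
sharp_feats = sharpness_features(sharp)
blurred_feats = sharpness_features(blur(sharp))
```

On this toy signal the level-1 mean detail magnitude drops after blurring, which mirrors the behavior that makes detail-subband statistics usable as sharpness features. Extending the sketch to images would require a 2-D transform and the feature definitions given earlier in the paper.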
Furthermore, the OD is generally more prominent in the red channel than in the green channel of retinal images.63 Hence, combining the red and green channels for sharpness assessment allows for better consideration of the different retinal structures. In this work, it was shown that both green and red channels were relevant to retinal image sharpness assessment and that their combination can improve the classification performance. The usefulness of the retinal images’ red channel has been previously demonstrated in segmentation and enhancement algorithms. In OD segmentation, the red channel has been used either separately63 or alongside the green channel64 since the OD boundary is more apparent in the red channel. In retinal image enhancement, information from the red channel was used along with the green channel to improve results.65 Generic RIQA algorithms commonly use a combination of sharpness, textural, and statistical features. Spatial frequency15 and CPBD17 are among the sharpness features used in generic methods. Classification of the sharpness dataset drawn from DRIMDB using spatial frequency and CPBD resulted in AUCs of 0.948 and 0.81, respectively. Wavelet-based sharpness features were shown in Sec. 5.1 to give an AUC higher than 0.99 for the same dataset, showing the superiority of the wavelet-based sharpness measures. Earlier research has shown that there is a relation between the different wavelet levels and the various retinal structures.41 In order to study the wavelet level significance for the different wavelet-based sharpness features, the proposed features were computed from individual levels and the results are summarized in Table 9. Classifications were performed by the kNN classifier using features calculated from both the red and green channels of the resized HRF dataset. In the given case, results show that L3 features produced the highest results for wavelet entropy, whereas L4 features gave the highest results for mean and IQR.
Consequently, the wavelet level significance is found to be feature dependent. The approach adopted in this work combines sharpness features from different wavelet levels instead of finding the most relevant levels. For each of the three sharpness features, best results were achieved when L1 to L4 were combined. Hence, combining sharpness features from several wavelet levels was found to give more consistent classification performance than relying on a single wavelet level for the feature calculation.

Table 9. AUC for sharpness classification using different individual and combined wavelet levels on the HRF dataset.

6.2. Illumination and Homogeneity Algorithms Analysis

Modern theories on color vision explain that the human eye views color in a two-stage process described by the trichromatic and opponent theories.66 The trichromatic theory states that the retina has three types of cones sensitive to red, green, and blue. The opponent theory indicates that somewhere between the optic nerve and the brain, this color information is translated into distinctions between light and dark, red and green, as well as blue and yellow. The RGB and CIELab color models are the closest to the trichromatic and opponent theories, respectively. Nevertheless, other models such as HSV and HSI also separate the image color and illumination information. In this work, RGB illumination features were computed from the approximation wavelet subbands since the image’s illumination component resides within its wavelet approximation subband.67 The utilization of the RGB model for retinal illumination feature calculation is compared to using the luminance information of the CIELab, HSV, and HSI models. For the previously created illumination dataset, the mean and variance were computed from the four color models. Classification results using kNN are summarized in Table 10. Initially, only the illumination channels were used for classification, resulting in AUCs of 0.953, 0.932, and 0.944 for the CIELab, HSV, and HSI color models, respectively. Next, the color and saturation channel features were added, resulting in an increase in AUCs by approximately 4% to 6% for the different color models to become 0.992, 0.987, and 0.99 for the CIELab, HSV, and HSI color models, respectively. Hence, it is demonstrated that utilizing all color channels improves the illumination classifier performance for all the color models. The proposed wavelet-based illumination features achieve an AUC of 1, hence demonstrating competitive performance as compared to other color models.
Table 10. AUC for the illumination dataset using the RGB, CIELab, HSV, and HSI color models.
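The kind of comparison summarized in Table 10 can be illustrated with a small Python sketch that computes the mean and variance of the luminance-like channels of different color models. It is a simplified illustration under stated assumptions: statistics are taken directly over pixels rather than over a wavelet approximation subband, CIELab is omitted because it needs a white-point conversion not available in the standard library, the function names are hypothetical, and pixels are RGB triples scaled to [0, 1].

```python
import colorsys
import statistics


def channel_stats(values):
    """Mean and population variance of one channel."""
    return statistics.mean(values), statistics.pvariance(values)


def illumination_features(pixels):
    """Mean/variance of R, G, B, the HSV value channel, and the HSI intensity."""
    pixels = list(pixels)
    rgb = {name: channel_stats([p[i] for p in pixels]) for i, name in enumerate("RGB")}
    # HSV value is the largest of the three RGB components.
    value = channel_stats([colorsys.rgb_to_hsv(*p)[2] for p in pixels])
    # HSI intensity is the average of the three RGB components.
    intensity = channel_stats([sum(p) / 3 for p in pixels])
    return {"RGB": rgb, "HSV_value": value, "HSI_intensity": intensity}


# Two made-up uniform patches standing in for well and poorly illuminated images.
well_lit = [(0.8, 0.4, 0.2)] * 4
under_lit = [(0.1, 0.05, 0.02)] * 4
bright = illumination_features(well_lit)
dark = illumination_features(under_lit)
```

Here the mean of every luminance-like channel is clearly lower for the under-illuminated patch, which is the separation that the mean and variance features are meant to capture.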

Retinal image homogeneity was assessed using a combination of measures that were especially customized for retinal images. The proposed Sretina channel was inspired by the saturation channel of the HSV model along with the RTM. The previously created homogeneity dataset was used to compare the homogeneity features computed from the proposed Sretina channel and from two saturation channels of other color models. Table 11 summarizes the results obtained by the kNN classifier, in which an AUC of 0.981 was achieved by the proposed Sretina features, which is 5% and 9% higher than the AUCs obtained from the other two saturation channels. These results indicate the efficiency of the proposed Sretina channel for retinal image homogeneity assessment as compared to saturation channels from different color models.

Table 11. AUC for the homogeneity dataset using different saturation channels.

Most previous work in RIQA did not discriminate between overall image illumination and homogeneity. Instead, various statistical and textural features were combined to consider different retinal image luminance aspects. For example, Yu et al.7 used seven RGB histogram features (mean, variance, kurtosis, skewness, and quartiles of the cumulative distribution function) to measure the retinal image brightness, contrast, and homogeneity. They also added entropy, spatial frequency, and five Haralick features to measure image complexity and texture. Davis et al.15 used the kurtosis, skewness, and mean of the three CIELab channels for complete characterization of retinal image luminance. In order to compare retinal image luminance assessment algorithms, the illumination and homogeneity datasets were combined. Table 12 compares the kNN classifier results of the proposed illumination and homogeneity features to the measures suggested by Yu et al.7 and Davis et al.15 AUCs of 0.985, 0.933, and 0.943 were achieved for the proposed features, the RGB features,7 and the CIELab features,15 respectively. The AUC of the algorithm by Yu et al.7 increased to 0.975 when texture and complexity features were added. Nevertheless, the introduced features achieved the highest classification results with an AUC of 0.985. Overall, the analyses summarized in Tables 10–12 show that the newly proposed illumination and homogeneity features are of high relevance to retinal image luminance assessment.

Table 12. Comparison between luminance features based on AUC for the combined illumination and homogeneity datasets.

Studies have shown that retinal structures captured within color images as well as the phenotype and prevalence of some ocular diseases vary with ethnicity.68,69 For example, pigmentation variations among ethnic groups can affect the observed ocular image.70 Giancardo et al.71 have noticed that color retinal images of Caucasians have a strong red component whereas those of African Americans have a much stronger blue component. This could have an effect on the proposed retinal saturation channel when dealing with different ethnic groups. A possible solution to this issue could be the introduction of a parameterized saturation channel that could be adjusted according to the ethnic group under consideration. In conclusion, the study of the effect of ethnicity on retinal image processing techniques is essential to ensure the reliability of ARSS for heterogeneous population screening.

6.3. Field Definition and Outliers Algorithms Analysis

RIQA algorithms usually classify retinal images into good or bad quality images relying solely on image clarity features, assuming processed images to be retinal and to have adequate field definition. In real systems, such an assumption cannot be made since images with inadequate content can be captured. Fleming et al.23 have observed that of medically unsuitable retinal images fail due to inadequate field definition. Further processing of images with inadequate content by automatic RIQA systems could result in misdiagnosis. Thus, validating retinal image content along with its clarity is an indispensable step in RIQA, specifically in automated systems. Macula-centered retinal images are commonly considered in field definition assessment algorithms targeting DR screening systems.9,23,24 The macula is the part of the retina responsible for detailed central vision. DR signs thus become more serious if located near the center of the macula.72 Hence, the macula and surrounding regions are specifically important in the diagnosis of DR.
In this study, we were concerned with macula centered retinal images for DR screening. Nevertheless, RIQA is an application-specific task.22 Different considerations in the RIQA algorithm might be necessary if a different FOV or other retinal diseases were of interest. The introduced field definition assessment algorithm operates by checking that the OD is in its expected position. It is based on the observations that the OD has a high density of thick vessels and is overly illuminated. Therefore, the introduced algorithm exploits the proposed wavelet-based sharpness and illumination features. Comparing L3 and L4 for field definition feature calculation resulted in AUCs of 0.991 and 1, respectively. Thus, both levels were found to be of similar significance for field definition assessment. Finally, image color information was employed to differentiate between retinal and nonretinal images. Comparison between features calculated from the RGB, CIELab, and HSV models has shown that color information is generally adequate for outlier detection.

6.4. Overall RIQA Algorithm Analysis

In this work, RIQA was performed based on the combination of five quality feature sets used to evaluate image sharpness, illumination, homogeneity, field definition, and outliers. The proposed quality algorithm relies on the computation of several transform and statistical-based features. Generally, transform-based approaches have the advantage of being consistent with the human visual system,73 in which the eye processes high and low frequencies through different optical paths.74 Furthermore, both transform and statistical-based features have the advantage of being computationally inexpensive while giving reliable results. Initially, each of the five feature sets was separately evaluated using custom datasets.
Classification results showed the efficiency of the presented features, with all the feature sets achieving AUCs greater than 0.99 (the only exception being the sharpness features applied to the slightly blurred HRF dataset, which gave a maximum AUC of 0.954). Finally, all proposed clarity and content RIQA features were combined for the classification of the DR1 dataset, achieving an AUC of 0.927, which is up to 4.5% higher than other RIQA algorithms from the literature. The overall RIQA algorithm required for preprocessing and overall feature calculation of a single image using MATLAB. Several methods can be adopted to further reduce the overall processing time of the algorithm. The implementation of the algorithm using more efficient programming languages can help reduce the run time. Parallel processing of the feature computations as well as feature selection algorithms can also reduce the execution time. Furthermore, the DecWT can be used for image decomposition instead of UWT. DecWT is characterized by its subsampling nature, resulting in halving of the image size with each level of decomposition. As a result, DecWT has the advantages of being fast and storage efficient. However, DecWT is shift variant such that any modification of its coefficients results in the appearance of a large number of artifacts in the reconstructed image.75 UWT was designed to overcome the DecWT limitation by avoiding image shrinking with multilevel decomposition, leading to the preservation of more relevant image information.48 Furthermore, UWT is shift invariant, making it more suitable for wavelet-based image enhancement and denoising than DecWT.39 The advantages of UWT over DecWT come with the trade-off of increased processing time. Comparison between UWT and DecWT timing performance for the proposed algorithm is summarized in Table 13.
DecWT is shown to be more than five times faster than UWT for wavelet decomposition and fifteen times faster in sharpness wavelet entropy calculation. Overall, the preprocessing and final quality feature vector computation was found to be approximately four times faster when DecWT was used instead of UWT for image decomposition. Furthermore, classification performance was similar whether DecWT or UWT was used for image decomposition (AUC of 0.927 in both cases for the RBF kernel in SVM). Thus, the proposed wavelet-based features are shown to be equally efficient when calculated from either the decimated or undecimated wavelet decompositions. In conclusion, for the proposed RIQA algorithm, DecWT is more suitable for real-time systems as it is more computationally efficient.

Table 13. Timing comparison in seconds between UWT and DecWT in RIQA.
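The structural difference between the two transforms can be seen in a minimal one-level Python sketch. It uses a 1-D Haar filter with periodic boundaries purely for illustration (the function names and the test signal are assumptions, and this work used db4 on images), and it shows the halving of the output size for DecWT and the shift invariance of the UWT coefficients.

```python
def haar_decimated(signal):
    """One DecWT level: pairwise average/difference, output is half as long."""
    pairs = range(0, len(signal) - 1, 2)
    approx = [(signal[i] + signal[i + 1]) / 2 for i in pairs]
    detail = [(signal[i] - signal[i + 1]) / 2 for i in pairs]
    return approx, detail


def haar_undecimated(signal):
    """One UWT level: same filters at every sample, length is preserved."""
    n = len(signal)
    approx = [(signal[i] + signal[(i + 1) % n]) / 2 for i in range(n)]
    detail = [(signal[i] - signal[(i + 1) % n]) / 2 for i in range(n)]
    return approx, detail


def shift(signal, k=1):
    """Circularly shift a list to the right by k samples."""
    return signal[-k:] + signal[:-k]


x = [0, 0, 100, 100, 0, 0, 100, 100]

dec_detail = haar_decimated(x)[1]                  # 4 coefficients
dec_detail_shifted = haar_decimated(shift(x))[1]   # changes completely
uwt_detail = haar_undecimated(x)[1]                # 8 coefficients
uwt_detail_shifted = haar_undecimated(shift(x))[1]
```

For this signal the decimated details are all zero before the one-sample shift and alternate between 50 and -50 after it, whereas the undecimated details are simply shifted by one sample. The smaller output of DecWT is also what makes each subsequent level cheaper to compute.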

7. Conclusions

Avoidable visual impairments and blindness can be limited if early disease symptoms are detected and properly treated. Accordingly, periodic screening of candidate patients is essential for early disease diagnosis. Automatic screening systems can help reduce the resulting work load on ophthalmologists. Substantial research efforts were invested in the development of automatic screening systems, yet their performance was found to be dependent on the quality of the processed images. Consequently, the development of RIQA algorithms suitable for automatic real-time systems is becoming of crucial importance. In this work, a no-reference transform-based RIQA algorithm was proposed that is suitable for ARSS. The algorithm comprehensively addresses five main quality issues in retinal images using computationally inexpensive features. Wavelet-based features were used to assess the sharpness, illumination, homogeneity, and field definition of retinal images. Furthermore, the retinal saturation channel was designed to efficiently discriminate between homogeneous and nonhomogeneous retinal images. Nonretinal images were excluded based on color information. Extensive analyses were performed on each of the five quality feature sets, demonstrating their efficiency in the evaluation of their respective quality issues. The final RIQA algorithm uses the concatenated feature sets for overall quality evaluation. Classification results demonstrated superior performance of the presented algorithm compared to other RIQA methods from the literature. Generally, transform-based RIQA algorithms combine the advantages of both generic and segmentation approaches by considering the retina’s structural information while remaining computationally inexpensive. Transform-based algorithms were recently introduced in the field of RIQA and shown to have great potential for such applications.
The analyses performed in this work demonstrate the ability of features derived from the wavelet transform to effectively assess different retinal image-related quality aspects. This suggests that transform-based techniques are highly suitable for real-time RIQA systems. Future work includes the development of an automatic image enhancement algorithm as a subsequent step to the quality evaluation. Specific quality issues within the retinal image are to be automatically enhanced based on the comprehensive assessment performed by the introduced RIQA algorithm. Both the proposed RIQA algorithm and the enhancement procedure are to be integrated within a real-time ARSS.

References

D. Pascolini and S. P. Mariotti, “Global estimates of visual impairment: 2010,” Br. J. Ophthalmol. 96(5), 614–618 (2011). http://dx.doi.org/10.1136/bjophthalmol-2011-300539

J. W. Y. Yau et al., “Global prevalence and major risk factors of diabetic retinopathy,” Diabetes Care 35(3), 556–564 (2012). http://dx.doi.org/10.2337/dc11-1909

H. A. Quigley and A. T. Broman, “The number of people with glaucoma worldwide in 2010 and 2020,” Br. J. Ophthalmol. 90(3), 262–267 (2006). http://dx.doi.org/10.1136/bjo.2005.081224

O. Faust et al., “Algorithms for the automated detection of diabetic retinopathy using digital fundus images: a review,” J. Med. Syst. 36(1), 145–157 (2012). http://dx.doi.org/10.1007/s10916-010-9454-7

A. Sopharak et al., “Automatic microaneurysm detection from non-dilated diabetic retinopathy retinal images,” in Proc. of the World Congress on Engineering, 6–8 (2011).

C. W. Wong et al., “Increased burden of vision impairment and eye diseases in persons with chronic kidney disease-a population-based study,” EBioMedicine 5, 193–197 (2016). http://dx.doi.org/10.1016/j.ebiom.2016.01.023

H. Yu et al., “Automated image quality evaluation of retinal fundus photographs in diabetic retinopathy screening,” in Proc. of Southwest Symp. on Image Analysis and Interpretation (SSIAI), 125–128 (2012).

N. Patton et al., “Retinal image analysis: concepts, applications and potential,” Prog. Retinal Eye Res. 25(1), 99–127 (2006). http://dx.doi.org/10.1016/j.preteyeres.2005.07.001

R. Pires et al., “Retinal image quality analysis for automatic diabetic retinopathy detection,” in Proc. of the 25th SIBGRAPI Conf. on Graphics, Patterns and Images (SIBGRAPI), 229–236 (2012). http://dx.doi.org/10.1109/SIBGRAPI.2012.39

A. G. Marrugo and M. S. Millan, “Retinal image analysis: preprocessing and feature extraction,” J. Phys. Conf. Ser. 274(1), 012039 (2011). http://dx.doi.org/10.1088/1742-6596/274/1/012039

A. Sopharak, B. Uyyanonvara and S. Barman, “Automated microaneurysm detection algorithms applied to diabetic retinopathy retinal images,” Maejo Int. J. Sci. Technol. 7(2), 294 (2013).

T. Teng, M. Lefley and D. Claremont, “Progress towards automated diabetic ocular screening: a review of image analysis and intelligent systems for diabetic retinopathy,” Med. Biol. Eng. Comput. 40(1), 2–13 (2002). http://dx.doi.org/10.1007/BF02347689

S. C. Lee and Y. Wang, “Automatic retinal image quality assessment and enhancement,” Proc. SPIE 3661, 1581–1590 (1999). http://dx.doi.org/10.1117/12.348562

M. Lalonde, L. Gagnon and M. Boucher, “Automatic visual quality assessment in optical fundus images,” in Proc. of Vision Interface, 259–264 (2001).

H. Davis et al., “Vision-based, real-time retinal image quality assessment,” in Proc. of the 22nd IEEE Int. Symp. on Computer-Based Medical Systems (CBMS), 1–6 (2009). http://dx.doi.org/10.1109/CBMS.2009.5255437

J. M. P. Dias, C. M. Oliveira and L. A. da Silva Cruz, “Retinal image quality assessment using generic image quality indicators,” Inf. Fusion 19, 73–90 (2014). http://dx.doi.org/10.1016/j.inffus.2012.08.001

M. Fasih et al., “Retinal image quality assessment using generic features,” Proc. SPIE 9035, 90352Z (2014). http://dx.doi.org/10.1117/12.2043325

M. Niemeijer, M. D. Abramoff and B. van Ginneken, “Image structure clustering for image quality verification of color retina images in diabetic retinopathy screening,” Med. Image Anal. 10(6), 888–898 (2006). http://dx.doi.org/10.1016/j.media.2006.09.006

L. Giancardo et al., “Elliptical local vessel density: a fast and robust quality metric for retinal images,” in Proc. of 30th Annual Int. Conf. of the IEEE Engineering in Medicine and Biology Society (EMBS), 3534–3537 (2008). http://dx.doi.org/10.1109/IEMBS.2008.4649968

T. Kohler et al., “Automatic no-reference quality assessment for retinal fundus images using vessel segmentation,” in Proc. of the IEEE 26th Int. Symp. on Computer-Based Medical Systems (CBMS), 95–100 (2013). http://dx.doi.org/10.1109/CBMS.2013.6627771

D. B. Usher, M. Himaga and M. J. Dumskyj, “Automated assessment of digital fundus image quality using detected vessel area,” in Proc. of Medical Image Understanding and Analysis, British Machine Vision Association (BMVA), 81–84 (2003).

R. A. Welikala et al., “Automated retinal image quality assessment on the UK Biobank dataset for epidemiological studies,” Comput. Biol. Med., 71, 67–76 (2016). http://dx.doi.org/10.1016/j.compbiomed.2016.01.027

A. D. Fleming et al., “Automated assessment of diabetic retinal image quality based on clarity and field definition,” Invest. Ophthalmol. Visual Sci., 47(3), 1120–1125 (2006). http://dx.doi.org/10.1167/iovs.05-1155

A. Hunter et al., “An automated retinal image quality grading algorithm,” in Proc. of the Annual Int. Conf. of the IEEE Engineering in Medicine and Biology Society (EMBC), 5955–5958 (2011). http://dx.doi.org/10.1109/IEMBS.2011.6091472

H. A. Nugroho et al., “Contrast measurement for no-reference retinal image quality assessment,” in Proc. of the 6th Int. Conf. on Information Technology and Electrical Engineering (ICITEE), 1–4 (2014).

D. Veiga et al., “Quality evaluation of digital fundus images through combined measures,” J. Med. Imaging, 1(1), 014001 (2014). http://dx.doi.org/10.1117/1.JMI.1.1.014001

Y. J. Cho and S. Kang, Emerging Technologies for Food Quality and Food Safety Evaluation, CRC Press, Boca Raton, Florida (2011).

J. Paulus et al., “Automated quality assessment of retinal fundus photos,” Int. J. Comput. Assisted Radiol. Surg., 5(6), 557–564 (2010). http://dx.doi.org/10.1007/s11548-010-0479-7

A. D. Fleming et al., “Automated clarity assessment of retinal images using regionally based structural and statistical measures,” Med. Eng. Phys., 34(7), 849–859 (2012). http://dx.doi.org/10.1016/j.medengphy.2011.09.027

U. Sevik et al., “Identification of suitable fundus images using automated quality assessment methods,” J. Biomed. Opt., 19(4), 046006 (2014). http://dx.doi.org/10.1117/1.JBO.19.4.046006

F. Yin et al., “Automatic retinal interest evaluation system (ARIES),” in Proc. of 36th Annual Int. Conf. of the IEEE Engineering in Medicine and Biology Society (EMBC), 162–165 (2014). http://dx.doi.org/10.1109/EMBC.2014.6943554

M. J. Chen and A. C. Bovik, “No-reference image blur assessment using multiscale gradient,” EURASIP J. Image Video Process., 2011(1), 1–11 (2011). http://dx.doi.org/10.1155/2011/790598

E. Dumic, S. Grgic and M. Grgic, “New image-quality measure based on wavelets,” J. Electron. Imaging, 19(1), 011018 (2010). http://dx.doi.org/10.1117/1.3293435

R. Ferzli, L. J. Karam and J. Caviedes, “A robust image sharpness metric based on kurtosis measurement of wavelet coefficients,” in Proc. of Int. Workshop on Video Processing and Quality Metrics for Consumer Electronics (2005).

P. V. Vu and D. M. Chandler, “A fast wavelet-based algorithm for global and local image sharpness estimation,” IEEE Signal Process. Lett., 19(7), 423–426 (2012). http://dx.doi.org/10.1109/LSP.2012.2199980

H. Zhao, B. Fang and Y. Y. Tang, “A no-reference image sharpness estimation based on expectation of wavelet transform coefficients,” in Proc. of the 20th IEEE Int. Conf. on Image Processing (ICIP), 374–378 (2013). http://dx.doi.org/10.1109/ICIP.2013.6738077

S. G. Mallat, “A theory for multiresolution signal decomposition: the wavelet representation,” IEEE Trans. Pattern Anal. Mach. Intell., 11(7), 674–693 (1989). http://dx.doi.org/10.1109/34.192463

E. Matsuyama et al., “A modified undecimated discrete wavelet transform based approach to mammographic image denoising,” J. Digital Imaging, 26(4), 748–758 (2013). http://dx.doi.org/10.1007/s10278-012-9555-6

J. L. Starck, J. Fadili and F. Murtagh, “The undecimated wavelet decomposition and its reconstruction,” IEEE Trans. Image Process., 16(2), 297–309 (2007). http://dx.doi.org/10.1109/TIP.2006.887733

S. R. Nirmala, S. Dandapat and P. K. Bora, “Wavelet weighted blood vessel distortion measure for retinal images,” Biomed. Signal Process. Control, 5(4), 282–291 (2010). http://dx.doi.org/10.1016/j.bspc.2010.06.005

S. R. Nirmala, S. Dandapat and P. K. Bora, “Wavelet weighted distortion measure for retinal images,” Signal Image Video Process., 7(5), 1005–1014 (2013). http://dx.doi.org/10.1007/s11760-012-0290-8

H. Bartling, P. Wanger and L. Martin, “Automated quality evaluation of digital fundus photographs,” Acta Ophthalmol., 87(6), 643–647 (2009). http://dx.doi.org/10.1111/aos.2009.87.issue-6

G. J. Katuwal et al., “Automatic fundus image field detection and quality assessment,” in IEEE Western New York Image Processing Workshop (WNYIPW), 9–13 (2013).

L. S. AbdelHamid et al., “No-reference wavelet based retinal image quality assessment,” in Proc. of the 5th Eccomas Thematic Conf. on Computational Vision and Medical Image Processing (VipIMAGE), 123–130 (2015). http://dx.doi.org/10.1201/b19241-22

Z. Wang et al., “Image quality assessment: from error visibility to structural similarity,” IEEE Trans. Image Process., 13(4), 600–612 (2004). http://dx.doi.org/10.1109/TIP.2003.819861

E. Decenciere et al., “Feedback on a publicly distributed image database: the Messidor database,” Image Analysis and Stereology, 231–234 (2014).

T. Liu, W. Zhang and S. Yan, “A novel image enhancement algorithm based on stationary wavelet transform for infrared thermography to the de-bonding defect in solid rocket motors,” Mech. Syst. Sig. Process., 62, 366–380 (2015). http://dx.doi.org/10.1016/j.ymssp.2015.03.010

E. Imani, H. R. Pourreza and T. Banaee, “Fully automated diabetic retinopathy screening using morphological component analysis,” Comput. Med. Imaging Graphics, 43, 78–88 (2015). http://dx.doi.org/10.1016/j.compmedimag.2015.03.004

R. R. Coifman and M. V. Wickerhauser, “Entropy-based algorithms for best basis selection,” IEEE Trans. Inf. Theory, 38(2), 713–718 (1992). http://dx.doi.org/10.1109/18.119732

M. Foracchia, E. Grisan and A. Ruggeri, “Luminosity and contrast normalization in retinal images,” Med. Image Anal., 9(3), 179–190 (2005). http://dx.doi.org/10.1016/j.media.2004.07.001

J. B. Walsh, “Hypertensive retinopathy. Description, classification and prognosis,” Ophthalmology, 89(10), 1127–1131 (1982). http://dx.doi.org/10.1016/S0161-6420(82)34664-3

H. D. Schubert, “Ocular manifestations of systemic hypertension,” Curr. Opin. Ophthalmol., 9(6), 69–72 (1998). http://dx.doi.org/10.1097/00055735-199812000-00012

T. Walter and J. C. Klein, “Automatic analysis of color fundus photographs and its application to the diagnosis of diabetic retinopathy,” Handbook of Biomedical Image Analysis, 2, 315–368, Kluwer, New York (2005).

H. Jelinek and M. J. Cree, Automated Image Detection of Retinal Pathology, CRC Press, Boca Raton, Florida (2009).

M. D. Fairchild, Color Appearance Models, John Wiley & Sons, Hoboken, New Jersey (2013).

J. Cohen, “A coefficient of agreement for nominal scales,” Educ. Psychol. Meas., 20(1), 37–46 (1960). http://dx.doi.org/10.1177/001316446002000104

F. ter Haar, Automatic Localization of the Optic Disc in Digital Colour Images of the Human Retina, Utrecht University (2005).

M. Hall et al., “The WEKA data mining software: an update,” ACM SIGKDD Explorations Newsletter, 11(1), 10–18 (2009). http://dx.doi.org/10.1145/1656274

N. D. Narvekar and L. J. Karam, “A no-reference perceptual image sharpness metric based on a cumulative probability of blur detection,” in Int. Workshop on Quality of Multimedia Experience (QoMEx), 87–91 (2009).

X. Tang, “Texture information in run-length matrices,” IEEE Trans. Image Process., 7(11), 1602–1609 (1998). http://dx.doi.org/10.1109/83.725367

R. M. Haralick, K. Shanmugam and I. H. Dinstein, “Textural features for image classification,” IEEE Trans. Syst. Man Cybern., (6), 610–621 (1973).

S. Badsha et al., “A new blood vessel extraction technique using edge enhancement and object classification,” J. Digital Imaging, 26(6), 1107–1115 (2013). http://dx.doi.org/10.1007/s10278-013-9585-8

S. Lu, “Accurate and efficient optic disc detection and segmentation by a circular transformation,” IEEE Trans. Med. Imaging, 30(12), 2126–2133 (2011). http://dx.doi.org/10.1109/TMI.2011.2164261

N. M. Salem and A. K. Nandi, “Novel and adaptive contribution of the red channel in pre-processing of colour fundus images,” J. Franklin Inst., 344(3), 243–256 (2007). http://dx.doi.org/10.1016/j.jfranklin.2006.09.001

R. L. De Valois and K. K. De Valois, “A multi-stage color model,” Vision Res., 33(8), 1053–1065 (1993). http://dx.doi.org/10.1016/0042-6989(93)90240-W

Y. Z. Goh et al., “Wavelet-based illumination invariant preprocessing in face recognition,” J. Electron. Imaging, 18(2), 023001 (2009). http://dx.doi.org/10.1117/1.3112004

R. R. A. Bourne, “Ethnicity and ocular imaging,” Eye, 25(3), 297–300 (2011). http://dx.doi.org/10.1038/eye.2010.187

E. Rochtchina et al.,

“Ethnic variability in retinal vessel caliber: a potential source of measurement error from ocular pigmentation?—the Sydney Childhood Eye Study,”

Invest. Ophthalmol. Visual Sci., 49(4), 1362–1366 (2008). http://dx.doi.org/10.1167/iovs.07-0150

A. Calcagni et al., “Multispectral retinal image analysis: a novel non-invasive tool for retinal imaging,” Eye, 25(12), 1562–1569 (2011). http://dx.doi.org/10.1038/eye.2011.202

L. Giancardo et al., “Quality assessment of retinal fundus images using ELVD,” New Developments in Biomedical Engineering, 201–224, IN-TECH (2010).

M. J. Cree and F. J. Herbert, “Image analysis of retinal images,” Medical Image Process., 249–268 (2011). http://dx.doi.org/10.1007/978-1-4419-9779-1

Z. Gao and Y. F. Zheng, “Quality constrained compression using DWT-based image quality metric,” IEEE Trans. Circuits Syst. Video Technol., 18(7), 910–922 (2008). http://dx.doi.org/10.1109/TCSVT.2008.920744

S. Shah and M. D. Levine, “Visual information processing in primate cone pathways. I. A model,” IEEE Trans. Syst. Man Cybern. B, 26(2), 259–274 (1996). http://dx.doi.org/10.1109/3477.485837

P. Feng et al., “Enhancing retinal image by the Contourlet transform,” Pattern Recognit. Lett., 28(4), 516–522 (2007). http://dx.doi.org/10.1016/j.patrec.2006.09.007