|

|

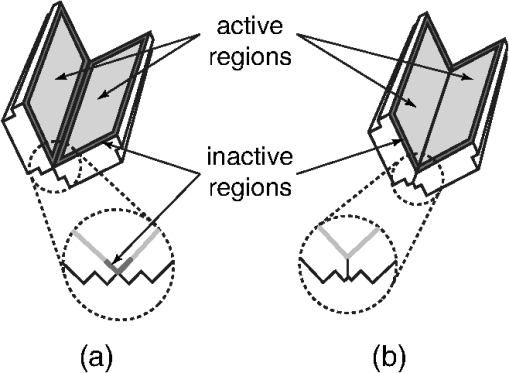

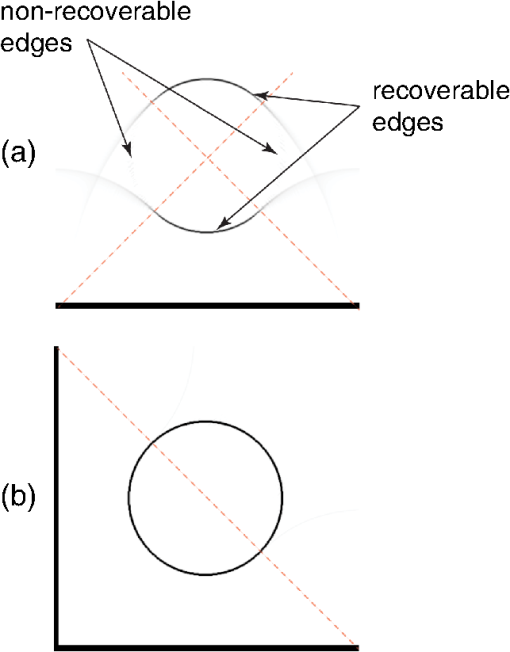

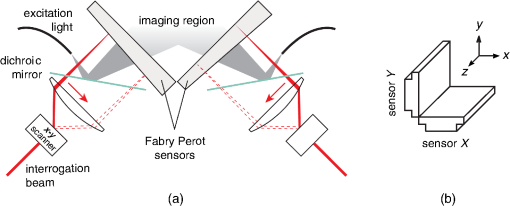

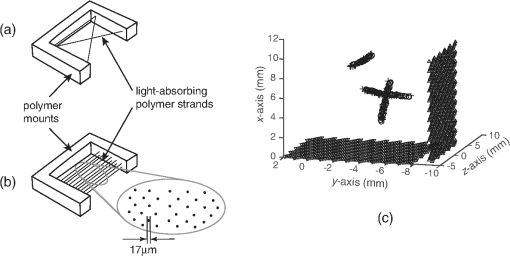

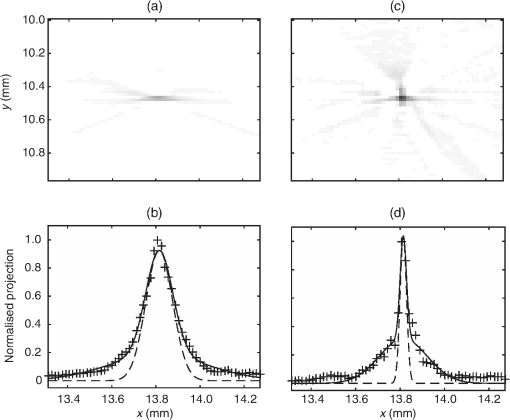

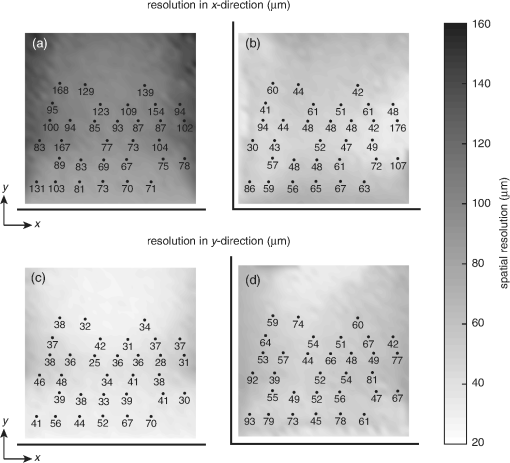

1.IntroductionPhotoacoustic tomography has been demonstrated using several different sensor array geometries, including one-dimensional (1-D) linear, two-dimensional (2-D) planar, circular, cylindrical, spherical, and hemispherical.1–7 While spherical and hemispherical arrays have the advantage of capturing the full acoustic field, the fabrication of such arrays with suitably small detection elements and sufficient sensitivity and bandwidth can be challenging. Fabry–Pérot (FP) sensor arrays,2 on the other hand, retain their sensitivity as the element size—the optically interrogated region—is reduced. However, for simplicity of production and interrogation, FP sensors are planar. When used for photoacoustic tomography, planar arrays suffer from an incomplete view of the acoustic field, known as the limited-aperture or limited-view problem8,9 [Fig. 1(a)], which results in artifacts in the photoacoustic images. Fig. 1Limited aperture problem. (a) With planar arrays, not all the wavefronts reach the sensor plane, resulting in edges close to perpendicular being undetectable. (b) Using a second, orthogonal array to capture the previously undetected components.  Three different strategies have been proposed to reduce the limited-aperture artifacts. One group of techniques introduced additional structure into the initial pressure distribution so that they become “visible” to a planar array. Gateau et al.10 used speckle illumination to pattern the fluence and hence the initial pressure distribution. Wang et al.11 used focused ultrasound to increase the temperature-dependent Grüneisen parameter point by point and thereby add structure to the initial pressure distribution. Recently, Deán-Ben et al.12 proposed the nonlinear combination of many images of sparsely distributed exogenous contrast agents to achieve the same goal. All of these techniques rely on the acquisition of many partial images, which slows the overall acquisition speed. Another group of techniques uses acoustic reflectors13–15 to redirect the acoustic waves that would have otherwise not been detected due to the limited aperture back onto the array. While this does improve the visibility, the range of applications for which this arrangement is suitable is restricted by the presence of the reflectors surrounding the array. The third approach, which does not depend on acquiring more data, is the use of prior information in the image reconstruction, although this remedy is not specific to ameliorating limited-view artifacts. A total-variation penalty term16 has been used to promote piecewise constant features in the image; for example, vessel filtering17 has also been applied to photoacoustic images as a postprocessing step. The challenge here, of course, is having accurate prior information about the target. Finally, Frikel and Quinto18,19 have shown that apodizing the data can reduce the presence of sharp image artifacts, although this will not recover the missing edges. Despite the ingenuity of these various approaches at overcoming the limited-view problem, they are still no substitute for having measured the data directly. This paper investigates the possibility of retaining the advantages of the planar FP sensor but ameliorating the limited-view problem through the use of two planar sensor arrays mounted orthogonally: an L-shaped sensor array [Fig. 1(b)].20,21 By using two orthogonal planar arrays, many of the wavefronts that would not have been detected by a single planar array will be detected. To achieve “full visibility,”8,9 three mutually orthogonal arrays would be required. However, aside from the additional scanner complexity that this would entail, it would restrict the system’s applicability, e.g., to preclinical imaging. It has previously been shown that a similar approach using 1-D linear arrays can result in improved images22–24 or line detectors scanned in an L-shaped pattern,9,25 but these approaches can only produce 2-D photoacoustic images. Here, 3-D images have been obtained using static 2-D arrays. The paper is structured as follows. The construction and calibration of a system to interrogate two orthogonal FP sensors is discussed in Sec. 2. Numerical simulation and experimental measurements investigating the quantitative improvement in terms of resolution of using the L-shaped sensor over using a planar array are presented in Sec. 3. Photoacoustic images of a leaf phantom, demonstrating the improved visibility of features within the imaging volume, and ex-vivo images of a murine flank, demonstrating the feasibility of using this technique to image deep anatomical structures in small animals, are shown in Sec. 4. 2.System ConfigurationA diagram of the L-shaped FP system is shown in Fig. 2. This section describes the system, the registration of the two planar sensors, and the image reconstruction procedures used. Fig. 2Scanner for the L-shaped FP sensor. (a) The L-shaped scanner consists of two orthogonal planar sensors, X and Y, mounted near-perpendicular and interrogated using optical scanners. The photoacoustic excitation light can be transmitted through the sensor for backward-mode imaging. (b) The coordinate system used throughout the paper.  2.1.L-shaped Fabry–Pérot SensorFP sensors consist of mirrors separated by a polymer spacer layer.2 The FP sensor mirrors are dichroic and designed to allow wavelengths in the 600 to 1200-nm range to be transmitted while reflecting the longer wavelengths used for interrogation (1500 to 1600 nm). This allows the photoacoustic excitation light to be introduced through the sensor to achieve backward mode illumination. The FP sensors were interrogated by a focused wavelength tunable laser beam, which was scanned to different spatial locations across the sensor to synthesize array measurements. At each location, the wavelength of the interrogation beam is tuned to the maximum slope of the interferometer transfer function (ITF), measured prior to acquisition. When an acoustic wave passes through the FP sensor, it modulates the spacer thickness, and, through the ITF, the power of the reflected light from the sensor changes proportionately.2 The slope of the ITF determines the sensitivity of the sensor at each location. To correct for variation in sensitivity from location to location, the data from each sensor are normalized to the slope of the ITF. Previous work on FP sensors has concentrated on planar arrays.2 In this work, an L-shaped sensor was developed, consisting of two orthogonal, planar FP sensor arrays. Due to practical fabrication considerations, planar FP sensors typically exhibit an inactive region around their outer edge, where the sensor is sealed to the mounting point of the water tank [Fig. 3(a)]. However, when used in conjunction with a second, orthogonal, sensor, the inactive regions prevent the detection of the acoustic field in a strip close to the vertex between the sensors, which can have a detrimental effect on the reconstructed image.20 To overcome this, new sensors were produced with part of the substrate removed to reduce the insensitive area between the active regions [Fig. 3(b)]. To seal these sensors, a custom tray was produced. The sensors produced for this system have a spacer thickness of , giving a nominal bandwidth of 39 MHz. 2.2.Interrogation SystemTwo identical optical systems were used to interrogate the orthogonal sensors. Each interrogation system consisted of a custom scanner2 that scanned the position of a focused beam from a wavelength tunable laser (1500 to 1610 nm, Yenista Optics Tunics- T100S-HP) across the FP. Two interrogation lasers were used, one for each sensor plane, and the light reflected from each sensor was detected using identical custom photodiodes. Each photodiode consisted of an InGas photodiode with a transimpedance amplifier with a nominal bandwidth of 100 MHz and AC and DC coupled outputs. The DC-coupled outputs from each photodiode were connected to separate 16-bit analogue-to-digital (A/D) cards (NI PCIe-6323) in the PC to record the transfer functions of the sensors, as part of the wavelength-biasing scheme. The AC-coupled outputs from each photodiode were captured on separate channels of a single digitizer card (125 MHz bandwidth, NI PCI-5114) to record the acoustic signals. The sensors were rigidly connected by the water-tight tray and could not be moved relative to each other. To align the scanners to their respective sensors simultaneously, therefore, the positions of the scanners, rather than the sensors, had to be adjusted. To ensure that each scanner was held mechanically stable relative to its sensor, they were mounted on custom mechanical armatures that allowed each system to be positioned through six degrees of freedom relative to the sensors. 2.3.Photoacoustic Excitation SourceThe excitation source for all the phantom studies was a Q-switched Nd:YAG laser (Big Sky, Ultra) operating at 1064 nm (8-ns pulse length). For the mouse imaging in Sec. 4.2, to ensure optimum SNR, two Nd-YAG pumped optical parametric oscillators (OPO’s), a GWU VisIR pumped by a Spectra-Physics Quanta Ray LAB170 and an Innolas Spitlight 600 with pulse widths of 8 and 6 ns, respectively, were used to generate the photoacoustic signals. The two OPOs had pulse energies of 20 and 12 mJ, and a pulse repetition rate of 10 Hz. The output of each OPO source was coupled into the sample in backward mode, through each sensor, via a dichroic mirror, as shown in Fig. 2(a). The beams were expanded so the fluence on the tissue surface was about , well below the maximum permissible exposure. The OPOs were triggered such that the pulses they emitted were synchronized to within of each other using a combination of custom electronics and an Agilent 33522A signal generator with a repetition rate of 10 Hz. Both OPOs were configured to a wavelength of 755 nm. 2.4.Registration ProcedureTo locate the relative positions of the detection points on the two sensor planes in a unified coordinate system, a registation procedure was developed. A registration phantom was produced consisting of three black polymer strands mounted to a custom acrylic mount [Fig. 4(a)]. The phantom was imaged over a region of by each sensor. Care was taken to ensure the registration phantom was thermally stable during the registration procedure. A step size of 50 or was used. The data from each sensor were then reconstructed separately using time reversal.26,27 The sound speed was selected automatically.28 Thresholding was used to generate point clouds from each. A registration algorithm29 was then applied to these point clouds to obtain the rigid transformation between their local, on-plane, coordinate systems. In Fig. 4(c), an example of the point cloud from each sensor aligned to a common coordinate system is shown; the circles relate to data from sensor X and the crosses from sensor Y [see Fig. 2(b)]. The relative positions of the detection points on sensors X and Y are also shown in Fig. 4(c). The accuracy of this alignment procedure was of the order of a pixel width (100 or dependent on the acquisition step size). Fig. 4(a) The phantom, consisting of three black polymer strands on a transparent acrylic mount, used for registration. (b) The phantom with multiple parallel polymer strands used in the resolution measurements (Sec. 3). (c) The positions of the sensor detection points (triangles) and the registration phantom postregistration. The registration phantom is shown as seen by sensor X (circles) and sensor Y (crosses), showing they are well-registered. (The number of sensor points was downsampled for visual clarity.)  2.5.Image ReconstructionTo reconstruct an image using the L-shaped sensor, an iterative time reversal technique was employed,30,31 incorporating the dual constraints of positive initial pressure and zero initial particle velocity. This technique effectively adds successive terms in a Neumann series and will converge on the correct solution given sufficient data. The sequence of images was given by Eq. (1): where is the measured data, T is the time reversal imaging operator, and A is the forward operator mapping the initial acoustic pressure distribution to time series at the detection points. The first image of the initial pressure distribution was calculated using the time reversal operator:Although there was strictly no “visible region” in which an exact image can be obtained due to the absence of a third orthogonal detection plane, the artifacts in the planes orthogonal to the L-shaped sensor were reduced considerably. It is important to note that the image resulting from the iterative reconstruction will not be just the sum of the two images obtained using the planar sensors. Instead, it will have fewer artifacts due to the L-shaped sensor’s improved visibility and the suppression of the artifacts in the iterative reconstruction due to the non-negativity and zero initial particle velocity constraints (note there is no exact noniterative reconstruction for this geometry). The numerical model used for both the forward and time reversal operations was a k-space pseudospectral time-domain propagation model, k-wave.32,33 When using only a single planar sensor, for comparison, a time reversal technique26,27 was used to reconstruct the image. 3.Spatial Resolution3.1.Numerical SimulationsSeveral factors affect the resolution of the images obtained using a FP sensor, including the spatial sampling interval, the effective element size, the bandwidth of the sensor (primarily related to the spacer thickness), and the detection aperture size and configuration.2 The calculated effective element diameter in the case of these sensors is , based on a sensor spacer thickness of and a Gaussian waist diameter of at an acoustic frequency of 25 MHz.34 To investigate the spatial resolution of the L-shaped sensor in comparison to a planar sensor, several simulations were carried out using k-wave.32,33 A numerical phantom consisting of absorbing lines parallel to the -axis was created. As the absorbing lines were parallel to the sensor, a 2-D simulation was sufficient to generate the predicted acoustic fields due to the translational invariance in the -direction. A 2-D domain of was discretized into a grid with a step size of ; a time step of 4 ns was used. The sound speed of the medium was set to the value for water, .35 Sensor arrays consisting of elements with a width of were placed on two sides of the domain. The time series were filtered to be representative of the frequency response of a sensor with a spacer.36,37 The 2-D simulation was then extended into the -dimension by including multiple copies of the time series along the -axis to simulate the effect of a detection array with width 0.4 mm in the -direction. This 3-D dataset was then used to reconstruct an image using iterative time reversal (10 iterations). The central slice through the reconstructed image was extracted, and profiles in the and -direction were taken through each point. A Gaussian was fitted to the profile, and the full-width half-maximum (FWHM) of this Gaussian was determined using the relationship: 3.2.Experimental ResultsThe point spread functions (PSFs) for the planar and L-shaped arrays were measured and compared to the simulation results. A phantom with a cross-section significantly smaller than the expected lateral resolution is required to measure the PSF. Based on previous measurements using a -thick planar FP sensor,2 which demonstrated a lateral resolution of , absorbing polymer strands with a diameter of were judged to meet this criterion. A target was constructed consisting of 32 of these polymer strands mounted within a custom polymer mount designed to avoid blocking the acoustic path between the sensors and the strands [Fig. 4(b)]. The strands were dyed using India ink for contrast. The phantom was imaged photoacoustically over an area of with a step size of . Each dataset was then reconstructed as outlined in Sec. 2.5. Slices of the 3-D images were taken. Figures 5(a) and 5(c) show a region of these images containing a single strand for the planar and L-shaped sensors, respectively. To obtain the resolutions in the and directions, a two term Gaussian model was fitted to the maximum intensity projections of these images (in the and directions, respectively), and the FWHM of the narrower Gaussian was taken as the resolution. This is shown for the -resolution (horizontal resolution) in Figs. 5(b) and 5(d) for the planar and L-shaped sensors, respectively. Maximum intensity projections of the images, rather than profiles along a line through the centre of the feature, were used to ameliorate the noncircular shape of the PSFs and give profiles more suitable for the Gaussian fitting. Fig. 5Spatial resolution measurement method. (a) Cross-sectional image of one absorbing strand obtained with a planar sensor. (b) Vertical maximum intensity projection of image (a), giving the horizontal profile shown by crosses to which a two-term Gaussian was fitted (solid line) and the FWHM of the narrower Gaussian (dashed line) was taken as the resolution. (c) Reconstruction of the same feature as in (a) obtained with the L-shaped sensor. (d) The equivalent to (c) for the L-shaped sensor.  Figure 6 shows the - and -resolutions for the planar and L-shaped sensors, from both the simulation (grayscale map) and the experiment (points with values in microns below). Figure 6(a) shows the resolution in the -direction using data from a planar sensor (sensor X). In this case, the resolution decreases with increasing distance from the sensor, largely due to the limited detection aperture. Figure 6(c) shows the resolution in the -direction using data from planar sensor X. It is notable that it is considerably better than the -direction resolution for the same sensor. This is due, in part, to the fact that the data were recorded with a higher temporal sampling than the equivalent spatial sampling. As the vertical wavenumber components of the initial pressure distribution (those in the direction in this case) are encoded onto the temporal waveforms, a higher temporal bandwidth results in improved spatial resolution in the -direction. Fig. 6Spatial resolution. Simulated results shown in grayscale, and dots with values below show the experimental values in . Domain size . Resolution in the -direction when using (a) planar sensor X and (b) the L-shaped sensor. Resolution in the -direction when using (c) planar sensor X and (d) the L-shaped sensor.  Figure 6(b) shows the -resolution for the L-shaped sensor [compare Fig. 6(a)]. In this case, a more uniform resolution was achieved throughout the field of view, with higher resolution everywhere compared to the planar sensor. However, there is, in general, an increase in the -resolution close to sensor Y. This is another manifestation of the higher temporal than spatial sampling. In this case, the temporal sampling of sensor Y is higher than the spatial sampling of sensor X, so the -resolution improves closer to sensor Y. [And vice-versa: the -resolution improves closer to sensor X, Fig. 6(d).] This effect was also observed by Paltauf et al.9 and Li et al.23 Figure 6(d) shows the -resolution for the L-shaped sensor. Considering the symmetry of the L-shaped sensor, it is no surprise that the simulated resolution map in Fig. 6(d) is the same as Fig. 6(b), flipped about the axis. The variance in the experimental data makes this harder to discern, but in general a similar pattern is observed. Table 1 shows a comparison between the mean values of resolution for the simulation and experimental data. The measured data matches well to the simulation for the L-shaped sensor, although the experimental values are slightly higher than the simulation due to noise in the experimental data. The reduction in the lateral resolution is also significant: the average -resolution in the field of view of the L-shaped sensor was , whereas the average -resolution for the planar sensor in the same region was . Closer to the planar sensor, the resolution achieved more closely matches that of the L-shaped sensor. Finally, the improved isotropy in the resolution of the L-shaped sensor compared to the planer sensor is clear from these averages: the - and -resolutions for the L-shaped sensor are comparable, but for the planar sensor, they differ by more than a factor of 2. While on average, the resolutions are similar in both directions, at many points in the image, some anisotropy will remain due to the difference in the spatial and temporal sampling rates. Table 1Comparison of mean values of resolution, in both x and y directions, for the simulated and experimental data for both sensor geometries.

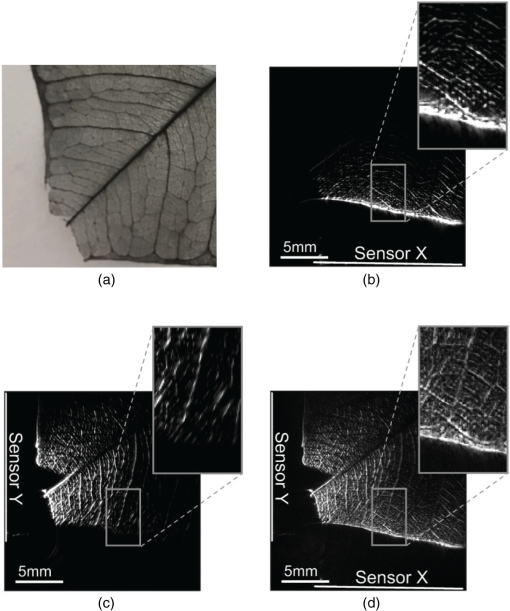

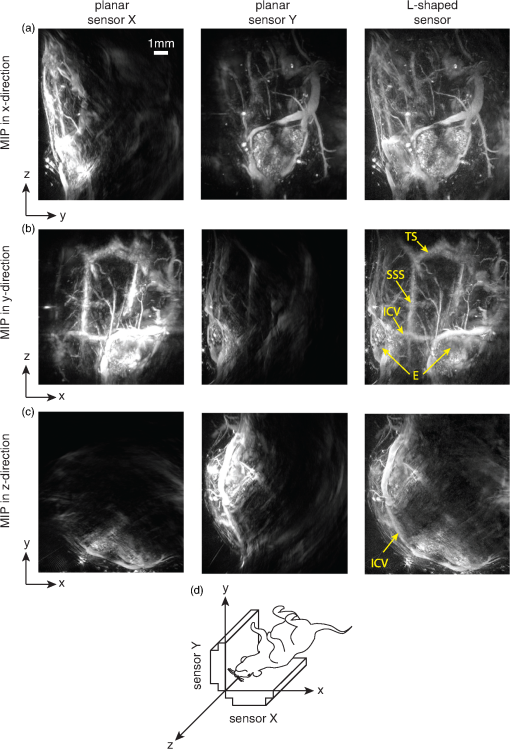

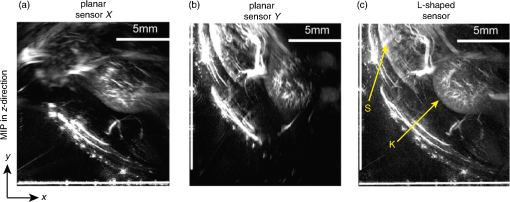

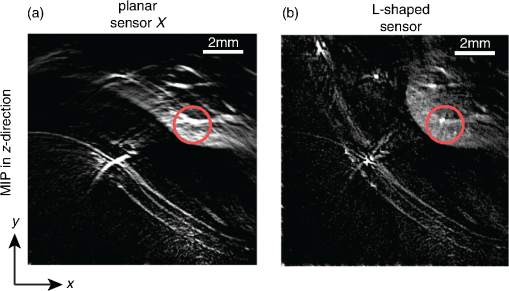

4.Phantom and Ex-Vivo Imaging StudiesTo demonstrate photoacoustic imaging using the L-shaped sensor, images of a leaf phantom and the head and abdominal region of a mouse ex-vivo were obtained. The latter demonstrates the feasibility of using this technique to image deep anatomical structures in small animals. Both targets were acoustically coupled to the sensors using deionized water, and a sound speed of was used for reconstructions. 4.1.Leaf PhantomA skeletal leaf was used as a phantom to demonstrate the ability of the system to provide improved view of features oriented in both the and directions. The contrast was enhanced by submerging the leaf in India ink for an hour, after which it was removed and allowed to dry for 24 h. The slightly curved leaf skeleton was positioned approximately perpendicular to both sensors and orientated so that there were veins visible to one sensor while not being visible to the other sensor and vice-versa. The leaf phantom, shown in Fig. 7(a), was scanned over an area of on each sensor, with a step size of , giving an overall imaged volume of (including the gap region between the sensors, see Fig. 3). Reconstructions were performed on an upscaled grid of . Figures 7(b) and 7(c) show the reconstructions for each independent planar sensor. The data from each sensor were separately reconstructed using time reversal.26,27 Clearly, in both cases, those veins that were perpendicular to the sensor were not recovered, but the veins that ran parallel were recovered. The data from the L-shaped sensor were reconstructed using 10 iterations of an iterative time reversal reconstruction,30,31 as described in Sec. 2.5. Figure 7(d) shows the resulting image, and it is evident that in this case, the majority of the features in both orientations are recovered well. Fig. 7Photoacoustic images of a leaf phantom. (a) Photograph of leaf phantom. (b) Maximum intensity projection (MIP) of image obtained using a planar sensor along lower side. (c) MIP of image obtained using a planar sensor to the left of the domain. (d) MIP of the image obtained using the L-shaped sensor. Inset in (b), (c) and (d): zoomed image sections.  4.2.Mouse ImagingA mouse, postmortem, was placed into the scanner and imaged over a volume around the head region with a step size of . The mouse was orientated such that the top of the head was pointing toward the joint between the sensor planes. All experiments were performed in accordance with the UK Home Office Animals Scientific Procedures Act (1986). Sufficient deionized water was used to couple just the part of the mouse being imaged. Excitation light () was introduced into the volume in backward mode through both sensors. Three reconstructions were completed, one for each planar sensor independently and another for the L-shaped sensor. Figure 8 shows maximum intensity projections of the same region in three different directions: -projection (looking through sensor Y), -projection (looking through sensor X), and -projection (looking along between the sensors). For each view, three images are given, corresponding to that formed using data from sensor X only, sensor Y only, and both sensors X and Y, i.e., the L-shaped sensor. As with the leaf phantom, the reconstructions from the planar sensors show structures that run parallel to the sensor while suppressing perpendicular structures. The L-shaped sensor gives a more complete reconstruction. Several features of the murine cerebral vasculature are visible in this image, including the superior sagittal sinus, transverse sinus, and the inferior cerebral vein, as well as the retinal vasculature of the eyes. Fig. 8Ex-vivo images of mouse head. Row (a) MIPs along the -direction obtained using sensor X, sensor Y, and the L-shaped sensor, respectively. Row (b) MIPs along the -direction obtained using sensor X, sensor Y, and the L-shaped sensor, respectively. Row (c) MIPs along the -direction obtained using sensor X, sensor Y, and the L-shaped sensor, respectively. The schematic (d) shows the respective orientation of the mouse, sensor, and coordinate system. Major vessels and structures in the mouse head are clearly visible, including the transverse sinus (TS), superior sagittal sinus (SSS), inferior cerebral vein (ICV), and the retina of the eyes (E).  For a second demonstration, the abdominal region of the mouse was imaged using each planar sensor independently and the L-shaped sensor. The mouse was positioned such that one side of the abdomen sat between the two sensors. Figure 9 shows three maximum intensity projections in the -direction (looking along between the sensor planes) of the same region. Figures 9(a) and 9(b) use data from a planar sensor X and Y, respectively. Different features—those parallel to the sensor used—are visible in each. When the L-shaped sensor is used, a clear improvement in the sharpness of the structures at depth can be seen. The boundary of the kidney, arrowed in Fig. 9(c), and its internal structure are particularly clear, with the interior vasculature more completely resolved. The spleen is also well recovered, labeled S [Fig. 9(c)]. The surface and deep vasculature are also imaged more clearly in this case, compared to either planar sensor alone. Fig. 9Ex-vivo images of mouse abdomen. (a) MIP along the z-direction, obtained using the data from sensor X. (b) MIP obtained using the data from sensor Y. (c) MIP obtained using the L-shaped sensor (i.e., data from sensors X and Y). Both the kidney (arrowed) and spleen (S) can be clearly seen.  Figure 10 shows slices through the images of the same region of the abdomen obtained by (a) the planar and (b) L-shaped sensors, respectively. First, it is clear that the shape of the kidney is distorted in Fig. 10(a) compared to Fig. 10(b). Second, comparing the structure circled in both images, it is clear from Fig. 10(b) but not from Fig. 10(a) that this is a vessel running in the z-direction (into the image). The reduction in artifacts demonstrates that images produced using the L-shaped sensor are not just the sum of two images from the planar sensors. This exemplifies the type of image improvement that an L-shaped sensor can provide over a planar sensor and shows that it can facilitate higher fidelity images, especially of deeper lying anatomical structures. Fig. 10Slices through ex-vivo kidney image. (a) A slice through the image whose MIP is shown in Fig. 9(a), obtained using planar sensor X. (b) A slice through the image whose MIP is shown in Fig. 9(c), obtained using the L-shaped sensor. The circled feature and the improved recovery of the kidney shape demonstrate the reduction in artifacts that can be achieved using the L-shaped sensor.  5.SummaryThe work presented here details the development of a new system for photoacoustic tomography, combining two planar FP sensors into a single L-shaped sensor. An iterative image reconstruction algorithm based on time reversal was used to fully exploit the improved visibility of the L-shaped sensor. The combination of the L-shaped sensor and iterative reconstruction algorithm was demonstrated to give superior imaging results, compared to planar sensors, through both phantom and ex-vivo experiments. A numerical and experimental study of the image resolution showed that the L-shaped sensor generated images with near-isotropic resolution and much improved uniformity compared to planar sensors. To demonstrate the feasibility of using this sensor to image deep anatomical structures in small animals, the head and abdomen of an ex-vivo mouse was imaged. Features invisible to a single planar sensor were clearly visible, and there was a marked improvement in image sharpness and reduction in artifacts. DisclosuresNo conflicts or competing interests, financial or otherwise, apply to any of the authors. AcknowledgmentsThis work was supported by the Engineering and Physical Sciences Research Council, United Kingdom (EP/K009745/1) and the European Union project FAMOS (FP7 ICT, Contract 317744). ReferencesC. Kim et al.,

“Deeply penetrating in vivo photoacoustic imaging using a clinical ultrasound array system,”

Biomed. Opt. Express, 1

(1), 278

–284

(2010). http://dx.doi.org/10.1364/BOE.1.000278 BOEICL 2156-7085 Google Scholar

E. Zhang et al.,

“Backward-mode multiwavelength photoacoustic scanner using a planar Fabry-Perot polymer film ultrasound sensor for high-resolution three-dimensional imaging of biological tissues,”

Appl. Opt., 47

(4), 561

–577

(2008). http://dx.doi.org/10.1364/AO.47.000561 APOPAI 0003-6935 Google Scholar

D. Razansky et al.,

“Multispectral opto-acoustic tomography of deep-seated fluorescent proteins in vivo,”

Nat. Photonics, 3 412

–417

(2009). http://dx.doi.org/10.1038/nphoton.2009.98 NPAHBY 1749-4885 Google Scholar

A. Buehler et al.,

“Video rate optoacoustic tomography of mouse kidney perfusion,”

Opt. Lett., 35

(14), 2475

–2477

(2010). http://dx.doi.org/10.1364/OL.35.002475 OPLEDP 0146-9592 Google Scholar

H. Brecht et al.,

“Whole-body three-dimensional optoacoustic tomography system for small animals,”

J. Biomed. Opt., 14

(6), 064007

(2009). http://dx.doi.org/10.1117/1.3259361 JBOPFO 1083-3668 Google Scholar

J. Xia et al.,

“Whole-body ring-shaped confocal photoacoustic computed tomography of small animals in vivo,”

J. Biomed. Opt., 17

(5), 050506

(2012). http://dx.doi.org/10.1117/1.JBO.17.5.050506 JBOPFO 1083-3668 Google Scholar

R. Kruger et al.,

“Photoacoustic angiography of the breast,”

Med. Phys., 37

(11), 6096

–6100

(2010). http://dx.doi.org/10.1118/1.3497677 MPHYA6 0094-2405 Google Scholar

Y. Xu et al.,

“Reconstruction in limited-view thermoacoustic tomography,”

Med. Phys., 31

(4), 724

–33

(2004). http://dx.doi.org/10.1118/1.1644531 MPHYA6 0094-2405 Google Scholar

G. Paltauf et al.,

“Experimental evaluation of reconstruction algorithms for limited view photoacoustic tomography with line detectors,”

Inverse Probl., 23 S81

–S94

(2007). http://dx.doi.org/10.1088/0266-5611/23/6/S07 INPEEY 0266-5611 Google Scholar

J. Gateau et al.,

“Improving visibility in photoacoustic imaging using dynamic speckle illuminiation,”

Opt. Lett., 38

(23), 5188

–91

(2013). http://dx.doi.org/10.1364/OL.38.005188 OPLEDP 0146-9592 Google Scholar

L. Wang et al.,

“Ultrasonic-heating-encoded photoacoustic tomography with virtually augmented detection view,”

Optica, 2

(4), 307

–312

(2015). http://dx.doi.org/10.1364/OPTICA.2.000307 Google Scholar

X. Deán-Ben, L. Ding and D. Razansky,

“Dynamic particl enhancement in limited-view optoacoustic tomography,”

(2015). Google Scholar

B. Huang et al.,

“Improving limited- view photoacoustic tomography with an acoustic reflector,”

J. Biomed. Opt., 18

(11), 110505

(2013). http://dx.doi.org/10.1117/1.JBO.18.11.110505 JBOPFO 1083-3668 Google Scholar

G. Li et al.,

“Tripling the detection view of high-frequency linear-array based photoacoustic computed tomography by using two planar acoustic reflectors,”

Quant. Imaging Med. Surg., 5

(1), 57

–62

(2015). http://dx.doi.org/10.3978/j.issn.2223-4292.2014.11.09 Google Scholar

R. Ellwood et al.,

“Photoacoustic imaging using acoustic reflectors to enhance planar arrays,”

J. Biomed. Opt., 19

(12), 126012

(2014). http://dx.doi.org/10.1117/1.JBO.19.12.126012 JBOPFO 1083-3668 Google Scholar

L. Yao and H. Jiang,

“Photoacoustic image reconstruction from few-detector and limited-angle data,”

Biomed. Opt. Express, 2

(9), 2649

–2654

(2011). http://dx.doi.org/10.1364/BOE.2.002649 BOEICL 2156-7085 Google Scholar

T. Orauganti, J. G. Laufer and B. E. Treeby,

“Vessel filtering of photoacoustic images,”

Proc. SPIE, 8581 85811W

(2013). http://dx.doi.org/10.1117/12.2005988 PSISDG 0277-786X Google Scholar

J. Frikel and E. T. Quinto,

“Artifacts in incomplete data tomography with applications to photoacoustic tomography and sonar,”

SIAM J. Appl. Math., 75 703

–725

(2015). http://dx.doi.org/10.1137/140977709 Google Scholar

J. Frikel and E. T. Quinto,

“Characterization and reduction of artifacts in limited angle tomography,”

Inverse Probl., 29

(12), 125007

(2013). http://dx.doi.org/10.1088/0266-5611/29/12/125007 INPEEY 0266-5611 Google Scholar

R. Ellwood et al.,

“Orthogonal Fabry-Pérot sensor array system for minimal-artifact photoacoustic tomography,”

Proc. SPIE, 9323 932312

(2015). http://dx.doi.org/10.1117/12.2081934 PSISDG 0277-786X Google Scholar

R. Ellwood et al.,

“Orthogonal Fabry-Pérot sensors for photoacoustic tomography,”

Proc. SPIE, 9708 97082N

(2016). http://dx.doi.org/10.1117/12.2212766 PSISDG 0277-786X Google Scholar

M. Schwarz, A. Buehler and V. Ntziachristos,

“Isotropic high resolution optoacoustic imaging with linear detector arrays in bi-directional scanning,”

J. Biophotonics, 8

(1–2), 60

–70

(2015). http://dx.doi.org/10.1002/jbio.201400021 Google Scholar

G. Li et al.,

“Multiview Hilbert transformation for full-view photoacoustic computed tomography using a linear array,”

J. Biomed. Opt., 20

(6), 066010

(2015). http://dx.doi.org/10.1117/1.JBO.20.6.066010 JBOPFO 1083-3668 Google Scholar

W. Shu et al.,

“Broadening the detection view of 2D photoacoustic tomography using two linear array transducers,”

Opt. Express, 24

(12), 12755

–12768

(2016). http://dx.doi.org/10.1364/OE.24.012755 OPEXFF 1094-4087 Google Scholar

G. Paltauf et al.,

“Three-dimensional photoacoustic tomography using acoustic line detectors,”

Proc. SPIE, 6437 64370N

(2007). http://dx.doi.org/10.1117/12.700318 PSISDG 0277-786X Google Scholar

Y. Xu and L. Wang,

“Time reversal and its application to tomography with diffracting sources,”

Phys. Rev. Lett., 92

(3), 3

–6

(2004). http://dx.doi.org/10.1103/PhysRevLett.92.033902 PRLTAO 0031-9007 Google Scholar

P. Burgholzer et al.,

“Exact and approximative imaging methods for photoacoustic tomography using an arbitrary detection surface,”

Phys. Rev. E, 75

(4), 046706

(2007). http://dx.doi.org/10.1103/PhysRevE.75.046706 Google Scholar

B. Treeby et al.,

“Automatic sound speed selection in photoacoustic image reconstruction using an autofocus approach,”

J. Biomed. Opt., 16

(9), 090501

(2011). http://dx.doi.org/10.1117/1.3619139 JBOPFO 1083-3668 Google Scholar

A. Myronenko, X. Song and M. Carreira-Perpinan,

“Non-rigid point set registration: coherent point drift,”

in Neural Information Processing Systems,

1009

–1016

(2006). Google Scholar

G. Paltauf et al.,

“Iterative reconstruction algorithm for optoacoustic imaging,”

J. Acoust. Soc. Am., 112

(4), 1536

–1544

(2002). http://dx.doi.org/10.1121/1.1501898 Google Scholar

P. Stefanov and G. Uhlmann,

“Thermoacoustic tomography with variable sound speed,”

Inverse Probl., 25

(7), 075011

(2009). http://dx.doi.org/10.1088/0266-5611/25/7/075011 INPEEY 0266-5611 Google Scholar

B. E. Treeby and B. T. Cox,

“k-Wave Matlab toolbox,”

(2013) www.k-wave.org January ). 2013). Google Scholar

B. E. Treeby and B. T. Cox,

“k-Wave: MATLAB toolbox for the simulation and reconstruction of photoacoustic wave fields,”

J. Biomed. Opt., 15

(2), 021314

(2010). http://dx.doi.org/10.1117/1.3360308 JBOPFO 1083-3668 Google Scholar

B. Cox and P. Beard,

“The frequency-dependent directivity of a planar fabry-perot polymer film ultrasound sensor,”

IEEE Trans. Ultrason. Ferroelect. Freq. Control, 54

(2), 394

–404

(2007). http://dx.doi.org/10.1109/TUFFC.2007.253 Google Scholar

T. L. Szabo, Diagnostic Ultrasound Imaging: Inside Out, 785 2nd ed.Elsevier Academic Press, Oxford, Amsterdam, and San Diego

(2014). Google Scholar

P. Beard and T. Mills,

“Extrinsic optical-fiber ultrasound sensor using a thin polymer film as a low-finesse Fabry-Perot interferometer,”

Appl. Opt., 35

(4), 663

–675

(1996). http://dx.doi.org/10.1364/AO.35.000663 APOPAI 0003-6935 Google Scholar

P. Beard, F. Perennes and T. Mills,

“Transduction mechanisms of the Fabry-Perot polymer film sensing concept for wideband ultrasound detection,”

IEEE Trans. Ultrason., Ferroelect. Freq. Control, 46

(6), 1575

–1582

(1999). http://dx.doi.org/10.1109/58.808883 Google Scholar

|