|

|

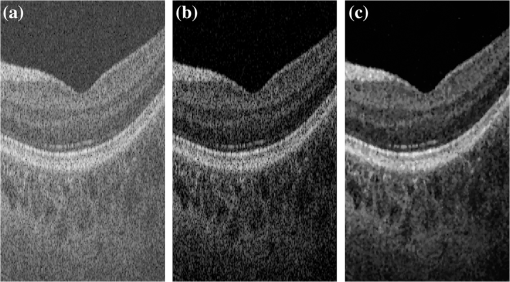

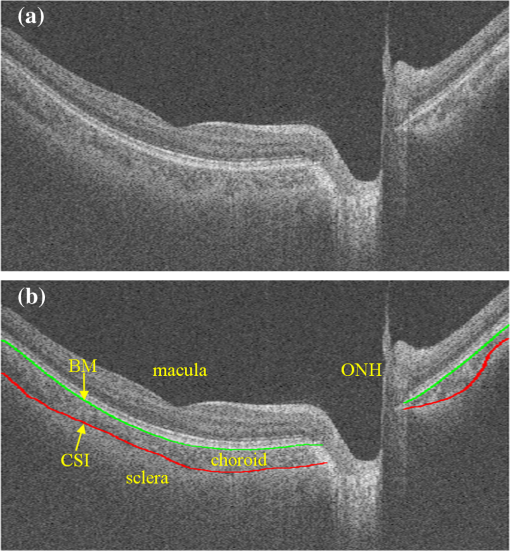

1.IntroductionThe human choroid is a complex vascular structure lying between the retina and the sclera. It provides oxygen and nourishment to the outer layers of the retina, including the photoreceptor cells crucial to visual function.1 The choroid morphology is relevant to many factors, such as age, gender, axial length, and intraocular pressure in healthy eyes.2,3 Changes in the choroid, often manifested as changes in thickness and volume, are also associated with various ocular disorders, such as glaucoma,4 high myopia,5 age-related macular degeneration,6 central serous chorioretinopathy,7 and Vogt–Koyanagi–Harada disease.8 Accurate quantification of the choroid thickness or volume is of great interest in pathophysiology studies of these diseases. Recent development of optical coherence tomography (OCT) has made several techniques available for high-speed and in vivo cross-sectional imaging of the choroid structure. The enhanced depth imaging OCT (EDI-OCT) obtains high signal strength by placing the “zero delay line” near the outer retina and choroid and enhances the signal-to-noise ratio (SNR) by averaging high number of B-scans.9 High-definition OCT (HD-OCT) can also be achieved by averaging selected high-quality B-scans in conventional 840-nm spectral domain OCT.3 However, in both EDI-OCT and HD-OCT, the requirement of averaging limits the number of B-scans finally obtained. Therefore, the choroid volume cannot be accurately calculated. Another recently introduced technique is the swept-source OCT (SS-OCT) with a central wavelength near .10 The longer wavelength allows better penetration through the deeper layers. As a result, a three-dimensional (3-D) volume showing both the retina and the entire choroid can be obtained. Along with the improved scanning speed, the wide-view scanning can include both the optical nerve head (ONH) and the macula. Two B-scans obtained by 1050-nm SS-OCT are shown in Fig. 1. Compared to EDI-OCT and HD-OCT, the 3-D image has higher level of speckle noise, which poses more difficulty for accurate segmentation. Fig. 1B-scan of 1050-nm wide-view SS-OCT image: (a) an original B-scan from SS-OCT image volume and (b) the same B-scan with several structure manually labeled.  The goal of automatic choroid segmentation is to delineate the Bruch’s membrane (BM) as the upper boundary and the choroidal–scleral interface (CSI) as the lower boundary, as shown in Fig. 1. The BM is the lower boundary of the retinal pigment epithelium (RPE) with high reflectivity, which is often detected by finding the maxima in intensity or gradient. On the contrary, the CSI can only be observed as the boundary between the textured region of choroid and the uniform region of sclera. It is often weak, broken, and open to subjective interpretation. Hence, the segmentation of CSI is far more challenging. Some studies on automatic choroid segmentation have been carried out based on the aforementioned choroid imaging techniques. For EDI-OCT images, Tian et al.11 detected “valley pixels” as local minima of A-scans and found CSI as the shortest path using Dijkstra’s algorithm. Alonso-Caneiro et al.12 calculated a dual brightness probability gradient following image enhancement, which was used to form the weights to search for the CSI. Vupparaboina et al.13 measured the structural dissimilarity between choroid and sclera by structural similarity index and achieved final delineation by thresholding and tensor voting. Danesh et al.14 employed the wavelet-based features to construct a Gaussian mixture model, which was then used in a graph cut method for segmentation of CSI. Chen et al.15 used a graph-based method for delineating CSI in which both the searching space and the cost were obtained based on vasculature characteristics. Chen et al.16 also proposed a method based on gradual intensity distance for choroid segmentation in HD-OCT images. These are all two-dimensional (2-D) methods applied on images with high SNR. No 3-D context is used in segmentation, and most of them do not work well for images with low SNR. Studies on 3-D OCT volumes are limited. Hu et al.17 applied a 3-D multistage multisurface graph search method for choroid segmentation of highly anisotropic and high-SNR OCT volumes obtained by B-scan averaging. Kajic et al.18 used a statistical model based on texture and shape to automatically detect the choroid boundaries in the 1060-nm OCT B-scans of both healthy and pathological eyes. However, the method required extensive training. Zhang et al.19 proposed a 3-D method by which the CSI was identified by fitting a thin plate spline to the lower boundary of segmented choroidal vessels. It was later validated on both EDI-OCT and SS-OCT volumes by Philip et al.20 Zhang et al.21 also proposed a method based on combined graph-cut-graph-search method and yielded accurate segmentation results for choroid surface segmentation in SS-OCT volumes. But the 3-D graph construction was complicated and the method was computationally expensive. In addition, manufacturer-provided segmentation software are also available for choroid thickness analysis,22 but failures are often observed23 and manual correction is needed. In this paper, we propose a 3-D automatic choroid segmentation method for 1050-nm wide-view SS-OCT volumes. The 3-D context is taken into consideration in the segmentation. A choroid thickness map and the choroid volume can be calculated from the segmentation results. The choroid both below the fovea and near the ONH can be segmented, which gives more information than methods based on the conventional macula-centered OCT images. The method works with images with relatively high speckle noise level. In preprocessing, we propose to use linear mapping and a cross bilateral filtering to enhance the image quality. In the segmentation, modified Canny operators are used to detect two auxiliary boundaries. Based on the second boundary, the ONH is detected and the corresponding A-scans are excluded in the following procedures. The 3-D multiresolution graph search method is applied to achieve segmentation of the BM and CSI. For CSI, we propose the wavelet-based gradual intensity distance cost. Combined with the conventional gradient-based cost, accurate segmentation is obtained, even with weak and broken boundaries. The segmentation accuracy is quantitatively evaluated by comparing with manual segmentation and by reproducibility test. 2.Materials and Methods3-D OCT volumes were acquired using a Topcon Swept Source OCT scanner at 1050 nm (DRI OCT-1 Atlantis, Topcon, Tokyo), with lateral resolution and axial resolution. The image size is , which corresponds to a volume. In this paper, we define as the width index, as the B-scan index, and as the depth index, and a B-scan image is in the plane. Three data groups were constructed. Both eyes from 16 normal subjects were scanned twice within 10-min intervals, and formed groups A and B for reproducibility evaluation. Another 10 eyes from 5 normal subjects were scanned once, and formed group C for parameter optimization. For each image volume in groups A and C, 10 B-scans with even spacing (25 B-scans apart) were manually segmented by two experts. The average position of the manual delineation was used as ground truth (GT) for BM and CSI. The study was approved by the Institutional Review Board of Soochow University, and informed consent was obtained from all subjects. 2.1.Image EnhancementTo work with an image with relatively high speckle noise level, two procedures are used to enhance the image quality.

Part of a B-scan before and after the two-step image enhancement is shown in Fig. 2. 2.2.Optical Nerve Head DetectionInside the ONH, most of the retinal layers, including RPE and CSI, are discontinuous. Therefore we detect the region first and remove it from the volume of interest (VOI). This also involves two steps:

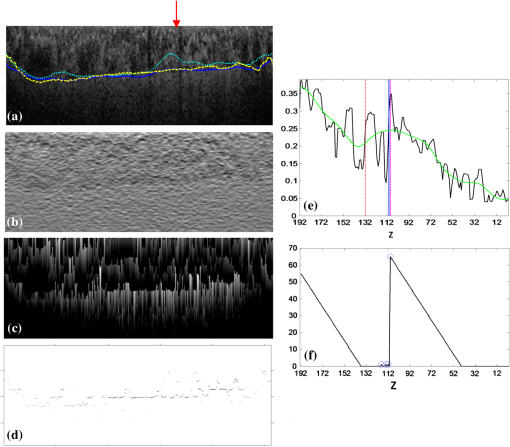

Fig. 3Detection of two boundaries using modified Canny edge detectors: (a) edge map to detect surface 1, (b) edge map to detect surface 2. Note that vertical edges do not present in . (c) Detection results of surfaced 1 and 2, shown as red and green curves. Vertical yellow lines indicate the detected ONH region.  Fig. 4ONH detection: (a) 2-D map obtained from detecting absence of surface 2, (b) after removing small regions and hole filling, and (c) final result obtained using convex hull.  Finally, all the discontinuities in surface 2, including ONH, are removed by linear interpolation. This surface, as shown in Fig. 3(c), is used to constrain the BM segmentation in the next section. 2.3.BM and CSI SegmentationThe BM is a boundary with bright-to-dark transition at the bottom of the retina. Although the CSI is not a clear boundary, in most places it appears as the bottom of large choroidal vessels. Therefore, we get the initial segmentation by assuming it as a dark-to-bright boundary. Since the BM and CSI are less notable transitions than surfaces 1 and 2, a 3-D multiresolution graph search method is used for the segmentation. By defining smoothness constraint in both lateral directions and finding the optimal segmenting surface in the whole volume, the 3-D context is fully utilized. This is especially beneficial for CSI with broken and weak boundaries. The 3-D graph search algorithm for optimal surface segmentation proposed by Li et al.29 and its variations30–32 were successfully applied to retinal layer segmentation. The 3-D image volume is mapped into a graph, with each voxel corresponding to a node. Nodes are connected by intracolumn and intercolumn arcs. The smoothing constraints and controlling the change of surface position between neighboring A-scans are used to construct the intercolumn arcs. A cost function inversely related to the likelihood that the voxel belongs to the detected surface is assigned to each voxel, and then converted to the weight of each node. Detecting a surface with minimum total cost is converted into finding a minimum weight closed set in this node-weighted digraph, which can then be solved in polynomial time by computing a minimum s-t cut in a derived arc-weighted digraph. The multiresolution graph search method24 is used in our algorithm to improve the efficiency. The 3-D OCT scan is downsampled by a factor of 2 three times in the -direction to form four resolution levels. The search for the surface in higher resolution is constrained in a subimage near the position of the surface detected in the next lower resolution. For BM, the cost function is calculated as the inverse of the 3-D Sobel gradient in the -direction while for CSI, is used. The costs are set to 0 for all A-scans inside the detected ONH region, so that the detected surface near ONH is not affected by the structures inside it. To obtain a smooth surface, the minimal smoothness constraints are used for all resolutions. The previously detected surfaces are used to constrain the searching space. The BM is detected in a subimage below surface 2. Based on the position of BM, the image is flattened, and the CSI is detected in a subimage below the flattened BM. The segmentation results are smoothed using cubic splines. 2.4.CSI RefinementBy using the gradient-based cost, the BM can be successfully segmented, but the results of CSI are not accurate enough. Due to the complex structure of choroidal vessels, the detected surface may be attracted by vessel edges inside the choroid. Therefore the initial detection results tend to locate above the true boundary. In this section, we propose to incorporate a region-based cost into the graph search and refine the CSI segmentation. The gradual intensity distance measure was first proposed by Chen et al.16 for segmenting CSI in 2-D HD-OCT images. It was observed that below the CSI, the intensity level of sclera decreased gradually. Hence the position where this decreasing started could be marked as the CSI. However, this measure is not directly applicable for 3-D SS-OCT volumes, because the high-level noise destroys the monotonic decreasing pattern of sclera intensity. Nevertheless, we find that the general trend of decreasing can be recovered to some extent using wavelet approximations and propose a wavelet-based modified gradual intensity distance (WGID) cost for the refinement of CSI segmentation. Define the VOI of CSI segmentation as the subimage below BM in the flattened image excluding the ONH. Use as a simplified notation of an A-scan in the VOI. Its discrete wavelet transform can be written as where and are the scaling function and wavelet function, respectively, and are the scaling and translation parameters, respectively, and are the corresponding approximation coefficients and detail coefficients, and is the decomposition level. The wavelet approximation of is obtained by setting all the detail coefficients , so that As the scaling function is designed to be a low-frequency smooth function, and the wavelet function is a high-frequency function that oscillates around zero, is a smoothed approximation of , that keeps the general trend and removes the fluctuations of the signal, as shown in Fig. 5(e). The smoothness is affected by the type of wavelet used and the decomposition level . In this paper, we choose the wavelet from the biorthogonal wavelet family with linear-phase filters, which is often used in image processing. The wavelet “bior5.5” and are chosen by minimizing the average difference between the maximum position of WGID and the GT in data group C.Fig. 5Illustrations of the WGID cost: (a) the flattened and cropped subimage below BM with segmentation results overlaid. The cyan dotted curve, blue solid curve, and yellow dashed curve are the initial segmentation by gradient-based cost, the final segmentation using both gradient-based and WGID-based cost, and the manual segmentation, respectively. (b) The gradient-based cost image, (c) the WGID image, (d) the WGID-based cost image, (e) an A-scan from (a), whose position is indicated by the red arrow. The black and green curves represent the original signal and the wavelet approximation, respectively. The red dotted, blue solid, and magenta dashed vertical lines indicate the initial segmentation by gradient-based cost, the maximum WGID position and the manual segmentation, respectively. (f) The black signal is the WGID calculated from (e), corresponding to one column in (c). The blue circles represent the local maxima below initial segmentation that form (d).  Assuming at the bottom of the image, the WGID for an A-scan is calculated as The WGID of a B-scan is shown in Fig. 5(c). To improve robustness, instead of only picking the value at the maximum position as the cost, as proposed in Ref. 16, we retain all the local maxima below the initial detected CSI to form the WGID-based cost. By definition, the local maximum is the point where the WGID changes from positive values to zero, as shown in Fig. 5(f). Therefore for an A-scan at The WGID-based cost is combined with the gradient-based cost in graph search for refinement of the CSI. The weight is set as , which is optimized by minimizing the average absolute distance between detected CSI and the GT in data group C.The initial and final segmentation results of CSI for a B-scan are shown in Fig. 5(a). The gradient-based cost and the WGID-based cost for the B-scan are shown in Figs. 5(b) and 5(d), respectively. Note that the gradient-based cost is weak and noisy, so that the initial segmentation deviates from the true boundary while the WGID-based cost is strong near the true boundary. 2.5.Evaluation MetricsFor images from the 32 eyes from 16 normal subjects in data group A, two trained experts manually delineated the BM and CSI in the B-scans using the ITK-SNAP Software.33 The experts might refer to adjacent B-scans when they were unsure about the position. The average position of the two manual delineations is used as GT. The absolute boundary difference (ABD) and signed boundary difference (SBD) of BM and CSI, the thickness difference (TD), and the Dice similarity coefficient (DSC), computed as in Eq. (13), are calculated. The errors of the automatic segmentation are compared with the interobserver variability. Paired t-tests are conducted and is considered statistically significant. where and refer to the set of choroid voxels of automatic segmentation results and the GT, and means the size of voxel set.Reproducibility of the volume quantification is conducted between data groups A and B for the 32 eyes. The position of the fovea is automatically detected by finding the lowest point of surface 1 in the flattened image, within a certain range of the center of detected ONH. We define three circles with 1, 3, and 6 mm diameters centered at the fovea, as in the early treatment diabetic retinopathy chart.34 The choroid volumes in the center circle and the two rings, calculated from the same eye, are compared. The correlation coefficient (CC), the coefficient of determination of linear regression (), and the relative volume difference (RVD), calculated as are used for quantitative evaluation of reproducibility. The Bland–Altman charts are also plotted to show the agreement between two groups of results.3.Results and Discussion3.1.Quantitative EvaluationThe mean and standard deviation of ABD and SBD of BM and CSI, the mean TD, and the mean DSC between final segmentation results and GT are shown in Table 1, and compared with those of the initial segmentation results and the interobserver variability. The mean ABD of BM is , and the mean ABD of CSI is . The ABD of BM is smaller than that of CSI, for both automatic and manual segmentation, which agrees with the fact that BM is much more distinguishable than CSI. The mean TD is . The ABD of BM, ABD of CSI, and TD of the automatic segmentation are all smaller than the interobserver difference. The DSC of the automatic segmentation is and is larger than the interobserver DSC. The -values of these four metrics show statistical significance. The SBD values suggest the automatic segmentation is slightly above the GT for BM and slightly below the GT for CSI. Compared with the initialization results, all the evaluation metrics are improved by the refinement with WGID-based cost. Especially, the SBD values show that the initialization by gradient-based cost is above the GT but is corrected in the final segmentation. The slightly positive SBD of final segmentation is largely caused by discrepancies with manual delineations in segmenting weak parts of the CSI. Table 1The mean and standard deviation of ABD and SBD of BM and CSI, the mean TD, and the mean DSC between initial segmentation results, final segmentation results and GT, and between two observers.

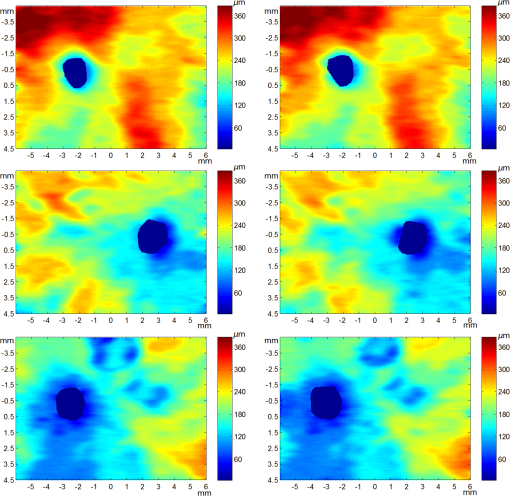

The mean and standard deviation of RVD, the CC, and of linear regression of the volumes obtained from repeated scans for the 1-mm center circle, 1- to 3-mm ring, and 3- to 6-mm ring, are shown in Table 2. High agreement can be observed. The RVD values are all smaller than 4%, the CC values are all larger than 0.97, and the values are all larger than 0.95. The Bland–Altman charts plotted in Fig. 6 also show that the difference between two groups of choroid volumes is small. Table 2Mean and standard deviation of relative choroid volume difference (RVD), CC, and coefficient of determination (R2) between repeated scans for 1-mm center circle, 1- to 3-mm ring, and 3- to 6-mm ring.

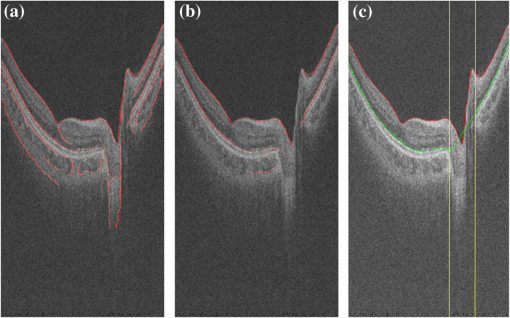

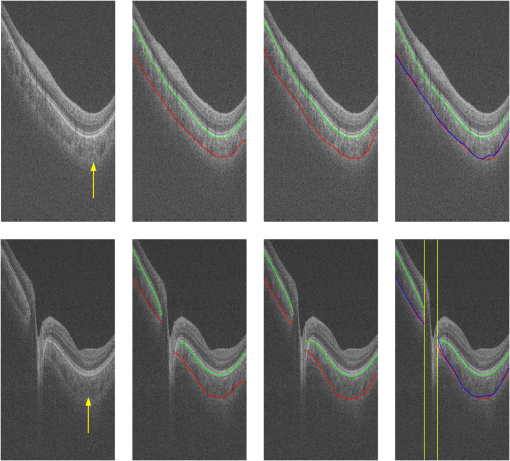

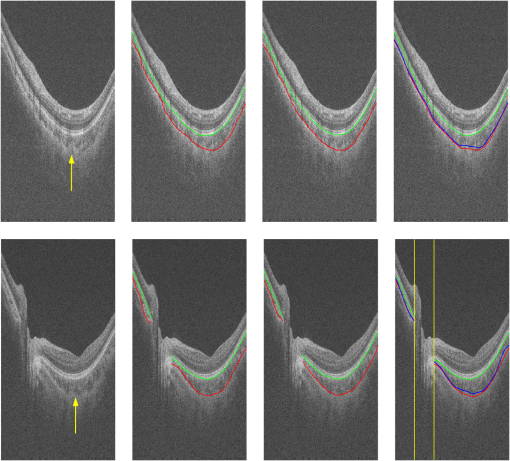

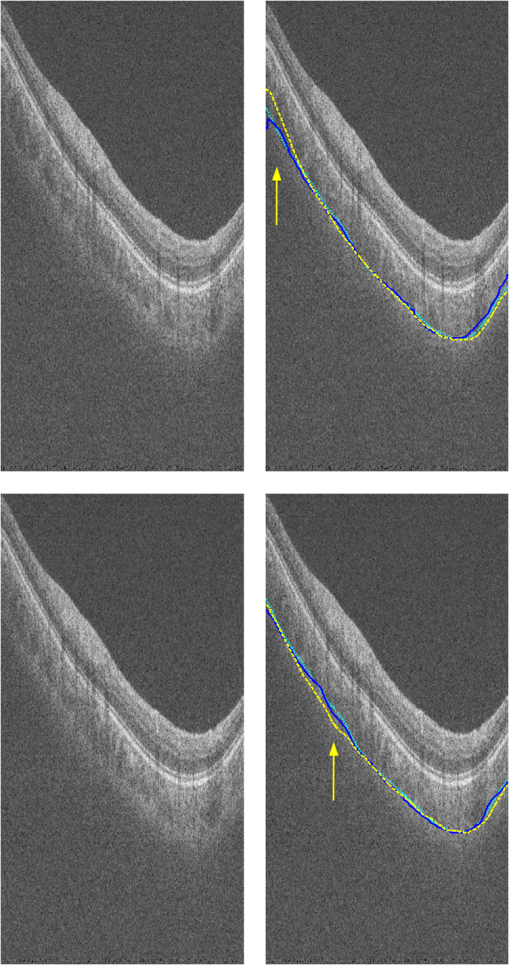

3.2.Qualitative EvaluationSegmentation results overlaid on original B-scans are shown in Figs. 7 and 8 for two eyes, one with thick choroid and the other with thin choroid. Two B-scans with and without the fovea and ONH are shown for each eye. In the weak parts of CSI, as indicated by yellow arrows, both the difference between observers and the difference between automatic and manual segmentations are relatively higher. Compared with initialization results, the final segmentation is more close to the manual delineation. Fig. 7Segmentation results of an eye with thick choroid. Green and red curves refer to BM and CSI. Blue curves refer to the initialization of CSI. Yellow vertical lines refer to start and end of ONH. First column: original B-scans. Second and third columns: two manual segmentations. Fourth column: automatic results.  Fig. 8Segmentation results of an eye with thin choroid. Green and red curves refer to BM and CSI. Blue curves refer to the initialization of CSI. Yellow vertical lines refer to start and end of ONH. First column: original B-scans. Second and third columns: two manual segmentations. Fourth column: automatic results.  Choroid thickness maps can be plotted according to automatic segmentation results. Six thickness maps of repeated scans from three eyes with large, medium, and small average choroid thickness are shown in Fig. 9. High resemblance can be observed from maps for the same eye, again proving the reproducibility of the proposed method. 3.3.Comparison to Two-Dimensional MethodsAs the existing methods were tested on different datasets with different scanning protocols and imaging resolutions, we are unable to compare with their results based on the reported errors. What is more, the intensity of RPE is usually the highest for EDI-OCT images, while not the case for SS-OCT images, and thus the BM segmentation for EDI-OCT images are not applicable for our dataset. Therefore, we only implemented and tested some 2-D CSI segmentation methods for comparison. As stated before, many methods designed for 2-D EDI-OCT images fail for our dataset with low SNR. For example, by Tian’s method,11 the true CSI boundary points will be dominated by the “valley points” caused by noise. Because the method only uses location information but no intensity/gradient information in recovering the shortest path, the results will be much deviated from the true boundary. By Alonso-Caneiro et al.’s method,12 the image enhancement essential for highlighting the CSI boundary will further magnify the noise, and thus results in very noisy cost functions. By Chen’s method,16 the gradual intensity distance below CSI will be corrupted by noise, as has been shown in Fig. 5(e). One feasible method is that of Ref. 15, designed for EDI-OCT B-scans with five times averaging, which have lower SNR. It is also a graph-based method but with different cost function. In testing, the same VOI undersegmented BM and excluding ONH is used for the searching of CSI. As tested, the 2-D method results in ABD of , which is statistically significantly larger than the proposed method (). The SBD is , indicating their results lie in some distance below the GT in general. Figure 10 shows the segmentation results in two consecutive B-scans. Notice that the 2-D method may be accurate in one B-scan, but not so in another. This is caused by the subtle difference between the two consecutive B-scans. While with our 3-D method, the segmentations are accurate in both B-scans, due to the constraints applied to 3-D neighbors. Fig. 10Comparison of CSI segmentation results with that of Ref. 15 for two consecutive B-scans. Cyan dotted curves refer to the results of proposed method. Yellow dashed curves refer to the results of Ref. 15. Blue solid curves refer to the GT. Yellow arrows point to the locations where the 2-D method results in errors due to variation between B-scans.  4.ConclusionsWe have presented an automated 3-D choroid segmentation method for 1050-nm wide-view SS-OCT image volumes with low SNR. Effective methods for image quality enhancement and for ONH removal are proposed. Both gradient-based and region-based costs are used in the graph-based multiresolution method to detect the CSI with weak or broken boundaries. Using wavelet approximation, the gradual intensity distance measure originally proposed for high quality HD-OCT image segmentation is adopted to work successfully for SS-OCT images. The segmentation results are evaluated by comparison with manual segmentation and by a reproducibility test. The segmentation error is statistically significantly smaller than the interobserver variability. The reproducibility of volume quantification is high. Both quantitative and qualitative analyses show that the proposed method achieved good segmentation performance. Comparison to existing methods proves the superiority of the proposed method where 3-D context is considered. With the method, choroid thickness and volume both below the fovea and near the ONH can be quantitatively analyzed. Currently the method is only tested on normal eyes. For eyes with pathology, several complications may add to the challenge of choroid segmentation. First, the RPE may be deformed, and relaxation of the smoothness constraint in graph search may be needed, as suggested in Ref. 24. Second, the CSI boundary may be further weakened. Other large lesions inside the retina and the choroid will also affect the segmentation accuracy. We will test the performance of the proposed method on pathological eyes and investigate the improvement in the future. Another limitation of the method lies in the detection of ONH. The termination in the upper boundary of photoreceptor/RPE complex is slightly different than the definition of neural canal opening (NCO), which is the termination of the RPE. By detecting the NCO, the ONH can be delineated more precisely, which will help both choroid segmentation nearby and other quantifications such as cup-disc ratio calculation. AcknowledgmentsThis work has been supported by the National Basic Research Program of China (973 Program) under Grant No. 2014CB748600, the National Natural Science Foundation of China (NSFC) under Grants Nos. 81371629, 61401293, 61401294, 81401451, and 81401472, and the Natural Science Foundation of the Jiangsu Province under Grant No. BK20140052. ReferencesD. L. Nickla and J. Wallman,

“The multifunctional choroid,”

Prog. Retinal Eye Res., 29 144

–168

(2010). http://dx.doi.org/10.1016/j.preteyeres.2009.12.002 PRTRES 1350-9462 Google Scholar

X. Ding et al.,

“Choroidal thickness in healthy Chinese subjects,”

Invest. Ophthalmol. Visual Sci., 52 9555

–9560

(2011). http://dx.doi.org/10.1167/iovs.11-8076 IOVSDA 0146-0404 Google Scholar

V. Manjunath et al.,

“Choroidal thickness in normal eyes measured using Cirrus HD optical coherence tomography,”

Am. J. Ophthalmol., 150 325

–329

(2010). http://dx.doi.org/10.1016/j.ajo.2010.04.018 AJOPAA 0002-9394 Google Scholar

Z. Q. Yin et al.,

“Widespread choroidal insufficiency in primary open-angle glaucoma,”

J. Glaucoma, 6

(1), 23

–32

(1997). http://dx.doi.org/10.1097/00061198-199702000-00006 JOGLES Google Scholar

T. Fujiwara et al.,

“Enhanced depth imaging optical coherence tomography of the choroid in highly myopic eyes,”

Am. J. Ophthalmol., 148

(3), 445

–450

(2009). http://dx.doi.org/10.1016/j.ajo.2009.04.029 AJOPAA 0002-9394 Google Scholar

R. F. Spaide,

“Age-related choroidal atrophy,”

Am. J. Ophthalmol., 147

(5), 801

–810

(2009). http://dx.doi.org/10.1016/j.ajo.2008.12.010 AJOPAA 0002-9394 Google Scholar

M. Gemenetzi, G. De Salvo and A. J. Lotery,

“Central serous chorioretinopathy: an update on pathogenesis and treatment,”

Eye, 24

(12), 1743

–1756

(2010). http://dx.doi.org/10.1038/eye.2010.130 Google Scholar

I. Maruko et al.,

“Subfoveal choroidal thickness after treatment of Vogt-Koyanagi-Harada disease,”

Retina, 31

(3), 510

–517

(2011). http://dx.doi.org/10.1097/IAE.0b013e3181eef053 RETIDX 0275-004X Google Scholar

R. F. Spaide, H. Koizumi and M. C. Pozonni,

“Enhanced depth imaging spectral-domain optical coherence tomography,”

Am. J. Ophthalmol., 146

(4), 496

–500

(2008). http://dx.doi.org/10.1016/j.ajo.2008.05.032 AJOPAA 0002-9394 Google Scholar

Y. Yasuno et al.,

“In vivo high-contrast imaging of deep posterior eye by swept source optical coherence tomography and scattering optical coherence angiography,”

Opt. Express, 15 6121

–6139

(2007). http://dx.doi.org/10.1364/OE.15.006121 OPEXFF 1094-4087 Google Scholar

J. Tian et al.,

“Automatic segmentation of the choroid in enhanced depth imaging optical coherence tomography images,”

Biomed. Opt. Express, 4

(3), 397

–411

(2013). http://dx.doi.org/10.1364/BOE.4.000397 BOEICL 2156-7085 Google Scholar

D. Alonso-Caneiro, S. A. Read and M. J. Collins,

“Automatic segmentation of choroidal thickness in optical coherence tomography,”

Biomed. Opt. Express, 4

(12), 2795

–2812

(2013). http://dx.doi.org/10.1364/BOE.4.002795 BOEICL 2156-7085 Google Scholar

K. K. Vupparaboina et al.,

“Automated estimation of choroidal thickness distribution and volume based on OCT images of posterior visual section,”

Comput. Med. Imaging Graphics, 46

(3), 315

–327

(2015). http://dx.doi.org/10.1016/j.compmedimag.2015.09.008 Google Scholar

H. Danesh et al.,

“Segmentation of choroidal boundary in enhanced depth imaging OCTs using a multiresolution texture based modeling in graph cuts,”

Comput. Math. Methods Med., 2014 479268

(2014). http://dx.doi.org/10.1155/2014/479268 Google Scholar

Q. Chen et al.,

“Choroidal vasculature characteristics based choroid segmentation for enhanced depth imaging optical coherence tomography images,”

Med. Phys., 43 1649

–1661

(2016). http://dx.doi.org/10.1118/1.4943382 Google Scholar

Q. Chen et al.,

“Automated choroid segmentation based on gradual intensity distance in HD-OCT images,”

Opt. Express, 23

(7), 8974

–8994

(2015). http://dx.doi.org/10.1364/OE.23.008974 OPEXFF 1094-4087 Google Scholar

Z. Hu et al.,

“Semiautomated segmentation of the choroid in spectral-domain optical coherence tomography scans,”

Invest. Ophthalmol. Visual Sci., 54

(3), 1722

–1729

(2013). http://dx.doi.org/10.1167/iovs.12-10578 IOVSDA 0146-0404 Google Scholar

V. Kajic et al.,

“Automated choroidal segmentation of 1060 nm OCT in healthy and pathologic eyes using a statistical model,”

Biomed. Opt. Express, 3

(1), 86

–103

(2012). http://dx.doi.org/10.1364/BOE.3.000086 BOEICL 2156-7085 Google Scholar

L. Zhang et al.,

“Automated segmentation of the choroid from clinical SD-OCT,”

Invest. Ophthalmol. Visual Sci., 53

(12), 7510

–7519

(2012). http://dx.doi.org/10.1167/iovs.12-10311 IOVSDA 0146-0404 Google Scholar

A. Philip et al.,

“Choroidal thickness maps from spectral domain and swept source optical coherence tomography: algorithmic versus ground truth annotation,”

Br. J. Ophthalmol., 100

(10), 1372

–1376

(2016). http://dx.doi.org/10.1136/bjophthalmol-2015-307985 BJOPAL 0007-1161 Google Scholar

L. Zhang et al.,

“Validity of automated choroidal segmentation in SS-OCT and SD-OCT,”

Invest. Ophthalmol. Visual Sci., 56

(5), 3202

–3211

(2015). http://dx.doi.org/10.1167/iovs.14-15669 IOVSDA 0146-0404 Google Scholar

K. Mansouri et al.,

“Assessment of choroidal thickness and volume during water drinking test by swept-source optical coherence tomography,”

Ophthalmology, 120

(12), 2508

–2516

(2013). http://dx.doi.org/10.1016/j.ophtha.2013.07.040 Google Scholar

K. Mansouri et al.,

“Evaluation of retinal and choroidal thickness by swept-source optical coherence tomography: repeatability and assessment of artifacts,”

Am. J. Ophthalmol., 157

(5), 1022

–1032

(2014). http://dx.doi.org/10.1016/j.ajo.2014.02.008 AJOPAA 0002-9394 Google Scholar

F. Shi et al.,

“Automated 3-D retinal layer segmentation of macular optical coherence tomography images with serous pigment epithelial detachments,”

IEEE Trans. Med. Imag., 34

(2), 441

–452

(2015). http://dx.doi.org/10.1109/TMI.2014.2359980 ITMID4 0278-0062 Google Scholar

G. R. Wilkins, O. M. Houghton and A. L. Oldenburg,

“Automated segmentation of intraretinal cystoid fluid in optical coherence tomography,”

IEEE Trans. Biomed. Imag., 59

(4), 1109

–1114

(2012). http://dx.doi.org/10.1109/TBME.2012.2184759 Google Scholar

C. Tomasi and R. Manduchi,

“Bilateral filtering for gray and color images,”

in Proc. IEEE Int. Conf. Computer Vision,

839

–846

(1998). http://dx.doi.org/10.1109/ICCV.1998.710815 Google Scholar

S. Paris and F. Durand,

“A fast approximation of the bilateral filter using a signal processing approach,”

Int. J. Comput. Vision, 81

(1), 568

–580

(2006). http://dx.doi.org/10.1007/11744085_44 IJCVEQ 0920-5691 Google Scholar

J. Canny,

“A computational approach to edge detection,”

IEEE Trans. Pattern Anal. Mach. Intell., PAMI-8

(6), 679

–698

(1986). http://dx.doi.org/10.1109/TPAMI.1986.4767851 ITPIDJ 0162-8828 Google Scholar

K. Li et al.,

“Optimal surface segmentation in volumetric images—a graph-theoretic approach,”

IEEE Trans. Pattern Anal. Mach. Intell., 28

(1), 119

–134

(2006). http://dx.doi.org/10.1109/TPAMI.2006.19 ITPIDJ 0162-8828 Google Scholar

M. K. Garvin et al.,

“Automated 3-D intraretinal layer segmentation of macular spectral-domain optical coherence tomography images,”

IEEE Trans. Med. Imag., 28

(9), 1436

–1447

(2009). http://dx.doi.org/10.1109/TMI.2009.2016958 ITMID4 0278-0062 Google Scholar

Q. Song et al.,

“Optimal multiple surface segmentation with shape and context priors,”

IEEE Trans. Med. Imag., 32

(2), 376

–386

(2013). http://dx.doi.org/10.1109/TMI.2012.2227120 ITMID4 0278-0062 Google Scholar

X. Chen et al.,

“Three-dimensional segmentation of fluid-associated abnormalities in retinal OCT: probability constrained graph-search-graph-cut,”

IEEE Trans. Med. Imag., 31

(8), 1521

–1531

(2012). http://dx.doi.org/10.1109/TMI.2012.2191302 ITMID4 0278-0062 Google Scholar

P. A. Yushkevich et al.,

“User-guided 3D active contour segmentation of anatomical structures: significantly improved efficiency and reliability,”

Neuroimage, 31

(3), 1116

–1128

(2006). http://dx.doi.org/10.1016/j.neuroimage.2006.01.015 NEIMEF 1053-8119 Google Scholar

“Photocoagulation for diabetic macular edema. Early treatment diabetic retinopathy study report number 1,”

Arch Ophthalmol., 103 1796

–1806

(1985). http://dx.doi.org/10.1001/archopht.1985.01050120030015 Google Scholar

BiographyFei Shi is an assistant professor at Soochow University, Suzhou, China. She received her PhD in electrical engineering from Polytechnic University (now New York University Tandon School of Engineering), Brooklyn, United States, in 2006. She has coauthored over 20 papers in internationally recognized journals and conferences. Her current research is focused on medical image processing and analysis. Xinjian Chen is a distinguished professor at Soochow University, Suzhou, China. He received his PhD from the Chinese Academy of Sciences in 2006. He conducted postdoctoral research at the University of Pennsylvania, National Institute of Health, and University of Iowa, USA, from 2008 to 2012. He has published over 80 top international journal and conference papers. He has also been granted with six patents. |