|

|

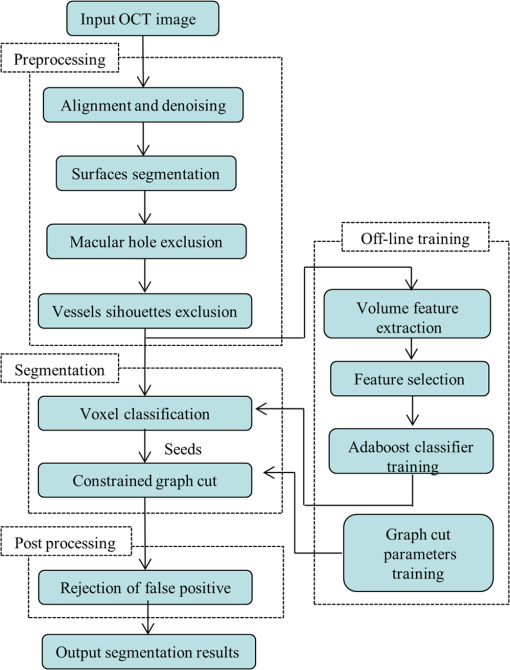

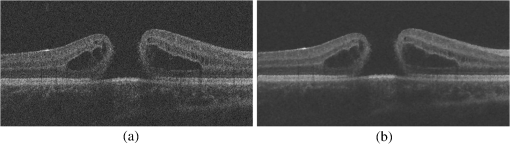

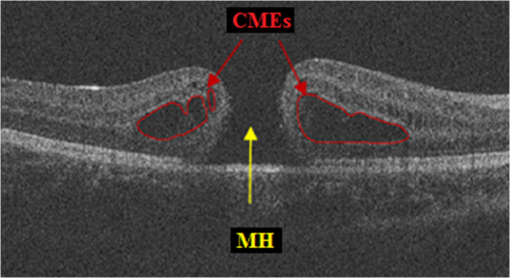

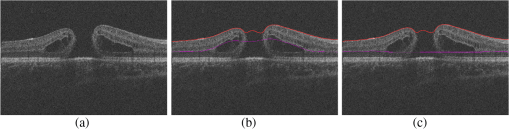

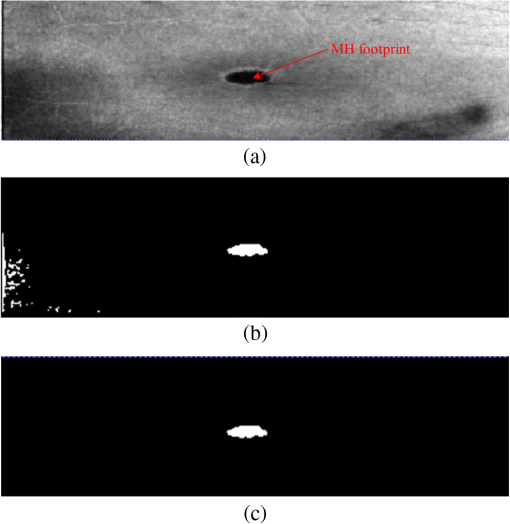

1. Introduction

Optical coherence tomography (OCT) is becoming a mainstay in ophthalmology as a noninvasive imaging technique for the human retina [1–4]. OCT has been widely used in the diagnosis of ocular diseases such as macular edema (ME), macular hole (MH), and glaucoma. The macula is located near the center of the retina and is responsible for central, high-resolution, color vision. Some acute maculopathies may lead to the loss of central vision and even blindness [5]. MH is a full-thickness defect of the neurosensory retina involving the anatomic fovea, which severely affects central visual acuity [6]. The overall incidence of MH in the United States is reported in Ref. 7. MH often progresses gradually and is common in people aged 60 and over [8]. A person with MH may notice a slight distortion or blurriness in straight-ahead vision. Cystoid macular edema (CME) occurs in a wide variety of ocular diseases, such as diabetic retinopathy, intraocular inflammation, and central or branch retinal vein occlusion [9,10]. If CME is left untreated, the final result is permanent deterioration of central vision. Figure 1 shows a B-scan of a 3-D OCT image with CMEs and MH. The red curves show the manual segmentation of the CMEs and the yellow arrow shows the position of the MH. In clinical practice, when CMEs and MH co-occur, the CMEs usually surround the MH, as in Fig. 1. It has been shown that the volume of CMEs in the retina can be an accurate predictor of visual acuity [10]. Furthermore, the volume of CMEs can provide better metrics for designing treatment protocols. Therefore, automated methods for CMEs segmentation in 3-D OCT images with coexisting CMEs and MH are urgently needed.

Fig. 1. Example of the coexistence of MH and CMEs in an optical coherence tomography B-scan.

Several previous studies have focused on the segmentation of CMEs. Both semiautomated and automated methods have been studied.
The semiautomated methods rely on manual initialization in each B-scan to quantify the volume of CMEs. Kashani et al. [11] used the OCTOR software to quantify the cystoid spaces in eyes. Fernández [12] proposed a method in which, after manual initialization of a snake, the fluid regions in 2-D OCT B-scans are detected using a deformable model. Zheng et al. [13] developed a technique that combines computerized segmentation with minimal expert interaction to quantify the volume of CMEs. Although these methods can measure the volume of CMEs accurately, they are semiautomatic and therefore laborious and time-consuming.

Several automated methods that obtain satisfactory results have also been proposed. Wilkins et al. [14] segmented the CMEs in 3-D OCT images by thresholding and boundary tracing. Roychowdhury et al. [15] localized cysts in diabetic macular edema (DME) by iterative high-pass filtering, with further analysis using solidity, mean, and maximum pixel value as decisive features. Sisternes et al. [16] segmented the cysts in 3-D OCT images using lasso regularization-based regression and adaptive thresholding. Esmaeili et al. [17] proposed a 3-D curvelet transform-based dictionary learning method for automatic segmentation of cysts. Girish et al. [18] proposed a marker-controlled watershed transform-based method to segment cysts on 2-D OCT B-scan images. Venhuizen et al. [19] proposed a multiscale convolutional neural network-based method to predict whether an image voxel belongs to a cyst. Wang et al. [20] proposed a fuzzy level set-based method to automatically segment the intraretinal fluid and subretinal fluid in DME eyes using structural OCT and angiography OCT data. However, these methods may fail when MH and CMEs coexist, for two reasons.
First, most CMEs segmentation methods for OCT images are based on the intensity differences between CMEs and the other retinal tissues. Because the intensity of MH is similar to that of CMEs, the MH is very likely to be segmented as a false positive (FP) region. Second, some of the above methods, e.g., Sisternes et al. [16], use the position of the fovea center because CMEs usually surround the fovea. When MH co-occurs, the normal shape of the retina is altered and the correct position of the fovea center cannot be detected, which may be another cause of FPs. Therefore, new methods that can fully segment the CMEs in retinas with MH are urgently needed.

The automatic segmentation of CMEs coexisting with MH is a challenging problem. The key difficulties are twofold: (1) MH strongly disturbs CMEs segmentation, and MH itself cannot be easily segmented because its shape may be irregular and even distorted by detached tissues, such as in posterior vitreous detachment [21]. (2) The number and size of CMEs are always uncertain, making it difficult to quantify the volume of the cysts.

In this paper, we propose an automated framework for intraretinal CME segmentation in 3-D OCT images with MH. The proposed method consists of two steps: preprocessing and CMEs segmentation. In the preprocessing step, signal-to-noise ratio (SNR) balancing, 3-D curvature anisotropic diffusion filtering, layer segmentation and flattening, and MH and vessel silhouette exclusion are applied to the OCT scans. In the CMEs segmentation step, first, an AdaBoost classifier with 57 features is employed for the coarse segmentation. Second, morphological dilation and erosion operations are used to generate the foreground (CMEs) seeds and background seeds for the subsequent graph cut-based segmentation. Third, an automatic shape-constrained graph cut algorithm is applied for the fine segmentation.
Finally, the FPs are eliminated according to the CMEs area-related information. The flowchart of the proposed method is shown in Fig. 2.

2. Materials and Methods

2.1. Subjects and Data Collection

The collection and analysis of image data were approved by the Institutional Review Board of Joint Shantou International Eye Center (JSIEC), Shantou University and the Chinese University of Hong Kong, and adhered to the tenets of the Declaration of Helsinki. Because of the retrospective nature of the study, informed consent was not required from subjects. The medical records and OCT database of JSIEC from December 2008 to 2013 were searched and reviewed. In total, 19 eyes with coexisting CMEs and MH from 18 subjects were included; each underwent a macular-centered SD-OCT scan (Topcon 3-D OCT-1000). There were 8 males and 10 females (age range: 9 to 66 years). Subjects with other eye diseases were excluded, except for refractive error. The raw, uncompressed data were exported from the OCT machine in “.fds” format for analysis.

2.2. Ground Truth Delineation

All the CME regions in the 3-D OCT images were manually marked slice by slice by two observers independently, under the direction of an experienced ophthalmologist. The two sets of marked results are considered as ground truth one (GT1) and ground truth two (GT2), respectively.

2.3. Preprocessing

The OCT volume is first segmented into 10 layers by 11 surfaces using the multiscale 3-D graph-search approach [22–25]. To remove the image deformation caused by eye movement, the 3-D OCT volume is flattened based on the retinal pigment epithelium (surface 11) [26–28]. The inner retina is defined as the region between surfaces 1 and 6, and the outer retina refers to the region between surfaces 7 and 11. As the CMEs are mainly located in the inner retina, the inner retina is our initial volume of interest (VOI), so the precise segmentation of surfaces 1 and 6 is necessary. As shown in Fig.
3(b), surface 6 is well detected by the 3-D graph-search-based approach except in the region around the CMEs, owing to the coexistence of CMEs and MH. Surface 6 is then adjusted by replacing it with the lower boundary of its convex hull [29]. Figure 3(c) shows the results after adjustment.

Fig. 3. Illustration of layer segmentation and adjustment. (a) One slice from the original 3-D OCT image. (b) Layer segmentation results of surface 1 (red) and surface 6 (purple) by the graph-search method. (c) Layer segmentation after surface 6 adjustment.

The OCT images acquired from different patients have different intensity profiles, which affects the CMEs segmentation. In this paper, SNR balancing [14] is applied to each B-scan to obtain a uniform intensity profile. The noise level is taken as the mean intensity within a window in the background. The signal level is taken as the mean intensity within a window in the region between surfaces 1 and 6. Each original intensity value is then mapped to a final, SNR-balanced intensity value following Ref. 14.

Speckle noise is the main quality-degrading factor in OCT scans. The denoising method applied to OCT images should suppress speckle noise efficiently while maintaining edge-like features. A 3-D curvature anisotropic diffusion filter is adopted in this paper. This filter performs anisotropic diffusion using the modified curvature diffusion equation (MCDE) [30], which can efficiently enhance the contrast of edges. The MCDE is expressed in terms of the input image and a conductance function, through a conductance-modified curvature term.
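For reference, the MCDE is commonly written in the form below. This is a sketch of the standard statement found in the literature and in common filter documentation, not a transcription of the authors' own notation; the symbols $f$ (image), $c(\cdot)$ (conductance function), and $K$ (conductance parameter) are assumed names.

```latex
% Modified curvature diffusion equation (assumed standard form)
\frac{\partial f}{\partial t}
  = |\nabla f| \; \nabla \cdot \left( c\!\left(|\nabla f|\right) \frac{\nabla f}{|\nabla f|} \right)

% A common Perona-Malik-style conductance (an assumption; the source does not state its choice)
c(s) = \exp\!\left( -\left( \frac{s}{K} \right)^{2} \right)
```

With $c \equiv 1$ the right-hand side reduces to $|\nabla f|\,\nabla \cdot (\nabla f / |\nabla f|)$, i.e., plain mean-curvature motion of the level sets; the conductance function slows this smoothing where the gradient magnitude is large, which is how edges are preserved.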
Figure 4(b) shows a B-scan image after the 3-D curvature anisotropic diffusion filtering, in which the speckle noise is effectively reduced.

2.4. Macular Hole Detection and Vessel Silhouettes Exclusion

Because the MH and vessel silhouettes have intensity values similar to those of the CMEs, it is necessary to isolate the MH and vessel silhouettes before extracting the CMEs volumetric features. This procedure reduces both the volume of data to be analyzed and the number of FPs. The likelihood that a point belongs to an MH footprint can be calculated from the 2-D projection image [31]. Here, a 2-D projection image is generated by averaging the OCT subvolume between surfaces 1 and 6 along the depth direction, as shown in Fig. 5(a). Then a footprint of MH is defined by thresholding the projection image [intensities in the range (0, 255)]. The initial result is shown in Fig. 5(b). Because the footprint of MH is usually located around the center of the 2-D projection image, the falsely detected regions near the border, caused by signal attenuation, are discarded. The final result of the MH footprint is shown in Fig. 5(c).

Fig. 5. MH footprint detection. (a) The 2-D projection image from surfaces 1 to 6. (b) Rough detection results by thresholding. (c) MH footprint detection result after removing FPs.

With the detected MH footprint, an improved region-growing method is applied to delineate the MH in the 3-D OCT image. First, the seeds for region growing are determined based on the following criteria:

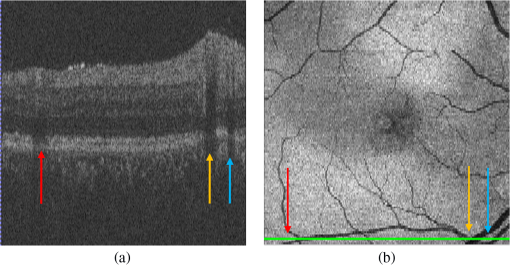

Second, with the detected seeds, the region-growing method becomes automatic. The neighbors of the seeds are searched, and whether they belong to the object region is determined according to their intensity.

The CMEs segmentation results may occasionally include low-intensity regions, such as the vessel silhouettes shown in Fig. 6. The arrows indicate vessel silhouettes formed by the vasculature of the retina. The green line in (b) indicates the location of the slice shown in (a) on the projection image of the retina. The vessel silhouettes should be detected and excluded before the CMEs segmentation. Here, the vessel silhouettes are detected using a vessel detector [32]. First, a 2-D projection image [Fig. 6(b)] is calculated by averaging the intensities of pixels in the outer retina (from surfaces 7 to 11) along the depth direction, as the vessel silhouettes have excellent contrast only in the outer retina. Then the vessel silhouettes are segmented from the projection image by a k-nearest neighbor (KNN) classifier with 31 features. Finally, voxels in the inner retina that share the same lateral locations as the vessel silhouettes are excluded from the VOI.

Fig. 6. Vessel silhouettes appear as low-intensity regions, which may cause FPs. (a) The arrows indicate vessel silhouettes on a B-scan image. (b) The green line indicates the location of the slice shown in (a) on the projection image.

Finally, the subvolume of the retina between surfaces 1 and 6, without the MH and the vessel silhouettes, is defined as our real VOI.

2.5. AdaBoost Classifier-Based Coarse Cystoid Macular Edemas Segmentation

After preprocessing, 57 features are extracted for each voxel in the VOI, including textural and structural information of the image. Table 1 shows the list of features. Features 1 to 8 describe the local texture of the image.
The first 6 features were defined in our previous research [33]. The skewness and kurtosis are used to describe the statistical distribution of the intensity values in the VOI. Features 9 to 57 describe the local image structure. To speed up the feature extraction phase, the training images are subsampled. Then, an AdaBoost classifier is applied for the coarse segmentation of CMEs in the VOI. AdaBoost is an iterative boosting algorithm that constructs a strong classifier as a linear combination of weak classifiers [34,35]. The algorithm takes a set of labeled samples as input, where each sample consists of a feature vector (57-dimensional in this paper) and its corresponding label, set to 1 or 0 for cyst or noncyst voxels.

Table 1. List of the features used in the AdaBoost classifier.

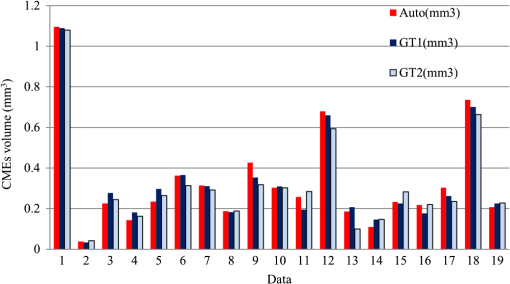

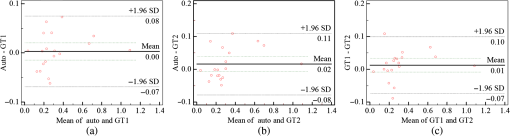

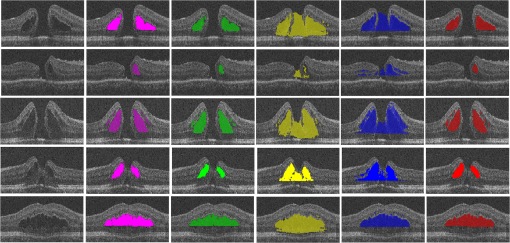

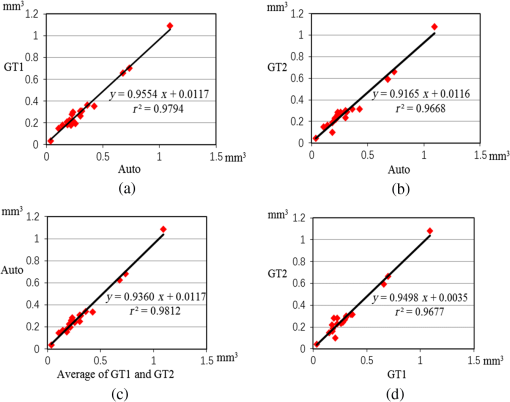
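The boosting scheme described before Table 1 (a strong classifier built as a weighted vote of weak classifiers, with labels 1 for cyst and 0 for noncyst) can be illustrated with a minimal pure-Python sketch. Everything specific here is an assumption made for illustration: the weak learners are single-feature threshold stumps (the text does not say which weak learner was used), the toy samples have two made-up features instead of 57, and the function names are hypothetical.

```python
import math

EPS = 1e-10  # floor for the weighted error, avoids log(0)


def stump_predict(row, feat, thr, polarity):
    """Weak classifier: +1 or -1 from a threshold on one feature."""
    return 1 if polarity * (row[feat] - thr) >= 0 else -1


def train_stump(X, y, w):
    """Exhaustively pick the stump with the lowest weighted error."""
    best = None
    for feat in range(len(X[0])):
        for thr in sorted({row[feat] for row in X}):
            for polarity in (1, -1):
                err = sum(
                    wi
                    for row, yi, wi in zip(X, y, w)
                    if stump_predict(row, feat, thr, polarity) != yi
                )
                if best is None or err < best[0]:
                    best = (err, feat, thr, polarity)
    return best


def adaboost_train(X, labels01, n_rounds=10):
    """Discrete AdaBoost; returns a list of (alpha, feat, thr, polarity)."""
    y = [1 if label == 1 else -1 for label in labels01]  # map {0,1} -> {-1,+1}
    w = [1.0 / len(X)] * len(X)                          # uniform start weights
    model = []
    for _ in range(n_rounds):
        err, feat, thr, pol = train_stump(X, y, w)
        err = min(max(err, EPS), 1.0 - EPS)
        alpha = 0.5 * math.log((1.0 - err) / err)        # weight of this stump
        model.append((alpha, feat, thr, pol))
        # Raise the weights of misclassified samples, lower the others.
        w = [
            wi * math.exp(-alpha * yi * stump_predict(row, feat, thr, pol))
            for row, yi, wi in zip(X, y, w)
        ]
        total = sum(w)
        w = [wi / total for wi in w]
        if err <= EPS:                                   # perfect stump: stop
            break
    return model


def adaboost_predict(model, row):
    """Sign of the weighted vote, mapped back to 1 (cyst) / 0 (noncyst)."""
    score = sum(a * stump_predict(row, f, t, p) for a, f, t, p in model)
    return 1 if score >= 0 else 0


# Toy data (hypothetical): [mean intensity, local variance]; dark voxels = cyst.
toy_X = [[10, 0.2], [20, 0.1], [15, 0.3], [200, 0.2], [180, 0.4], [220, 0.1]]
toy_y = [1, 1, 1, 0, 0, 0]
toy_model = adaboost_train(toy_X, toy_y, n_rounds=5)
print(adaboost_predict(toy_model, [12, 0.25]))   # dark voxel   -> 1
print(adaboost_predict(toy_model, [210, 0.30]))  # bright voxel -> 0
```

On real data the weak learners rarely separate the classes in one round, so the reweighting loop runs for many rounds and each new stump concentrates on the voxels the previous ones misclassified.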

The coarsely detected CME regions are processed by morphological dilation and erosion operations to generate the object and background seeds for the subsequent graph cut-based fine CMEs segmentation.

2.6. Constrained Graph Cut-Based Fine Cystoid Macular Edemas Segmentation

The graph cut algorithm has been widely used for image segmentation in recent years [36–40]. The energy function of the traditional graph cut includes a regional term and a boundary term. Obtaining seeds is an important step of the graph cut algorithm. With the seeds generated in the coarse segmentation step, an automated shape-constrained graph cut method is applied for the final fine segmentation [41]. In the graph cut algorithm, the overall problem is formulated as an energy minimization problem [42]. The energy function of the shape-constrained graph cut is a weighted combination of a data term, a shape term, and a boundary term, each with its own weight. The data term is defined from the probability that a voxel belongs to the object or not. The boundary term is defined on the intensity gradient between adjacent voxels. These two terms depend on the intensity of each voxel and the label assigned to it, on the probabilities that the voxel belongs to CME and non-CME (which can be calculated from the histograms of the CME and non-CME voxels during the training phase), on the distance between the two adjacent voxels, and on the standard deviation of the voxel intensity. The shape term is defined from the distance between a voxel and the coarse CMEs segmentation result of the AdaBoost classification, together with the radius of a circle that can just enclose the CMEs. The shape-constrained graph cut is implemented on the VOI to refine the CMEs region.

2.7. False Positive Rejection

The CMEs FPs may be caused by noise and by retinal regions with low intensity values.
Connected component detection based on morphological operations is used to reject the FPs. If the area of a detected connected region is smaller than 6 pixels, it is discarded as an FP.

3. Results

A leave-one-out cross-validation strategy was used for training and testing the AdaBoost classifier and for the parameters used in the graph cut. To objectively evaluate the CME volume segmentation results, the true positive volume fraction (TPVF), FP volume fraction (FPVF), accuracy rate (ACC), and Dice similarity coefficient (DSC) are used in the experiment [43,44]. TPVF indicates the fraction of correctly detected volume relative to the reference standard. FPVF denotes the fraction of incorrectly detected volume in the true negative volume. ACC indicates the detection accuracy. DSC measures the similarity between the automated segmentation results and the ground truth. They are defined as follows:

$$\mathrm{TPVF} = \frac{|TP|}{|GT|}, \qquad \mathrm{FPVF} = \frac{|FP|}{|VOI| - |GT|},$$

$$\mathrm{ACC} = \frac{|TP| + |TN|}{|TP| + |FP| + |TN| + |FN|}, \qquad \mathrm{DSC} = \frac{2\,|TP|}{2\,|TP| + |FP| + |FN|},$$

where $|\cdot|$ denotes volume, $TP$ denotes the true positive set, $FP$ denotes the FP set, $TN$ denotes the true negative set, and $FN$ denotes the false negative set. $GT$ denotes the ground truth, and $VOI$ denotes the VOI.

As shown in Table 2, the mean TPVF, FPVF, ACC, and DSC for the proposed method were 81.0%, 0.80%, 99.7%, and 80.9%, respectively. The proposed method was compared with the coarse CMEs segmentation based only on the AdaBoost algorithm, the 2-D thresholding segmentation method proposed in Ref. 14, and the lasso regularization-based regression method proposed in Ref. 16. Compared with the results of the AdaBoost algorithm, the DSC of the proposed method increased slightly (with a p-value of 0.061 in the paired t-test) and the corresponding ACC showed a significant improvement. The results of the proposed 3-D method showed a more significant improvement compared with the results of the 2-D thresholding segmentation proposed in Ref. 14. Though the average TPVF of the regression method proposed in Ref.
16 is higher than that of the proposed method, its FPVF, ACC, and DSC are much poorer than those of the proposed method, which is caused by large FP regions.

Table 2. Mean ± standard deviation comparison of the CMEs segmentation results.

Figure 7 shows five examples of CMEs segmentation results. The first column shows the original image, the second column shows the coarse segmentation results of the AdaBoost classifier, the third column shows the final fine segmentation results, the fourth column shows the segmentation results of the 2-D thresholding segmentation method proposed in Ref. 14, the fifth column shows the segmentation results of the lasso regularization-based regression method proposed in Ref. 16, and the last column shows GT1. Note the improvements between columns 2 and 3. There are FPs caused by the presence of MH in columns 3 and 4.

Fig. 7. Experimental results for five examples of CMEs segmentation. The first column shows the original image, the second column shows the segmentation results of the AdaBoost classifier, the third column shows the final fine segmentation results, the fourth column shows the segmentation results of the 2-D thresholding segmentation method proposed in Ref. 14, the fifth column shows the segmentation results of the lasso regularization-based regression method proposed in Ref. 16, and the last column shows GT1.

Figure 8 shows the linear regression analysis results among the proposed method, GT1, and GT2. The correlation coefficients between the proposed method and GT1, GT2, and the average of GT1 and GT2 were 0.9794, 0.9668, and 0.9812, respectively. The correlation coefficient between GT1 and GT2 was 0.9677, which indicates that the two ground truths are highly consistent.

Fig. 8. Statistical correlation analysis between the automated method, GT1, and GT2. (a) The linear regression analysis results between GT1 and the automatic method. (b) The linear regression analysis results between GT2 and the automatic method.
(c) The linear regression analysis results between the automatic method and the average of GT1 and GT2. (d) The linear regression analysis results between GT1 and GT2.

Figure 9 shows the CMEs volumes of the 19 eyes obtained by three methods. Bland–Altman plots were used to evaluate the agreement between two different methods. The Bland–Altman plots for the proposed method (auto) versus GT1 and GT2 are shown in Fig. 10. The points were mostly located within the 95% limits of agreement (mean difference ± 1.96 standard deviations of the difference), which indicates good agreement between the proposed method and both GT1 and GT2.

4. Discussion and Conclusions

Automated segmentation of CMEs in 3-D OCT images is a challenging task because of the variation in the shape and size of CMEs and the textural similarity between the foreground and background. The experimental results showed the good performance of the proposed method. The FPVF of the proposed method was low, which may be due to two factors: (1) compared with the VOI region between surfaces 1 and 6, the volume of the CMEs is very small; (2) the size-based FP exclusion in the postprocessing is very effective. The CMEs can be effectively segmented by the proposed method. With coexistence of MH, both the 2-D thresholding method proposed in Ref. 14 and the lasso regularization-based regression method proposed in Ref. 16 fail to exclude the MH effectively, because the intensity values of MH and CMEs are similar and neither of these two methods uses the spatial and shape information about the MH. Our contributions are summarized as follows:

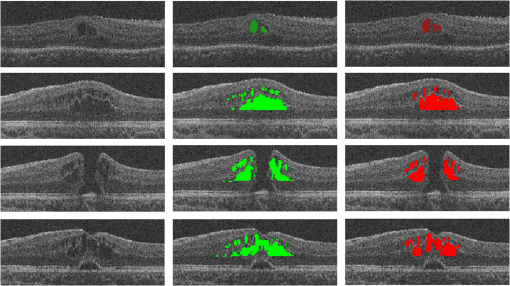

Fig. 11. Segmentation results for CMEs with unpredictable locations and ambiguous boundaries. The first column shows the original image, the second column shows the segmentation results of the proposed method, and the third column shows GT1.

However, there are still some limitations in our method. (1) The proposed method can accurately detect large cysts, but it is not sensitive to very small cysts. This will be improved in the near future. (2) The final CMEs detection results rely heavily on the preprocessing results. If the MH exclusion result is incorrect, the final segmentation results may include falsely detected regions in the MH. A better algorithm for MH detection is needed. (3) A larger dataset will be explored in the near future for further validation of the proposed method.

In conclusion, an effective approach is proposed to segment the CMEs in 3-D OCT images with coexistence of MH. This may provide a clinically useful tool to help ophthalmologists with disease diagnosis.

Disclosures

Li Zhang has a financial interest in Oracle Inc., which, however, did not support this work. No conflicts of interest, financial or otherwise, are declared by the other authors.

Acknowledgments

This study was supported by the National Basic Research Program of China (973), the Young Scientist Program Foundation of China (2014CB748600), the National Natural Science Foundation of China (81401472), and in part by the National Natural Science Foundation of China for Excellent Young Scholars (61622114), the National Natural Science Foundation of China (61401294, 61401293, and 81371629), the Natural Science Foundation of Jiangsu Province (BK20140052), the International Cooperation Project of Ministry of Science and Technology (2016YFE010770), and the Research Grant from the Joint Shantou International Eye Center (2012–2018).

References

1. M. D. Abràmoff, M. K. Garvin and M. Sonka, “Retinal imaging and image analysis,” IEEE Rev. Biomed. Eng., 3, 169–208 (2010). http://dx.doi.org/10.1109/RBME.2010.2084567

2. C. Cukras et al., “Optical coherence tomography-based decision making in exudative age-related macular degeneration: comparison of time vs spectral-domain devices,” Eye, 24(5), 775–783 (2010). http://dx.doi.org/10.1038/eye.2009.211

3. D. Huang et al., “Optical coherence tomography,” Science, 254(5035), 1178–1181 (1991). http://dx.doi.org/10.1126/science.1957169

4. E. A. Swanson et al., “In vivo retinal imaging by optical coherence tomography,” Opt. Lett., 18(21), 1864–1866 (1993). http://dx.doi.org/10.1364/OL.18.001864

5. Y. Liu et al., “Automated macular pathology diagnosis in retinal OCT images using multi-scale spatial pyramid and local binary patterns in texture and shape encoding,” Med. Image Anal., 15(5), 748–759 (2011). http://dx.doi.org/10.1016/j.media.2011.06.005

6. H. Faghihi et al., “Spontaneous closure of traumatic macular holes,” Can. J. Ophthalmol., 49(4), 395–398 (2014). http://dx.doi.org/10.1016/j.jcjo.2014.04.017

7. W. E. Smiddy and H. W. Flynn, “Pathogenesis of macular holes and therapeutic implications,” Am. J. Ophthalmol., 137(3), 525–537 (2004). http://dx.doi.org/10.1016/j.ajo.2003.12.011

8. A. Luckie and W. Heriot, “Macular holes. Pathogenesis, natural history and surgical outcomes,” Aust. N. Z. J. Ophthalmol., 23(2), 93–100 (1995). http://dx.doi.org/10.1111/j.1442-9071.1995.tb00136.x

9. T. G. Rotsos and M. M. Moschos, “Cystoid macular edema,” Clin. Ophthalmol., 2(4), 919–930 (2008). http://dx.doi.org/10.2147/OPTH

10. L. Pelosini et al., “Optical coherence tomography may be used to predict visual acuity in patients with macular edema,” Invest. Ophthalmol. Visual Sci., 52(5), 2741–2748 (2011). http://dx.doi.org/10.1167/iovs.09-4493

11. A. H. Kashani et al., “Quantitative subanalysis of cystoid spaces and outer nuclear layer using optical coherence tomography in age-related macular degeneration,” Invest. Ophthalmol. Visual Sci., 50(7), 3366–3373 (2009). http://dx.doi.org/10.1167/iovs.08-2691

12. D. C. Fernández, “Delineating fluid-filled region boundaries in optical coherence tomography images of the retina,” IEEE Trans. Med. Imaging, 24(8), 929–945 (2005). http://dx.doi.org/10.1109/TMI.2005.848655

13. Y. Zheng et al., “Computerized assessment of intraretinal and subretinal fluid regions in spectral-domain optical coherence,” Am. J. Ophthalmol., 155(2), 277–286 (2013). http://dx.doi.org/10.1016/j.ajo.2012.07.030

14. G. R. Wilkins, O. M. Houghton and A. L. Oldenburg, “Automated segmentation of intraretinal cystoid fluid in optical coherence tomography,” IEEE Trans. Biomed. Eng., 59(4), 1109–1114 (2012). http://dx.doi.org/10.1109/TBME.2012.2184759

15. S. Roychowdhury et al., “Automated localization of cysts in diabetic macular edema using optical coherence tomography images,” in 35th Annual Int. Conf. of the IEEE Engineering in Medicine and Biology Society (EMBC 2013), 1426–1429 (2013). http://dx.doi.org/10.1109/EMBC.2013.6609778

16. L. D. Sisternes et al., “A machine learning approach for device-independent automated segmentation of retinal cysts in spectral domain optical coherence tomography images,” in Proc. MICCAI 2015 OPTIMA Challenge (2015).

17. M. Esmaeili et al., “Three-dimensional segmentation of retinal cysts from spectral-domain optical coherence tomography images by the use of three-dimensional curvelet based K-SVD,” J. Med. Signals Sens., 6(3), 166–171 (2016).

18. G. N. Girish, A. R. Kothari and J. Rajan, “Automated segmentation of intra-retinal cysts from optical coherence tomography scans using marker controlled watershed transform,” in IEEE 38th Annual Int. Conf. of the Engineering in Medicine and Biology Society (EMBC 2016), 1292–1295 (2016). http://dx.doi.org/10.1109/EMBC.2016.7590943

19. F. Venhuizen et al., “Fully automated segmentation of intraretinal cysts in 3D optical coherence tomography,” in ARVO Annual Meeting Abstract, 5949 (2016).

20. J. Wang et al., “Automated volumetric segmentation of retinal fluid on optical coherence tomography,” Biomed. Opt. Express, 7(4), 1577–1589 (2016). http://dx.doi.org/10.1364/BOE.7.001577

21. U. C. Christensen, “Value of internal limiting membrane peeling in surgery for idiopathic macular hole and the correlation between function and retinal morphology,” Acta Ophthalmol., 87, 1–23 (2009). http://dx.doi.org/10.1111/j.1755-3768.2009.01777.x

22. K. Li et al., “Optimal surface segmentation in volumetric images-a graph-theoretic approach,” IEEE Trans. Pattern Anal. Mach. Intell., 28(1), 119–134 (2006). http://dx.doi.org/10.1109/TPAMI.2006.19

23. X. J. Chen et al., “3D segmentation of fluid-associated abnormalities in retinal OCT: probability constrained graph-search–graph-cut,” IEEE Trans. Med. Imaging, 31(8), 1521–1531 (2012). http://dx.doi.org/10.1109/TMI.2012.2191302

24. F. Shi et al., “Automated 3-D retinal layer segmentation of macular optical coherence tomography images with serous pigment epithelial detachments,” IEEE Trans. Med. Imaging, 34(2), 441–452 (2015). http://dx.doi.org/10.1109/TMI.2014.2359980

25. F. Shi et al., “Automated choroid segmentation in three-dimensional 1-μm wide-view OCT images with gradient and regional costs,” J. Biomed. Opt., 21(12), 126017 (2016). http://dx.doi.org/10.1117/1.JBO.21.12.126017

26. X. J. Chen et al., “Quantification of external limiting membrane disruption caused by diabetic macular edema from SD-OCT,” Invest. Ophthalmol. Visual Sci., 53(13), 8042–8048 (2012). http://dx.doi.org/10.1167/iovs.12-10083

27. M. K. Garvin et al., “Automated 3-D intraretinal layer segmentation of macular spectral-domain optical coherence tomography images,” IEEE Trans. Med. Imaging, 28(9), 1436–1447 (2009). http://dx.doi.org/10.1109/TMI.2009.2016958

28. K. Lee et al., “Segmentation of the optic disc in 3-D OCT scans of the optic nerve head,” IEEE Trans. Med. Imaging, 29(1), 159–168 (2010). http://dx.doi.org/10.1109/TMI.2009.2031324

29. R. C. Gonzalez, R. E. Woods and S. L. Eddins, Digital Image Processing Using MATLAB, Pearson Prentice-Hall, New Jersey (2004).

30. R. Whitaker and X. Xue, “Variable-conductance, level-set curvature for image denoising,” in Proc. of the Int. Conf. on Image Processing, 142–145 (2001). http://dx.doi.org/10.1109/ICIP.2001.958071

31. G. Quellec et al., “Three-dimensional analysis of retinal layer texture: identification of fluid-filled regions in SD-OCT of the macula,” IEEE Trans. Med. Imaging, 29(6), 1321–1330 (2010). http://dx.doi.org/10.1109/TMI.2010.2047023

32. M. Niemeijer et al., “Vessel segmentation in 3D spectral OCT scans of the retina,” Proc. SPIE, 6914, 69141R (2008). http://dx.doi.org/10.1117/12.772680

33. L. Y. Wang et al., “Support vector machine based IS/OS disruption detection from SD-OCT images,” Proc. SPIE, 9034, 90341U (2014). http://dx.doi.org/10.1117/12.2043439

34. C. A. Lupascu, D. Tegolo and E. Trucco, “FABC: retinal vessel segmentation using AdaBoost,” IEEE Trans. Inf. Technol. Biomed., 14(5), 1267–1274 (2010). http://dx.doi.org/10.1109/TITB.2010.2052282

35. X. Li, L. Wang and E. Sung, “AdaBoost with SVM-based component classifiers,” Eng. Appl. Artif. Intell., 21(5), 785–795 (2008). http://dx.doi.org/10.1016/j.engappai.2007.07.001

36. X. J. Chen and U. Bağci, “3D automatic anatomy segmentation based on iterative graph-cut-ASM,” Med. Phys., 38(8), 4610–4622 (2011). http://dx.doi.org/10.1118/1.3602070

37. Y. Boykov and G. Funka-Lea, “Graph cuts and efficient N–D image segmentation,” Int. J. Comput. Vision, 70(2), 109–131 (2006). http://dx.doi.org/10.1007/s11263-006-7934-5

38. X. J. Chen et al., “Medical image segmentation by combining graph cut and oriented active appearance models,” IEEE Trans. Image Process., 21(4), 2035–2046 (2012). http://dx.doi.org/10.1109/TIP.2012.2186306

39. Y. Y. Boykov and M. P. Jolly, “Interactive graph cuts for optimal boundary & region segmentation of objects in N-D Images,” in Proc. of the Eighth IEEE Int. Conf. on Computer Vision (ICCV 2001), 105–112 (2001). http://dx.doi.org/10.1109/ICCV.2001.937505

40. X. J. Chen et al., “GC-ASM: synergistic integration of graph-cut and active shape model strategies for medical image segmentation,” Comput. Vision Image Understanding, 117(5), 513–524 (2013). http://dx.doi.org/10.1016/j.cviu.2012.12.001

41. X. J. Chen et al., “A framework of whole heart extracellular volume fraction estimation for low dose cardiac CT images,” IEEE Trans. Inf. Technol. Biomed., 16(5), 842–851 (2012). http://dx.doi.org/10.1109/TITB.2012.2204405

42. Y. Boykov and V. Kolmogorov, “An experimental comparison of min-cut/max-flow algorithms for energy minimization in vision,” IEEE Trans. Pattern Anal. Mach. Intell., 26(9), 1124–1137 (2004). http://dx.doi.org/10.1109/TPAMI.2004.60

43. J. K. Udupa et al., “A framework for evaluating image segmentation algorithms,” Comput. Med. Imaging Graphics, 30(2), 75–87 (2006). http://dx.doi.org/10.1016/j.compmedimag.2005.12.001

44. W. Ju et al., “Random walk and graph cut for co-segmentation of lung tumor on PET-CT images,” IEEE Trans. Image Process., 24(12), 5854–5867 (2015). http://dx.doi.org/10.1109/TIP.2015.2488902

Biography

Weifang Zhu is an associate professor at Soochow University, Suzhou, China. She received her PhD in electrical engineering from Soochow University, China, in 2013. She has coauthored over 15 papers in internationally recognized journals and conferences. Her current research is focused on medical image processing and analysis.

Xinjian Chen is a distinguished professor at Soochow University, Suzhou, China. He received his PhD from the Chinese Academy of Sciences in 2006. He conducted postdoctoral research at the University of Pennsylvania, the National Institutes of Health, and the University of Iowa, USA, from 2008 to 2012. He has published over 80 papers in top international journals and conferences. He has also been awarded six patents. |